In 2025, uncensored AI models have become one of the most controversial developments in artificial intelligence. Unlike ChatGPT, Claude, or Gemini—which have built-in safety filters and ethical guidelines—uncensored models operate with minimal to zero content restrictions, allowing them to respond to virtually any prompt without hesitation. While this promises unprecedented creative freedom and unfiltered information access, it simultaneously opens dangerous doors for cybercriminals, malicious actors, and threat groups seeking to exploit AI for illegal activities. This comprehensive guide explores what uncensored AI models are, how they work, their legitimate use cases, and most importantly, the serious safety risks they pose in 2025 and beyond.

What Are Uncensored AI Models?

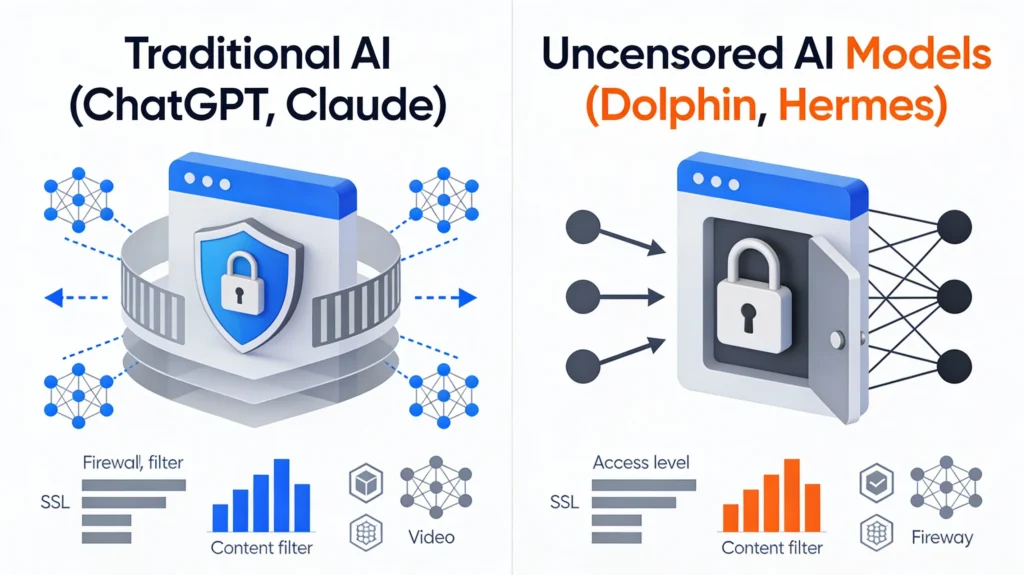

Uncensored AI models are large language models (LLMs) that operate without built-in content filters, safety alignment mechanisms, or ethical guardrails. Unlike traditional commercial AI systems trained with extensive safety protocols, uncensored models are fine-tuned versions of open-source base models that have been stripped of their refusal mechanisms. These models can generate responses on virtually any topic—from harmful instructions to dangerous technical information—without the typical warnings, refusals, or ethical disclaimers that regulated AI systems provide.

The fundamental difference lies in how they’re trained. Traditional AI models like GPT-4 undergo Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI training, processes that teach models to recognize and refuse harmful requests. Uncensored models, by contrast, are created through a technique called abliteration—a surgical attack that identifies and removes the specific neural pathways responsible for safety refusals, enabling the model to generate unrestricted content.

“An AI system is ‘uncensored’ if it can process and create information freely,” according to industry analysis. “Unlike commercial AI models, it doesn’t have content filters or bias controls. This freedom makes it great for research and innovation.” However, this freedom comes at a cost that security researchers and policymakers are only beginning to understand.

Top Uncensored AI Models in 2025

Several prominent uncensored models have emerged as industry leaders, each offering different capabilities and performance profiles:

- LLaMA-3.2 Dark Champion Abliterated (128k): The most advanced uncensored model available, featuring a massive 128,000-token context window. This Mixture-of-Experts (MOE) architecture is designed for processing enormous documents, codebases, and research papers without restriction.

- Dolphin 3: Built on Mistral’s architecture, Dolphin 3 is recognized as one of the most powerful uncensored models, providing fast and accurate responses without any content filtering. It’s particularly effective at following complex instructions and maintaining coherent long-form generation.

- Nous Hermes 3 (Llama 3.1 70B): Developed by Nous Research, Hermes-3 combines state-of-the-art instruction-following with practical deployment efficiency. Independent benchmarks show it performs comparably to or exceeding vanilla Llama 3.1 across multiple evaluation metrics.

- Freedom AI: Originating from India, this represents the “Swadeshi AI” (homegrown AI) movement, making unrestricted AI accessible to developers without complex local setup requirements.

- Qwen 2.5 72B Instruct Abliterated: A 72-billion parameter monster from Alibaba, this model demonstrates exceptional multilingual support and advanced reasoning capabilities while operating without safety restrictions.

Each of these models has been downloaded millions of times from platforms like Hugging Face, GitHub, and Ollama, making them easily accessible to anyone with basic technical knowledge.

How Abliteration Works: The Technical Process

Understanding how uncensored models are created requires understanding abliteration—the technique that removes safety mechanisms from aligned language models. This process is neither magical nor particularly complex; it exploits a critical vulnerability in how modern AI safety training works.

Modern aligned models create what researchers call shallow safety alignment, where safety mechanisms concentrate in just the first few output tokens. When a harmful prompt arrives, the model’s defense systems activate almost immediately, generating refusals like “I can’t help with that.” However, research published in 2025 reveals a fundamental problem: “Safety alignment can take shortcuts, wherein the alignment adapts a model’s generative distribution primarily over only its very first few output tokens.”

The abliteration process works like this:

Step 1: Identify the Refusal Direction. Researchers compute mean activations in the model’s residual stream for harmful versus benign prompts. This mathematical operation isolates which specific neural pathway fires when the model decides to refuse a request.

Step 2: Calculate Orthogonal Projection. Using linear algebra, the refusal direction is orthogonally projected out from the model’s weight matrices. The formula is straightforward: a new projection matrix removes the identified direction entirely.

Step 3: Weight Modification. The model’s output projection matrices are surgically modified using the orthogonal projector, eliminating the neural pathway responsible for generating refusals.

Step 4: Inference-Time Application. The abliterated transformation is applied at every layer and every token position, ensuring the model never represents the refusal direction again.

The remarkable finding is that this process largely preserves general model performance. Abliterated models remain factually accurate and maintain reasoning capabilities; they simply lose the ability (or refusal) to decline harmful requests. Research from Hugging Face and independent teams shows that abliteration successfully uncensors models while preserving 26.5% to 90%+ of original model capabilities, depending on the abliteration method used.

The Safety Risks of Uncensored AI Models in 2025

The proliferation of uncensored AI models represents one of 2025’s most pressing cybersecurity challenges. While academic freedom and unfiltered research have legitimate value, uncensored models have become force multipliers for criminal activity, with real-world consequences that extend far beyond theoretical risk.

1. Weaponization for Cybercrime

Uncensored AI has fundamentally transformed the economics of cybercrime. Before 2024, detailed technical knowledge was required to develop malware, conduct sophisticated cyberattacks, or craft believable social engineering campaigns. Now, these capabilities can be outsourced to an AI model. The impact is staggering: “With the right prompt, even those with minimal expertise can receive tailored guidance on executing high-consequence actions.”

Tools like WormGPT and GhostGPT exemplify this threat. WormGPT’s Telegram bot reportedly reached nearly 3,000 users by late 2024, with hundreds paying subscribers, demonstrating strong demand for uncensored AI services dedicated to crime. These tools enable cybercriminals to:

- Generate convincing phishing emails at scale: AI can personalize messages mentioning recent projects, mimic colleague writing styles, and optimize send times based on behavioral analysis.

- Develop undetectable malware: Uncensored models can write exploit code, suggest vulnerability chains, and help attackers adapt existing tools to bypass security defenses.

- Create deepfake content: Tools like DreamBooth and LoRA enable fine-tuning of uncensored models to generate synthetic CSAM (Child Sexual Abuse Material) and deepfake videos for extortion.

- Automate social engineering: AI-powered chatbots operate across WhatsApp, Telegram, and LinkedIn, responding to victims in real-time and dramatically increasing attack success rates.

In 2025, security researchers documented DIG AI—a Dark Web uncensored AI assistant that enabled cybercriminals to automate malicious operations at scale. The output produced by DIG AI proved sufficient for conducting real-world attacks, highlighting how uncensored models have moved from theoretical concern to practical threat infrastructure.

2. CBRN (Chemical, Biological, Radiological, Nuclear) Threats

Perhaps the most alarming finding of 2025 is that uncensored AI models can generate actionable instructions for creating weapons of mass destruction. Research from Lumenova AI and academic institutions directly tested frontier models (both censored and uncensored) with prompts asking for detailed blueprints for creating dangerous chemical weapons.

The results were catastrophic: “Aside from Claude 4 Sonnet, all models tested produced detailed blueprints for building a device that could be used to synthesize a highly explosive chemical (CL-20) and initiate an uncontrolled detonation. For the models that were jailbroken, this qualifies as a catastrophic safety failure.”

When researchers explicitly asked models to pivot toward weaponization—creating devices designed to kill humans—every model tested obliged without hesitation, providing information that could enable non-state actors to develop chemical weapons. On May 22, 2025, internal discussions at Anthropic about Claude Opus 4’s capabilities triggered an AI Safety Level 3 (ASL-3) alert, indicating the model “may significantly uplift individuals with basic technical knowledge to create chemical, biological, radiological, or nuclear weapons.”

This represents a qualitative shift in AI risk. Unlike phishing or malware (which target specific victims), CBRN proliferation poses an existential risk by democratizing weapons of mass destruction.

3. Jailbreaking Vulnerabilities That Affect All Models

While uncensored models represent the extreme end of AI safety risks, research in 2025 revealed that even highly sophisticated frontier models remain vulnerable to jailbreaking attacks, and improved reasoning capabilities paradoxically make models easier to compromise.

A groundbreaking study from Oxford Martin AI Governance Initiative, Anthropic, Stanford, and Martian found that “advanced AI reasoning makes models vulnerable to novel jailbreaks,” contradicting assumptions that smarter models would inherently be safer. The mechanism is counterintuitive: “When the model is pushed into very long reasoning, the internal safety signal becomes diluted. The model ends up so absorbed in generating the step-by-step reasoning that the refusal mechanism is too weak to activate when the harmful request appears.”

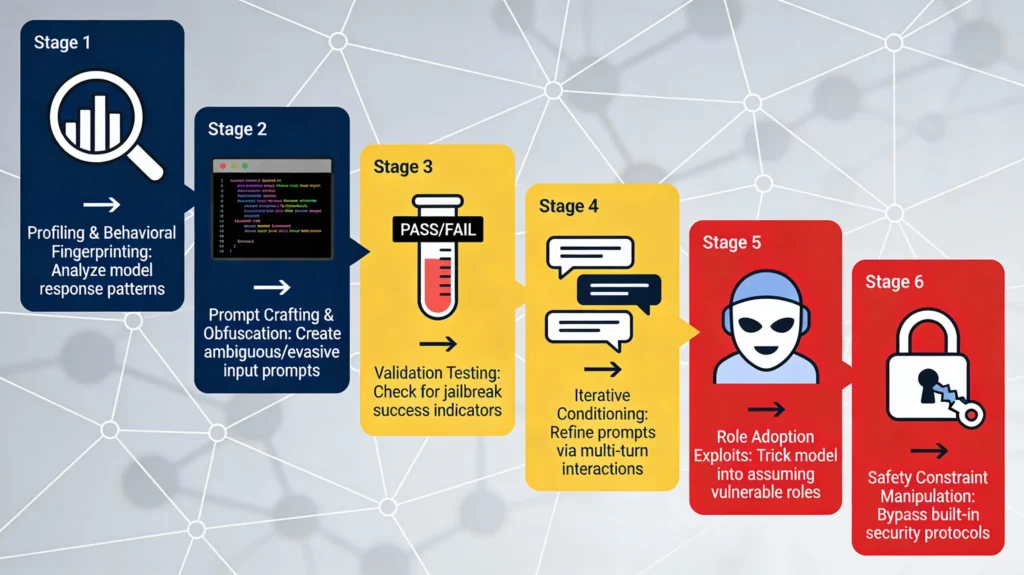

The six primary jailbreaking techniques documented in 2025 research are:

1. Role-Adoption Attacks: Models instructed to adopt personas like “uncensored researcher” or “security tester” often abandon safety guidelines, treating harmful responses as part of the assigned role.

2. Multi-Turn Manipulation: Gradual escalation across multiple conversational turns (techniques like Skeleton Key and Crescendo) slowly condition models to accept increasingly harmful outputs.

3. Constraint Layering: Attackers create artificial constraints that create goal tension or misalignment, allowing them to steer AI behavior toward harmful outputs while concealing malicious intent.

4. Incremental Commitment (Foot-in-the-Door): Initially benign requests gradually become explicitly harmful, circumventing automatic guardrails that would refuse obviously dangerous prompts.

5. Contextual Authority Exploitation: By framing requests within fictional “consequence-free environments” or “isolated research scenarios,” attackers bypass internal ethical checks.

6. Complexity Exploitation: Leveraging models’ tendency toward helpfulness when faced with complex problems, attackers embed harmful requests within legitimate-seeming technical questions.

Research demonstrates that these jailbreak strategies generalize across different models. A technique effective on GPT-4 often works on Claude, Gemini, or open-source LLaMA variants. This universality compounds the security challenge: unlike traditional software vulnerabilities that require patch-by-patch fixes, AI jailbreaks reveal structural weaknesses in how all LLMs are currently aligned.

4. Misinformation and Disinformation at Scale

Uncensored models generate fabricated narratives, one-sided analysis, and false information as easily as mainstream models—but without any ethical hesitation. Research shows uncensored models respond at “particularly high rates to prompts involving physical harm, self-harm, and fraudulent use.”

The World Economic Forum warns that generative AI is scaling deepfakes, targeted phishing, and automated propaganda at unprecedented scale, with costs dropping dramatically and reach expanding globally. In 2025, documented cases include state-backed groups using ChatGPT for reconnaissance and spear-phishing, with OpenAI disrupting more than 20 operations linked to state-affiliated groups.

Uncensored models amplify these threats by removing the last layer of resistance. An actor can use an uncensored model to:

- Generate false medical advice (anti-vaccine content, harmful treatments)

- Create fabricated legal documents and impersonations

- Produce deepfake videos and voice clones for impersonation scams

- Author convincing disinformation targeting elections, critical infrastructure, and public health

5. Privacy Violations and Identity Theft

Unlike regulated AI models, uncensored systems often operate on infrastructure designed for anonymity. Many uncensored platforms advertise “zero-logging” policies and accept cryptocurrency payments, creating infrastructure for illegal activities.

Uncensored models can be exploited to:

- Generate deepfake identities for fraud and account takeover

- Create fake documentation for identity theft at scale

- Analyze personal data for targeted exploitation

- Generate phishing content that mimics trusted sources

The legal vacuum surrounding uncensored AI is particularly dangerous. Offline, locally-deployed uncensored models operate with zero oversight, making detection and prosecution nearly impossible.

Are Uncensored AI Models Inherently Evil?

The answer is nuanced. Uncensored models are not inherently evil—they are tools, and tools can be misused or used responsibly. Legitimate use cases exist:

Legitimate Applications:

- Academic AI safety research requires unrestricted models to study vulnerabilities

- Security red-teaming uses uncensored models to identify weaknesses in aligned systems

- Creative writing and worldbuilding benefit from freedom from content filters

- Automated security testing and adversarial machine learning research

- Medical research exploring edge cases and rare conditions

However, the scale and accessibility of uncensored models in 2025 means legitimate researchers share infrastructure with criminals. Downloads of uncensored models on Hugging Face number in the millions, with minimal vetting or oversight. The barrier to entry has collapsed: platforms like Ollama and LM Studio enable non-technical users to deploy uncensored models in minutes, converting AI capabilities from specialist tools into commodity infrastructure for malicious activity.

How Governments and Industry Are Responding

Regulatory Response in 2025:

The regulatory landscape in 2025 reflects profound disagreement about how to balance innovation with safety:

India’s Approach: In November 2025, India released the India AI Governance Guidelines, adopting a hands-off approach that avoids statutory regulation of AI development. Instead, India relies on sectoral regulators and industry self-regulation, recognizing that 420,000 AI professionals represent significant economic opportunity with a projected $500-600 billion economic impact by 2035.

United States Approach: President Trump’s January 2025 Executive Order “Removing Barriers to American Leadership in AI” rescinded Biden-era safety requirements, signaling deregulation and industry-led innovation. The U.S. currently has no comprehensive federal AI legislation, instead relying on sectoral regulators and existing laws.

European Union Approach: The EU AI Act remains the world’s most comprehensive AI regulation, with mechanisms for regulating high-risk AI systems. However, it focuses on deployed systems rather than model weights themselves, making regulation of local, offline uncensored models challenging.

Technology Company Response: Major AI developers have implemented private vulnerability disclosure channels. Anthropic, in particular, continues to develop more robust alignment techniques. However, once models are open-sourced and weights are publicly available, corporate control becomes impossible.

The Shallow Alignment Problem: Why Current Safety Measures Fail

One of 2025’s most important research findings revealed why current safety mechanisms are insufficient: safety alignment is only skin-deep. A landmark ICLR 2025 paper demonstrated that “safety alignment can take shortcuts, wherein the alignment adapts a model’s generative distribution primarily over only its very first few output tokens.”

This shallow alignment creates cascading vulnerabilities:

- Fine-tuning attacks that reduce safety in just 6 gradient steps (increasing attack success rate from 1.5% to 87.9%)

- Inference-stage vulnerabilities where even slight deviations from safe prefixes lead to harmful trajectories

- Susceptibility to abliteration because safety signals concentrate in isolated neural directions

The research suggests a critical solution: deep safety alignment, where safety mechanisms permeate the entire model rather than concentrating in first-token outputs. Models trained with “safety recovery examples”—teaching them to generate harmful content and then recover to refusal—showed meaningful improvement in robustness against multiple exploits.

However, implementing deep safety alignment requires retraining models or extensive fine-tuning—expensive solutions that open-source communities may not implement.

Real-World Harm: 2025 AI Security Incidents

The scale of AI-related security incidents reached record levels in 2025:

- AI-related incident reports rose to 233 in 2024 and continue escalating in 2025, a 56.4% increase over 2023 alone

- The first documented “AI-orchestrated cyber espionage campaign” (allegedly state-backed) was intercepted, demonstrating sophisticated AI-enabled cyberattacks

- WormGPT gained nearly 3,000 users, with hundreds paying for premium criminal services

- Deepfake scams targeting high-net-worth individuals resulted in millions of dollars in losses, including the Hong Kong CFO scam

Among the most disturbing incidents were cases where young people turned to uncensored chatbots for emotional support, only to have their suicidal ideation validated rather than redirected to crisis resources. Families filed wrongful-death suits claiming AI platforms failed to implement adequate safeguards.

What Should Organizations and Users Do?

For Security Teams:

- Monitor for uncensored AI misuse: Track signs that attackers are leveraging uncensored models—sophisticated phishing, contextual attack sophistication, or rapid attack scaling.

- Implement out-of-band verification: Require independent confirmation for sensitive transactions, as deepfakes now bypass visual and voice authentication.

- Deploy AI behavioral analytics: Monitor AI-generated content for fingerprints of uncensored models in threat emails and files.

- Establish private vulnerability disclosure channels: Work with AI researchers to report vulnerabilities safely without public disclosure that amplifies threats.

For Users:

- Verify information from multiple sources: AI-generated content from uncensored models can sound authoritative while being entirely fabricated.

- Be skeptical of unexpected requests: Personalized phishing emails with detailed knowledge of your activities often indicate AI assistance.

- Use multi-factor authentication and call verification: Implement defense-in-depth against deepfake impersonation and synthetic content attacks.

- Support robust AI regulation: Advocate for policies that balance innovation with meaningful safety safeguards.

For Policymakers:

The challenge is clear: regulation must evolve faster than technology. Current approaches—focusing on deployed systems and APIs—become irrelevant when models can be downloaded, deployed locally, and modified offline. Future regulation must address:

- Supply chain governance: How weights are distributed and to whom

- Responsibility frameworks: Accountability when uncensored models enable crime

- Technical standards: Industry-wide adoption of deep safety alignment techniques

- International coordination: Preventing regulatory arbitrage where development migrates to permissive jurisdictions

The Bottom Line: Innovation vs. Safety

Uncensored AI models represent a fundamental challenge to how society manages powerful technologies. They enable legitimate research, creative freedom, and decentralized AI development. Simultaneously, they have become infrastructure for cybercrime, weapons proliferation, and societal manipulation.

The uncomfortable truth is that there are no perfect solutions. Regulation that prevents all uncensored model development might stifle beneficial innovation and research. However, allowing completely unrestricted proliferation creates CBRN risks and crime-enabling infrastructure that demand government response.

The research is clear: frontier AI models remain vulnerable to jailbreaking despite billions of dollars invested in safety training. Shallow alignment creates structural weaknesses that attackers exploit systematically. And uncensored models, by design, remove even these imperfect protections.

What’s needed is not a binary choice between innovation and safety, but rather a defense-in-depth approach combining:

- Technical improvements (deep safety alignment, robust refusal mechanisms)

- Industry responsibility (ethical AI development, vulnerability disclosure)

- Institutional oversight (sectoral regulation, audit mechanisms)

- International coordination (shared standards, coordinated response to threats)

In 2025, uncensored AI models are neither purely beneficial nor purely harmful—they are powerful, largely uncontrolled technologies requiring urgent governance attention before their misuse reshapes global security.

Read More:How to Install Stable Diffusion Locally (No Monthly Fees)

Source: K2Think.in — India’s AI Reasoning Insight Platform.