The landscape of artificial intelligence reasoning has fundamentally transformed in 2025, and at the center of this revolution stands K2-Think—a groundbreaking 32-billion-parameter model from Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) and G42 that’s rewriting the rules of efficient AI reasoning. What makes K2-Think remarkable isn’t just its compact size, but how it achieves performance matching or surpassing models with over 120 billion parameters through strategic prompt engineering and advanced inference techniques. This achievement demonstrates a critical truth: in modern AI systems, how you ask questions matters as much as the model’s raw power.

For developers, researchers, and AI practitioners working with K2-Think, understanding effective prompt engineering techniques isn’t optional—it’s essential for unlocking the model’s full potential. The model excels in mathematical reasoning with state-of-the-art scores on AIME 2024 (90.83%), AIME 2025 (81.24%), and HMMT 2025 (73.75%), while maintaining strong performance in code generation and scientific reasoning. These results stem from six technical pillars including long chain-of-thought supervised fine-tuning, reinforcement learning with verifiable rewards, and a novel “Plan-Before-You-Think” approach to test-time computation.

Understanding K2-Think’s Architecture and Capabilities

K2-Think represents a paradigm shift from the “bigger is better” mentality that dominated AI development for years. Built on the Qwen2.5 base model, it demonstrates that smaller, strategically trained models can compete with frontier systems through intelligent post-training recipes and test-time computation enhancements. The model runs at an impressive 2,000 tokens per second on Cerebras Wafer-Scale Engine hardware, making it not only accurate but also remarkably fast for real-world deployment.

The system’s development followed three distinct phases. Phase one involved supervised fine-tuning on curated long chain-of-thought reasoning traces, using the AM-Thinking-v1-Distilled dataset comprising mathematical reasoning, code generation, scientific reasoning, and instruction-following tasks. Performance improved rapidly within the first third of training, particularly on mathematics benchmarks, before plateauing—providing valuable signals for the next optimization stage.

Phase two introduced reinforcement learning with verifiable rewards (RLVR) across six domains: mathematics, code, science, logic, simulation, and tabular tasks. This approach uses deterministic, rule-based verification—checking whether answers are mathematically correct or code passes unit tests—rather than subjective human judgments. RLVR proved essential because it allows models to learn from exploration without ambiguous feedback, directly comparing solutions to ground truth and incentivizing correct reasoning paths.

Phase three implemented test-time computation strategies including agentic planning and Best-of-N sampling. Unlike traditional models that apply the same computational effort regardless of question complexity, K2-Think adaptively allocates resources during inference, spending more “think time” on difficult problems—analogous to a student carefully working through a challenging exam question rather than rushing to answer.

Read More: K2-Think Limitations Exposed: Shocking Flaws in UAE’s AI Model Revealed

Others:K2-Think AI: Ultimate Guide to Access & Use on HuggingFace and API (2025)

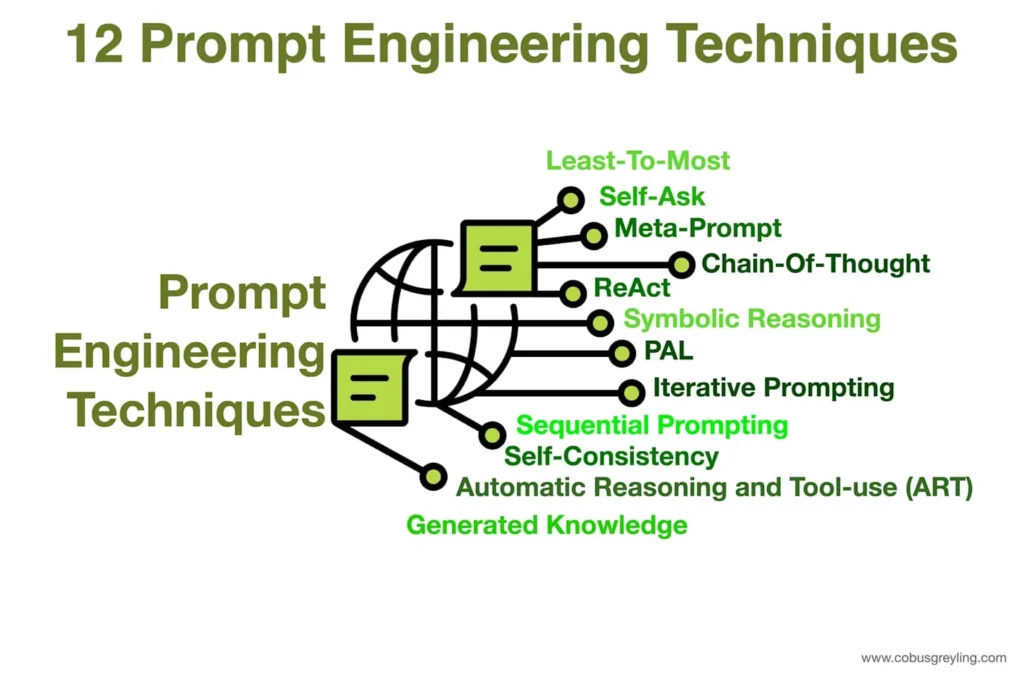

Core Prompt Engineering Techniques That Work with K2-Think

Zero-Shot Chain-of-Thought Prompting

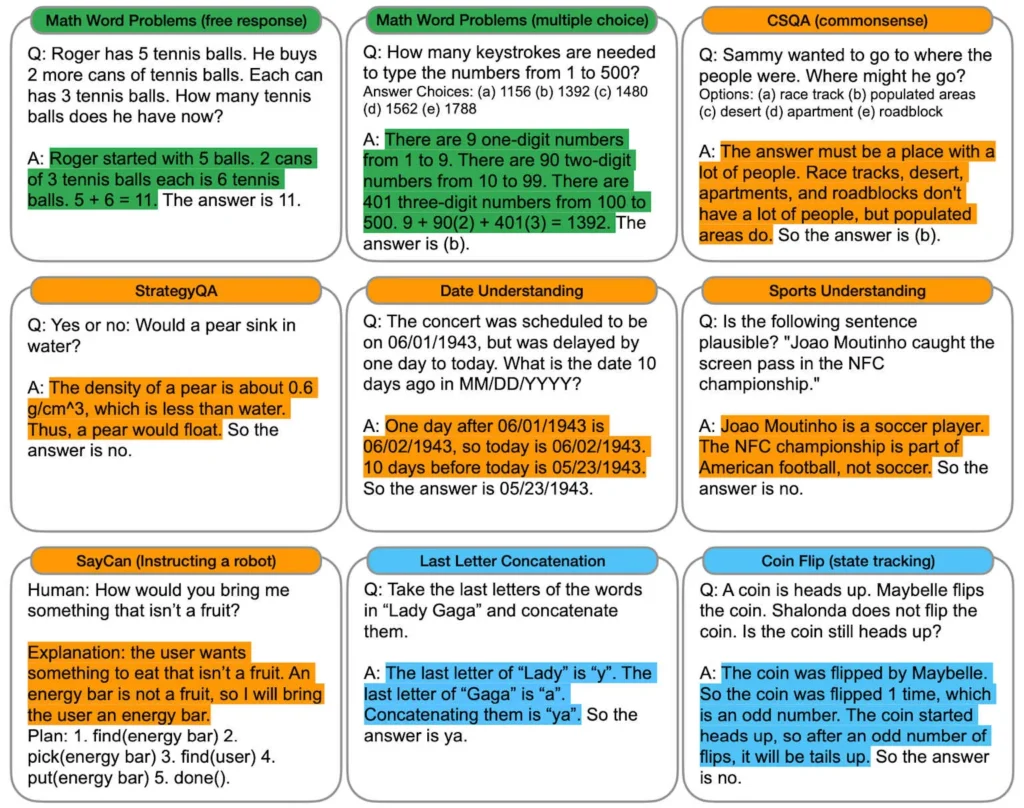

The simplest yet most powerful technique for K2-Think involves adding explicit reasoning instructions to your prompts. By appending phrases like “Let’s think step by step” or “Describe your reasoning step by step,” you trigger the model’s chain-of-thought capabilities without providing any examples.

Research from Google DeepMind’s seminal 2022 paper demonstrated that CoT prompting significantly outperformed standard techniques on arithmetic, commonsense, and symbolic reasoning benchmarks. For K2-Think, this approach is particularly effective because the model was specifically trained on long chain-of-thought sequences during its supervised fine-tuning phase.

Real-World Example:

Standard prompt: “If all roses are flowers, and some flowers fade quickly, can we conclude that some roses fade quickly?”

Chain-of-Thought prompt: “If all roses are flowers, and some flowers fade quickly, can we conclude that some roses fade quickly? Explain your reasoning step by step.”

The CoT version guides K2-Think to articulate its logical process: identifying the premises, recognizing the logical structure, and explaining why the conclusion doesn’t necessarily follow (because “some flowers” doesn’t specify which flowers, so we can’t definitively conclude about roses).

For mathematical problems, this technique becomes even more powerful. When presented with “John has one pizza cut into eight equal slices. John eats three slices, and his friend eats two slices. How many slices are left? Explain your reasoning step by step,” K2-Think breaks down the calculation: started with 8 slices, subtract 3 eaten by John (leaving 5), subtract 2 eaten by friend (leaving 3), final answer is 3 slices.

Few-Shot Prompting for Structured Outputs

Few-shot prompting involves providing 2-5 carefully chosen examples within your prompt to demonstrate the desired input-output pattern. This technique proves essential when you need K2-Think to produce outputs in specific formats that are difficult to describe purely through instructions.

The structure of your examples matters tremendously. Organize them consistently—for instance, using an input: classification format ensures the model generates single-word responses rather than complete sentences. Research shows that diverse examples covering different aspects of your task help the model generalize better to new inputs, avoiding overfitting to similar patterns.

Real-World Example for Sentiment Classification:

textReview: "Great product! Exceeded expectations." → Positive

Review: "Terrible experience, very disappointed." → Negative

Review: "It works okay, nothing special." → Neutral

Review: "Absolutely love it, will buy again!" → Positive

Review: "It doesn't work!" → ?

By showing K2-Think these four examples with clear input-output mappings, the model recognizes the classification pattern and correctly categorizes the final review as “Negative”. The key is selecting representative examples that span the range of cases you’ll encounter—here including strongly positive, strongly negative, neutral, and enthusiastically positive reviews.

For code generation tasks, few-shot prompting accelerates development significantly. Show K2-Think how to calculate squares in Python with one example, and it can then figure out how to compute cubes, adapting the pattern to similar programming challenges. This capability makes K2-Think invaluable for software developers solving related problems quickly without extensive prompt engineering for each variation.

Role Prompting for Domain-Specific Expertise

Role prompting assigns a specific persona or professional identity to K2-Think, guiding the model to draw on relevant knowledge and communication styles associated with that role. This technique proves particularly effective for domain-specific tasks where specialized expertise influences both the content and tone of responses.

Research demonstrates that non-intimate interpersonal roles (school, social, workplace settings) yield better results than purely occupational roles, and gender-neutral terms generally lead to superior performance. A two-stage approach works best: first assign the role with specific details, then present your question or task.

Real-World Examples:

Technical Analysis:

“You are a senior software architect with 15 years of experience in distributed systems. Review this microservices architecture and identify potential scalability bottlenecks. Consider database connection pooling, API rate limiting, and inter-service communication patterns.”

This role prompt activates K2-Think’s knowledge about enterprise architecture patterns, system design principles, and performance optimization strategies, resulting in responses that consider real-world production constraints rather than theoretical ideals.

Financial Planning:

“You are a certified financial planner. Explain portfolio diversification to a 25-year-old novice investor who wants to start investing. Use simple language and provide actionable steps.”

By assigning the financial planner role, K2-Think adopts an educational tone, breaks down complex concepts, and tailors explanations to the specified audience level. The model balances technical accuracy with accessibility, avoiding jargon while maintaining credibility.

Customer Service:

“You are a customer service agent for a luxury hotel chain, recognized for exceptional service and problem-solving. Respond empathetically to a guest complaint about room temperature issues, offering immediate solutions and follow-up actions.”

This role prompt guides K2-Think to balance professionalism with empathy, acknowledge the guest’s concerns, and provide concrete resolution steps—mirroring how experienced hospitality professionals handle complaints.

Best practices for role prompting include adding specific experience details (“15 years in digital marketing”), incorporating relevant personality traits (“enthusiastic coach who inspires through positive reinforcement”), and establishing clear context about the setting or constraints.

Plan-Before-You-Think: K2-Think’s Innovative Approach

The “Plan-Before-You-Think” technique represents one of K2-Think’s most original contributions to prompt engineering. This approach implements a planning agent that analyzes the input query, extracts key concepts, and creates a high-level plan before the reasoning model begins working on the problem.

This deliberation phase has roots in cognitive science research on human problem-solving, where planning is considered a meta-thinking process that develops structure to guide one’s thoughts. For K2-Think, this manifests as restructuring the prompt to outline relevant concepts and strategic approach before diving into detailed reasoning.

How It Works:

- The planning agent receives the original query

- It identifies core concepts and requirements

- It generates a structured plan highlighting key steps

- The augmented prompt (original query + plan) feeds into K2-Think

- The model generates its response guided by the plan

Real-World Impact:

Research shows that Plan-Before-You-Think improves performance by 4-6 percentage points across mathematics, code, and science benchmarks when combined with Best-of-N sampling. Remarkably, this approach also reduces response lengths by up to 12% compared to the base model—meaning better answers with fewer tokens, improving both quality and efficiency.

For AIME 2024 benchmark, scores improved from 86.26% (supervised fine-tuning + reinforcement learning alone) to 90.83% when Plan-Before-You-Think was added with Best-of-3 sampling. Similar gains appeared across AIME 2025 and HMMT 2025 benchmarks, validating the approach’s effectiveness on competition-level mathematical reasoning.

The K2-Think team experimented extensively with this technique, testing over 30 different system prompts using few-shot learning, role-playing, and situational prompting. However, they found negligible gains from these variations, ultimately using simpler planning instructions with temperature set to 1.0. This finding suggests that for the planning phase, straightforward concept extraction and high-level structuring works better than elaborate prompt engineering.

Best-of-N Sampling for Critical Tasks

Best-of-N (BoN) sampling generates multiple candidate responses and selects the highest-quality answer through pairwise comparison. This test-time scaling technique trades computational resources for improved accuracy, making it ideal for high-stakes applications where correctness matters more than speed.

For K2-Think, implementing BoN sampling involves generating N responses (typically 3-16 depending on computational budget), then using comparison mechanisms to identify the best answer. Research demonstrates that this approach yields consistent performance improvements across benchmarks, with the gains amplifying when combined with Plan-Before-You-Think.

Performance Impact:

On AIME 2024, K2-Think’s score jumped from 86.26% without test-time computation to 90.83% with Plan-Before-You-Think and Best-of-3 sampling—a gain of 4.57 percentage points. For AIME 2025, the improvement was similarly substantial, rising from approximately 77% to 81.24%.

The technique works because it allows the model to explore multiple solution paths and self-correct. In mathematical reasoning, one approach might get stuck in an incorrect logical branch, while another successfully navigates to the right answer. By generating several attempts, you increase the probability that at least one reaches the correct solution.

Implementation Considerations:

The computational cost scales linearly with N—generating 5 responses takes roughly 5 times as long as generating one. However, K2-Think’s exceptional inference speed (2,000 tokens/second on Cerebras hardware) makes this practical for many applications. A 32,000-token response that would take 160 seconds on typical H100/H200 GPU setups completes in just 16 seconds on Cerebras, making multi-sample generation feasible for interactive use.

Temperature tuning surprisingly showed minimal impact in K2-Think’s experiments. Testing temperatures from 0.1 to 1.0 revealed negligible overall improvement, leading the team to standardize on temperature 1.0 for all production runs. This finding simplifies implementation—you don’t need to fine-tune temperature parameters for different problem types.

Auto Chain-of-Thought for Diverse Problem Sets

Auto Chain-of-Thought (Auto-CoT) automates the creation of diverse reasoning demonstrations. Instead of manually crafting examples for few-shot prompting, this technique clusters questions from your dataset, selects representative examples from each cluster, and generates reasoning chains using zero-shot CoT.

The two-step process ensures diversity—a critical factor for few-shot prompting effectiveness. By clustering questions before sampling, Auto-CoT prevents selecting overly similar examples that could lead to overfitting. Each cluster represents a distinct problem type, ensuring the model encounters varied reasoning patterns during demonstration.

Step 1: Question Clustering

Questions from your dataset divide into clusters based on similarity. This might group algebraic word problems together, geometry proofs in another cluster, and combinatorics puzzles in a third. The clustering mitigates the risk of providing examples that are too homogeneous.

Step 2: Demonstration Sampling

From each cluster, Auto-CoT selects one question and generates a reasoning chain using a zero-shot-CoT prompt like “Let’s think step by step”. These generated demonstrations become your few-shot examples, creating a diverse set of reasoning patterns without manual curation.

This approach proves especially valuable when working with K2-Think on varied problem sets where manually designing examples for every question type becomes impractical. The automated nature means you can quickly adapt to new domains or problem categories without extensive prompt engineering effort.

Advanced Techniques for Production Deployments

Reinforcement Learning with Verifiable Rewards

Understanding how K2-Think was trained reveals important insights for prompt engineering. The model’s reinforcement learning phase used verifiable rewards—objective, binary signals (1 for correct, 0 for incorrect) based on programmatic verification rather than human judgment.

For mathematical problems, this means checking whether the answer matches the ground truth. For code generation, it involves running the code against test cases and verifying all tests pass. This approach eliminates ambiguity and prevents “reward hacking” where models exploit flaws in subjective reward systems.

Key Principles That Inform Prompting:

When crafting prompts for K2-Think, structure tasks to have clear correctness criteria where possible. The model was trained to pursue verifiable objectives, so prompts that specify measurable success conditions typically perform better. For instance, “Calculate the area of a circle with radius 5cm, showing your work and providing the numerical answer” gives K2-Think a clear target compared to “Tell me about circles.”

Research shows that reinforcement learning from verifiable rewards can extend reasoning boundaries beyond what supervised fine-tuning alone achieves. K2-Think’s training dynamics incentivized correct reasoning early in the process, with substantial improvements in reasoning quality confirmed through extensive evaluations. Understanding this helps explain why the model excels at step-by-step logical problems where each step can be verified against mathematical or logical rules.

Test-Time Compute Strategies

K2-Think embodies the shift from static, one-size-fits-all inference to adaptive, complexity-aware processing. Test-time compute refers to the computational effort expended during inference, with modern systems dynamically allocating resources based on question difficulty.

This mirrors human problem-solving behavior—spending more time on challenging problems and less on simple ones. Research demonstrates that when models use additional inference steps on complex tasks, performance improves significantly. OpenAI’s o1 series demonstrated material improvement across benchmarks by using reinforcement learning to tune for sophisticated reasoning capabilities, allowing models to think and review before answering.

Implications for Prompt Engineering:

For routine queries, keep prompts straightforward and let K2-Think respond quickly. For complex, high-stakes problems, explicitly encourage thorough reasoning: “This is a difficult problem requiring careful analysis. Work through this step-by-step, checking each step before proceeding to the next.”

The model’s architecture supports variable inference depth, meaning it can naturally adapt its processing based on task complexity when prompted appropriately. This adaptive capability is particularly important for applications like virtual assistants or analytical systems that encounter questions ranging from trivial to highly complex.

Temperature 1.0 has become the recommended default for K2-Think’s reasoning mode. While lower temperatures (0.1-0.3) might seem intuitive for precise tasks, K2-Think’s training and architecture perform optimally with higher temperature during reasoning, allowing exploration of solution spaces while the underlying reasoning structure maintains logical coherence.

Domain-Specific Applications and Examples

Mathematical Reasoning

K2-Think achieves exceptional performance on mathematical benchmarks, making it ideal for educational applications, mathematical research, and automated problem-solving. The model scored 90.83% on AIME 2024, 81.24% on AIME 2025, and 73.75% on HMMT 2025—competition-level mathematics that challenges even talented human students.

Effective Prompt Structure:

“Solve the following problem step-by-step, showing all work:

A laptop costs $850. There’s a 20% discount today, then 8% sales tax applies to the discounted price. What’s the final amount I pay?

First, calculate the discount amount. Then, find the discounted price. Finally, calculate the tax and add it to get the final price.”

This structured prompt guides K2-Think through logical stages while allowing its reasoning capabilities to handle the calculations. The model typically responds by explicitly working through each step: discount amount = $850 × 0.20 = $170, discounted price = $850 – $170 = $680, tax = $680 × 0.08 = $54.40, final price = $680 + $54.40 = $734.40.

Code Generation and Debugging

K2-Think scored 63.97% on LiveCodeBench v5, significantly outperforming similarly-sized models and demonstrating strong competitive programming capabilities. The model excels at algorithmic tasks, code optimization, and bug identification when prompted effectively.

Effective Prompt Structure:

“You are an experienced software engineer. Review this Python function and identify performance bottlenecks:

[Insert code block]

Analyze the algorithm’s time complexity, identify inefficient operations, and suggest specific optimizations with code examples.”

For debugging tasks, chain-of-thought prompting proves particularly valuable: “This code produces incorrect output. Debug it step-by-step: First, trace the execution with example inputs. Then, identify where the logic fails. Finally, suggest the corrected code with explanation.”

Scientific Reasoning

K2-Think achieved 71.08% on GPQA-Diamond, demonstrating versatility beyond pure mathematics into scientific domains. This capability supports research analysis, hypothesis evaluation, and scientific literature comprehension.

Effective Prompt Structure:

“As a research scientist, analyze this experimental setup:

[Describe experiment]

Evaluate the methodology’s strengths and limitations. Consider potential confounding variables, sample size adequacy, and statistical power. Suggest improvements to increase validity.”

The role prompting combined with specific analytical dimensions guides K2-Think to provide structured, comprehensive scientific critique rather than surface-level observations.

Best Practices and Common Pitfalls

Structure Your Prompts Clearly

Use delimiters like dashes or quotation marks to separate different prompt components. Number your steps for complex tasks, and explicitly state desired output format. Clear structure helps K2-Think parse your intent accurately and organize its response accordingly.

Do this: “Task: Summarize this article in three bullet points. Article: [text]. Format: – Bullet 1\n- Bullet 2\n- Bullet 3”

Not this: “Can you maybe summarize this article I’m including here in some kind of list format [text]”

Use Action-Oriented Instructions

Start prompts with strong action verbs: Generate, Summarize, Analyze, Calculate, Translate. Avoid weak openings like “Can you…” or “I need…” which dilute the directive’s clarity. Treat prompts as function calls—direct, specific, purposeful.

Avoid Jargon When Possible

Simple, accessible language typically produces better results than technical jargon. Write prompts as if explaining to someone intelligent but unfamiliar with your specific domain. K2-Think’s training covered diverse domains, but clarity ensures consistent performance across contexts.

Set Clear Goals and Success Criteria

Specific objectives yield better responses than vague requests. Instead of “Write about customer retention,” specify “Analyze three evidence-based strategies to improve customer retention rates in SaaS companies, citing research where available.”

Quote Sources When Accuracy Matters

Directing K2-Think to cite its reasoning or reference specific parts of provided context reduces hallucination risk. For fact-based tasks, explicitly request: “Base your analysis only on the provided data. If information is missing, state that clearly rather than making assumptions.”

Iterate and Refine

Prompt engineering is iterative. Test your prompts, analyze outputs, and refine based on results. What works for one problem type may need adjustment for others. K2-Think’s open-source nature facilitates experimentation—you can test variations quickly and measure performance objectively.

Common Pitfalls to Avoid

Overcomplicating prompts: More words don’t mean better results. Concise, clear prompts often outperform verbose, over-detailed ones.

Mixing multiple unrelated tasks: Break complex requests into sequential prompts rather than cramming everything into one.

Neglecting temperature settings: While K2-Think recommends temperature 1.0 for reasoning tasks, verify this works for your specific use case.

Ignoring model strengths: K2-Think excels at mathematical reasoning, code generation, and structured problem-solving. Prompts that leverage these strengths perform better than those requiring capabilities outside the model’s training focus.

Measuring Success and Optimization

Establishing Baselines

Before optimizing prompts, establish baseline performance. Run your initial prompt multiple times (at least 5-10 iterations) and measure: accuracy, response time, token usage, and response quality consistency. This baseline provides objective comparison points for evaluating improvements.

A/B Testing Prompt Variations

Systematically test prompt variations against your baseline. Change one element at a time—wording, structure, examples, or instructions—to isolate what drives performance changes. Document results quantitatively where possible, especially for tasks with verifiable outputs.

Leveraging K2-Think’s Speed Advantage

K2-Think’s 2,000 tokens/second throughput on Cerebras hardware makes extensive testing practical. What would take hours on standard GPU infrastructure completes in minutes, enabling rapid iteration and comprehensive prompt testing. This speed advantage particularly benefits production deployments where quick experimentation accelerates optimization cycles.

Conclusion: The Future of Prompt Engineering with K2-Think

K2-Think demonstrates that the future of AI reasoning lies not in ever-larger models, but in smarter training, strategic prompt engineering, and adaptive inference. At just 32 billion parameters, it achieves results that rival systems 20 times its size, proving that efficiency and intelligence can coexist.

The techniques covered here—chain-of-thought prompting, few-shot learning, role assignment, Plan-Before-You-Think, Best-of-N sampling, and Auto-CoT—represent battle-tested approaches that leverage K2-Think’s unique architecture. These methods aren’t theoretical; they’re the same strategies used to achieve state-of-the-art benchmark results on AIME, HMMT, and GPQA-Diamond.

As AI systems continue evolving, prompt engineering will remain crucial for extracting maximum value from models. K2-Think’s open-source nature—with freely available weights, training code, and complete transparency—creates unprecedented opportunities for practitioners to experiment, understand, and optimize their prompting strategies. The model’s accessibility through platforms like Hugging Face, combined with its exceptional inference speed, lowers barriers to entry for developers, researchers, and organizations seeking world-class reasoning capabilities.

Whether you’re building educational tools, developing code analysis systems, conducting scientific research, or deploying business intelligence applications, effective prompt engineering with K2-Think unlocks capabilities that were recently exclusive to proprietary, resource-intensive systems. The examples and techniques presented here provide a foundation for your own experimentation and optimization, adapted to your specific use cases and requirements.

The shift from “bigger models” to “smarter prompting” represents more than technical innovation—it’s the democratization of advanced AI reasoning. K2-Think proves that with the right architecture, training approach, and prompt engineering, smaller models can compete at the frontier, making sophisticated AI accessible to broader audiences and applications than ever before.

Source: K2Think.in — India’s AI Reasoning Insight Platform.