The uncomfortable truth: ChatGPT is not compliant with GDPR, stores your data indefinitely, and 63% of user information contains personally identifiable details that regulators found unprotected. While OpenAI claims to offer privacy controls, a federal court order forced the company to retain deleted conversations indefinitely, exposing millions of users to data retention practices that contradict their own deletion promises. The good news: three powerful alternatives—Claude, Mistral AI, and PrivateGPT—offer genuine privacy protections without sacrificing functionality.

The ChatGPT Data Problem: What You Need to Know

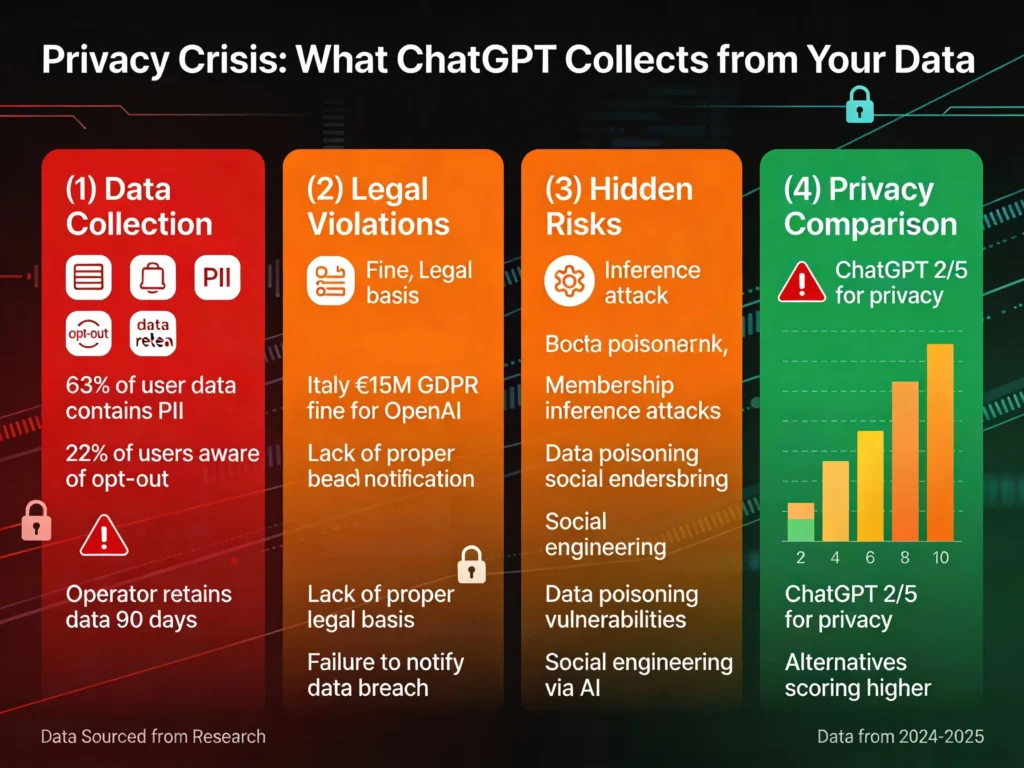

What ChatGPT Actually Collects from You

Every time you type a question into ChatGPT, OpenAI stores far more than just your words. The company collects profile details including your name, email address, phone numbers, and payment information for Plus subscribers. But the scope goes deeper. Technical metadata including IP addresses, browser types, operating systems, and approximate geolocation are automatically logged. The platform also tracks interaction patterns like frequency of use, session durations, feature preferences, subscription tiers, transaction histories, and API usage metrics.

What makes this particularly troubling is the company’s admission that these conversations are stored indefinitely, with no set expiry, unless users manually delete them. Even after deletion, OpenAI’s systems retain the conversations for another 30 days before purging them. A federal court order from May 2025 changed even that. In litigation initiated by The New York Times, Magistrate Judge Ona T. Wang ordered OpenAI to preserve all consumer data indefinitely, meaning conversations you deleted are now legally required to remain on OpenAI’s servers.

ChatGPT’s GDPR Failure: The €15 Million Violation

In December 2024, Italy’s Data Protection Authority (Garante) issued the first major GDPR fine against OpenAI for ChatGPT violations—€15 million—establishing a legal precedent that shattered the myth of OpenAI’s compliance. The violation wasn’t a technical oversight; it was systematic non-compliance with foundational privacy principles.

What OpenAI Did Wrong:

OpenAI trained ChatGPT using European users’ personal data without identifying a proper legal basis under GDPR Article 6. The company failed to provide clear information about how data is collected, stored, or deleted, violating the transparency principle in GDPR Article 5. Perhaps most damning, OpenAI neglected to notify Italian authorities about a data breach that occurred in March 2023, a mandatory reporting requirement under GDPR Article 33. Additionally, the company had no age verification mechanisms in place, so children under 13 could register. The Garante treated this as a failing under GDPR; COPPA, the comparable US children’s privacy law, is not something an Italian regulator enforces.

The ruling didn’t stop at financial penalties. OpenAI is now required to conduct a six-month public education campaign explaining how ChatGPT works and how user data is processed. The company must also implement stricter privacy protections across all products. As of February 2025, ChatGPT remains non-compliant with GDPR due to its indefinite retention of user prompts, which directly conflicts with GDPR’s “storage limitation” principle.

The New Privacy Scandal: The Operator Problem

Released in January 2025, ChatGPT’s “Operator” agent represents a dramatic escalation in data collection. Operator retains deleted screenshots and browsing histories for 90 days, three times longer than the 30 days that apply to standard ChatGPT conversations. This AI agent browses the web on your behalf, capturing visual evidence of your screen, search history, and online behavior for nearly three months.

This extended retention period raises serious legal questions. Users believe they’re using a temporary feature, but their browsing activity is being preserved and potentially flagged for manual review by OpenAI employees—creating a surveillance mechanism that users never explicitly agreed to.
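To make the two retention windows concrete, here is a minimal sketch that computes when a deleted item would become eligible for purging. The 30-day and 90-day figures are the ones quoted in this article; the dictionary keys and the `purge_date` helper are hypothetical names chosen for illustration, not anything OpenAI publishes.

```python
from datetime import date, timedelta

# Retention windows quoted in this article: days an item is kept after deletion.
RETENTION_DAYS = {"chatgpt_chat": 30, "operator_session": 90}


def purge_date(deleted_on: date, kind: str) -> date:
    """Return the earliest date a deleted item would be purged."""
    return deleted_on + timedelta(days=RETENTION_DAYS[kind])


deleted = date(2025, 3, 1)
chat_purge = purge_date(deleted, "chatgpt_chat")          # 2025-03-31
operator_purge = purge_date(deleted, "operator_session")  # 2025-05-30
print(chat_purge, operator_purge)
```

Something deleted on 1 March would leave standard ChatGPT storage at the end of March, while the equivalent Operator data would linger until the end of May.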

What The Data Actually Reveals: Hidden Vulnerabilities

Beyond simple data collection, security researchers have identified three critical ways ChatGPT’s data practices create systemic risks:

Membership Inference Attacks: Attackers can query ChatGPT and, by analyzing its response patterns, determine whether specific sensitive information was used in training. This technique has successfully exposed medical records, financial data, and personal details that were “anonymized” but can be re-identified. Research from NDSS 2025 demonstrates that minority groups experience higher privacy leakage in these attacks, as models tend to memorize more about smaller subgroups.

Data Poisoning Risks: Studies from CMU’s CyLab (2025) showed that manipulating just 0.1% of a model’s training dataset is sufficient to launch effective data poisoning attacks. If ChatGPT’s training data includes compromised or malicious information, the entire model becomes unreliable. Researchers demonstrated that poisoned data can reduce classification accuracy by up to 27% in image recognition tasks.

Shadow AI Breaches: In 2025, 20% of data breaches involved “shadow AI”—employees uploading confidential business data to public ChatGPT without IT approval. These breaches carry a $670K premium in remediation costs compared to standard breaches. Companies believe they’re securing enterprise data while employees bypass security policies to use ChatGPT’s free version.
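The membership inference idea described above can be illustrated with its simplest variant, a loss-threshold test: a model tends to be more confident on records it saw during training, so unusually low loss hints at membership. The sketch below is a toy under strong assumptions. It assumes the attacker can see the probability the model assigns to the correct answer, and every number in it is made up for illustration rather than measured from ChatGPT.

```python
import math


def cross_entropy(prob_of_true_label: float) -> float:
    """Loss for the correct label (lower means the model is more confident)."""
    return -math.log(prob_of_true_label)


def infer_membership(prob_of_true_label: float, threshold: float) -> bool:
    """Guess 'this record was in the training set' when the loss is below the threshold."""
    return cross_entropy(prob_of_true_label) < threshold


# Hypothetical confidences for the true label (illustrative values only).
train_probs = [0.99, 0.97, 0.95, 0.60]   # records that were in the training set
unseen_probs = [0.70, 0.55, 0.96, 0.40]  # records the model never saw

threshold = 0.1  # illustrative; real attacks calibrate this, e.g. with shadow models

guesses_train = [infer_membership(p, threshold) for p in train_probs]
guesses_unseen = [infer_membership(p, threshold) for p in unseen_probs]

true_positive_rate = sum(guesses_train) / len(guesses_train)
false_positive_rate = sum(guesses_unseen) / len(guesses_unseen)
print(true_positive_rate, false_positive_rate)
```

The gap between the two rates is what the attacker exploits: the wider it is, the more the model leaks about who was in its training data.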

The 3 Secure AI Alternatives That Protect Your Privacy

Alternative #1: Claude 3 (Anthropic) — Opt-In Privacy Model

Privacy Model: Anthropic takes a different approach from OpenAI: whether your conversations are used for training is governed by a setting you control. When Anthropic updated its training policy in late 2025, it asked users to make that choice explicitly. Notably, though, the setting is pre-enabled for new users, who must actively disable it, so in practice it behaves more like an opt-out than a true opt-in.

Data Protection:

- Incognito chats are never used for training, even if you’ve enabled model improvement.

- Feedback is de-linked from your user ID before Anthropic uses it for research.

- Conversations are encrypted in transit and at rest using industry-standard protocols.

- Anthropic uses differential privacy techniques during model training to prevent the model from memorizing sensitive personal information or copyrighted content.

Compliance: While not as stringent as Mistral, Claude provides more privacy protection than ChatGPT. The company emphasizes that employee access to conversations is restricted—only designated Trust & Safety team members may review conversations on a need-to-know basis, and this access is strictly audited.

Limitations: Anthropic still processes personal data including your IP address and technical information. The company reserves the right to share information with service providers and corporate partners in the event of business transactions (mergers, bankruptcy). Additionally, data used for training cannot be retracted once processing begins.

Alternative #2: Mistral AI (Le Chat) — European Privacy-First Design

Why Mistral Stands Out:

Mistral AI, headquartered in Paris, was built from inception with GDPR compliance as the foundation—not an afterthought. The company explicitly states: “Your data is not used to train our models” for the Pro version. This isn’t marketing language; it’s enforceable by Data Processing Agreements (DPA) that organizations can sign to ensure contractual compliance.

Technical Privacy Features:

- EU-only data hosting: All data processing occurs exclusively within European Union infrastructure, avoiding the third-country data transfers that GDPR restricts.

- No data training on Pro: Unlike Claude, Mistral’s premium tier has contractually binding commitments not to use your inputs for model improvement.

- Self-hosting capability: For maximum control, organizations can self-host Mistral’s open-source models entirely on their own infrastructure.

- Privacy by design principles: Mistral implements data minimization (collects only essential data), transparency, and built-in access controls.

Regulatory Alignment:

Mistral publishes Data Processing Agreements that satisfy GDPR Article 28 requirements. The company’s infrastructure design prevents vendor lock-in—you can export your data and move to another provider without technical barriers. As of February 2025, Mistral remains the only major AI provider with full GDPR compliance certification for general-purpose AI.

Important caveat: While Mistral emphasizes European privacy, the company does collect and process your IP address to “enhance your experience” based on country-specific data. Users can opt out of this processing via preferences.

Alternative #3: PrivateGPT — The Open-Source Local Solution

For Maximum Privacy, Run AI Locally:

PrivateGPT represents the ultimate privacy control: your conversations never leave your computer. This open-source project allows you to download and run large language models entirely offline on your personal machine using models like Llama 2, Mistral 7B, or GPT-Neo.

How It Works:

PrivateGPT uses a “pluggable architecture” that lets you:

- Chat with your documents (PDFs, Word files, emails, spreadsheets) completely privately

- Switch between different AI models through simple configuration files without touching code

- Run everything on your hardware—no cloud services, no data transmission, no eavesdropping

You can even customize the underlying model backend using Ollama, LlamaCPP, or HuggingFace—giving you complete transparency into which AI model processes your data.

Installation & Setup (2025):

The installation process requires basic technical comfort:

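```bash
git clone https://github.com/zylon-ai/private-gpt.git
cd private-gpt
# Current releases are installed with Poetry; the extras below assume
# the Ollama-backed local setup.
poetry install --extras "ui llms-ollama embeddings-ollama vector-stores-qdrant"
# Start the server with the Ollama profile
PGPT_PROFILES=ollama poetry run python -m private_gpt
```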

Once running, PrivateGPT provides a ChatGPT-like interface but with zero data transmission. Your prompts are processed entirely on your machine using local GPU acceleration (or CPU if needed).

Privacy Guarantee:

There are no logs on external servers. Your data exists only in three places:

- Your initial input (stays on your machine)

- The processing (occurs on your machine)

- The response (generated on your machine)

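GDPR compliance becomes far simpler because no third-party processor is involved and data never leaves your machine. If your documents contain other people’s personal data, you remain the controller and the usual obligations still apply.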

Limitations: PrivateGPT requires sufficient hardware (GPU recommended for speed) and technical setup knowledge. The models, while capable, are smaller than enterprise Claude or GPT-4, so response quality may be lower for complex tasks. However, accuracy improves monthly as open-source models evolve.

Other Privacy-Focused Options: The Honorable Mentions

Proton Lumo: Zero-Access Encryption

Proton’s new AI assistant, Lumo, launched in July 2025 with zero-access encryption and no server-side logs. Your conversations are encrypted on your device before being sent to Proton’s servers using bidirectional asymmetric encryption. The company explicitly states that Lumo will not use your conversations to train its models, and all processing occurs from European datacenters.

However, it’s important to understand the nuance: while stored chats are encrypted, Proton’s GPU servers must decrypt your prompts to process them—so Proton theoretically could be compelled to access decrypted messages if a government demands it. This is still substantially better than ChatGPT’s practice, but it’s not the absolute privacy guarantee of local AI.

DuckDuckGo’s Duck.ai: Anonymous Access

DuckDuckGo’s Duck.ai chatbot offers access to multiple models (GPT-4o mini, Claude 3 Haiku, Llama 3.3, Mistral Small 3) without requiring an account. The service removes your IP address through proxying before sending requests to underlying model providers.

Key Privacy Features:

- No account required

- Conversations stored only on your device (not on DuckDuckGo servers)

- IP anonymization before reaching model providers

- Explicit agreements ensuring model providers delete prompts within 30 days

The tradeoff: you’re still using third-party AI models (OpenAI, Anthropic, etc.), so some data does flow to those providers—but DuckDuckGo acts as a privacy intermediary, removing identifying information.
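The privacy-intermediary pattern can be sketched as a function that forwards the prompt while dropping anything that identifies the sender. This is not DuckDuckGo’s implementation. The header list and the `anonymize_request` helper are assumptions made for illustration. In a real proxy, the model provider also sees the proxy’s IP address rather than yours, which no code on the client side needs to arrange.

```python
# Header names treated as identifying in this sketch (an assumed, incomplete list).
IDENTIFYING_HEADERS = {
    "cookie",
    "authorization",
    "x-forwarded-for",
    "x-real-ip",
    "user-agent",
    "referer",
}


def anonymize_request(headers: dict[str, str], body: str) -> dict:
    """Build the request the proxy forwards: same prompt, no identifying headers."""
    safe_headers = {
        name: value
        for name, value in headers.items()
        if name.lower() not in IDENTIFYING_HEADERS
    }
    return {"headers": safe_headers, "body": body}


incoming = {
    "Content-Type": "application/json",
    "Cookie": "session=abc",
    "X-Forwarded-For": "203.0.113.7",
    "User-Agent": "ExampleBrowser/1.0",
}
forwarded = anonymize_request(incoming, '{"prompt": "hello"}')
print(forwarded["headers"])
```

The prompt itself still reaches the provider unchanged, which is why the content of what you type matters even behind a proxy.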

Ollama: Local Model Framework

For developers, Ollama provides the simplest way to run large language models locally. Pull any model from repositories and run it immediately:

```bash
ollama run mistral
ollama run llama2
ollama run neural-chat
```

Ollama handles GPU optimization automatically and provides a REST API for integration with other applications. Like PrivateGPT, it offers complete privacy through local processing.
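As a rough sketch of the REST integration, the snippet below sends a prompt to a locally running Ollama server using only the Python standard library. It assumes Ollama is listening on its default address (`localhost:11434`) and that the `mistral` model has already been pulled. The helper names `build_request` and `ask_local_model` are made up for this example.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Prepare a POST request; "stream": False asks for a single JSON reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "mistral") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]


# Example call (requires a running Ollama server):
# print(ask_local_model("Explain GDPR's storage limitation principle in one sentence."))
```

Because the URL points at `localhost`, the prompt never crosses the network boundary of your machine.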

Critical Data Types You Should NEVER Share With ChatGPT

Regardless of OpenAI’s privacy claims, certain information should never be entered into any cloud-based AI system without encryption:

- Passwords and API Keys: ChatGPT’s storage systems could be breached, exposing your credentials

- Medical Records (PHI): Sharing patient information can violate HIPAA unless you use ChatGPT Enterprise under a signed Business Associate Agreement (BAA)

- Financial Data: Bank details, credit card information, and investment strategies should remain private

- Trade Secrets: Proprietary business processes, source code, and strategic plans permanently compromise competitive advantage

- Personal Identification: Social Security Numbers, driver’s license details, passport information

- Legal Documents: Attorney-client privileged communications, contracts, litigation strategies

- Children’s Information: Any data that could identify individuals under 13, whose data is specifically protected by laws such as COPPA and GDPR

The safest practice: Use pseudonyms, anonymize sensitive details, and employ temporary chat mode for sensitive queries.
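Anonymizing sensitive details can be partly automated before a prompt ever leaves your machine. The following is a minimal sketch, assuming three simple regular expressions for emails, US Social Security Numbers, and card-like digit runs. The patterns and the `redact` helper are illustrative only and will miss many real-world formats, so treat this as a safety net rather than a guarantee.

```python
import re

# Simple illustrative patterns; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
print(redact(prompt))
# Contact [EMAIL], SSN [SSN], card [CARD].
```

Running the redaction locally matters: a cloud-based scrubbing service would simply move the exposure to a different provider.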

How the EU AI Act Will Force Change

The EU AI Act entered into force in August 2024, and its obligations phase in through 2027. It will create stricter obligations for AI providers. Unlike GDPR, which focuses on data protection, the AI Act specifically mandates:

- Conformity Assessments: High-risk AI systems must pass a conformity assessment before deployment, which for some categories involves an independent third-party body

- Transparency Requirements: AI providers must disclose exactly how systems work, what data they process, and how to contest AI decisions

- Fundamental Rights Impact Assessments: For high-risk systems, companies must demonstrate they don’t violate human rights

- Human Oversight Requirements: High-risk systems must be designed so that humans can oversee them. For certain uses, such as remote biometric identification, at least two people must verify a result before anyone acts on it.

OpenAI’s business model—using vast amounts of user-generated data to train ever-larger models—will become economically unsustainable under these frameworks. Expect major changes by 2026-2027 as companies prepare for AI Act compliance.

The Practical Privacy Framework: 3-2-1 Rule for AI Usage

To maintain privacy while using AI tools, implement this framework:

3 seconds to decide: Before sharing any information with ChatGPT or any cloud AI, spend 3 seconds asking: “Would I be comfortable if this data appeared in the news?”

2 weeks maximum: Review your saved conversations every two weeks. Delete sensitive interactions immediately rather than relying on automatic deletion.

1 monthly cleanup: Perform a monthly privacy audit. Check your AI tool’s settings, verify opt-out preferences are still enabled, and review what data you’ve shared across platforms.

For sensitive work, skip cloud AI entirely and use PrivateGPT or Ollama running on local hardware.

Conclusion: Privacy is a Choice, Not a Default

The uncomfortable reality is that ChatGPT’s data practices are not exceptional—they reflect a business model where user data becomes training material and corporate assets. The €15 million GDPR fine against OpenAI signals that regulators worldwide are beginning to enforce privacy standards. The New York Times litigation forcing indefinite data retention demonstrates that even “deleted” data isn’t truly deleted.

But you have options. Mistral AI proves that powerful AI doesn’t require compromising privacy. Claude shows that transparent, opt-in data practices are possible at enterprise scale. PrivateGPT and Ollama demonstrate that local processing eliminates the entire privacy problem by design.

The choice is yours: continue using ChatGPT and accept that your data feeds corporate training pipelines, or migrate to alternatives that align with your privacy values. As AI becomes more integrated into daily life, the cost of privacy negligence compounds. Starting today with these alternatives protects not just your current privacy, but your future autonomy.


Source: K2Think.in — India’s AI Reasoning Insight Platform.