For decades, the legal industry has struggled with one persistent problem: contract review is slow, expensive, and prone to human error.

Lawyers often spend hours reading dense legal documents, searching for specific clauses, and flagging potential risks. This repetitive work consumes time that could otherwise be spent on strategic legal thinking.

For law firms and in-house legal teams, NDA review alone represents a significant drain on resources, especially as volume grows.

Artificial intelligence is now changing this reality. Advanced language models such as Claude 3 can analyze contracts in seconds, extracting key clauses and highlighting risks with consistent accuracy.

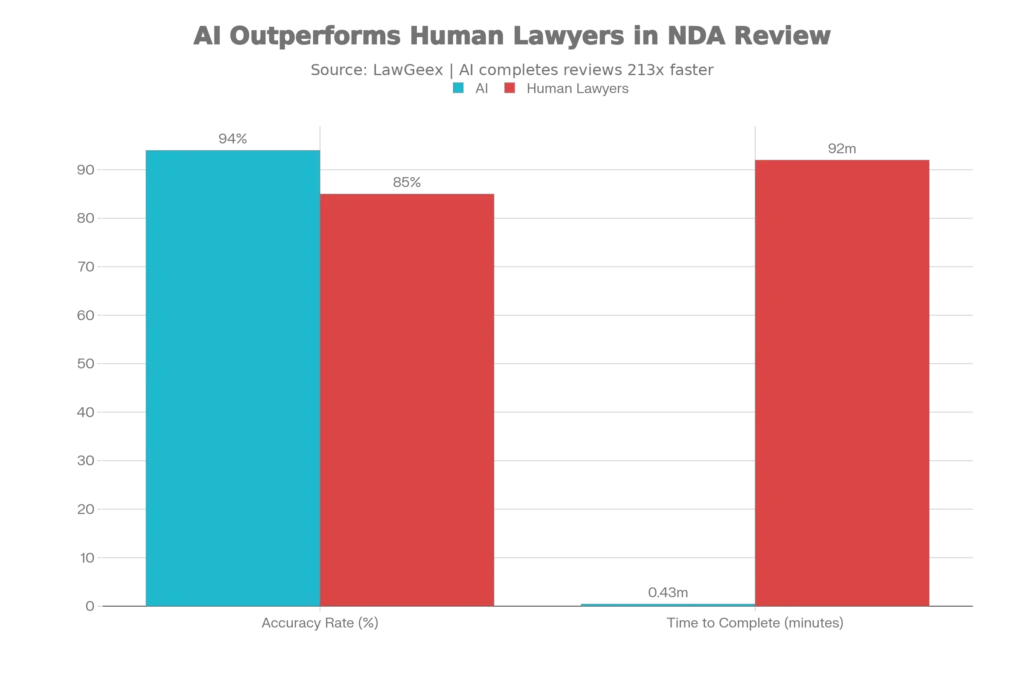

A widely cited 2018 study by LawGeex demonstrated the impact clearly. In a controlled test on five NDAs, LawGeex's AI achieved 94% accuracy, compared with an average of 85% for 20 experienced lawyers. The AI completed its review in 26 seconds, while the lawyers averaged 92 minutes.

This shift does not replace legal professionals. Instead, it reshapes legal workflows, allowing lawyers to focus on negotiation, judgment, and high-value decision-making rather than repetitive document analysis.

The Business Case: Why Traditional NDA Review Is Breaking Legal Departments

The Hidden Cost of Manual Review

Every non-disclosure agreement that passes through a legal department costs more than most executives realize. Studies show that the average cost to draft, review, negotiate, and file a single NDA ranges from $114 to $456, with legal review typically billed at $200 to $350 per hour. For organizations handling even modest volumes, these costs multiply rapidly.

The real problem isn’t just the direct cost of attorney time. Legal teams report spending up to 60% of their time on routine NDA reviews, leaving limited capacity for strategic work that actually drives business value. A typical NDA review takes 2 to 4 hours of focused legal time, during which an experienced attorney must carefully examine each clause, check it against organizational standards, flag deviations, and prepare redline suggestions. When a company processes 500 NDAs annually (not unusual for technology companies, venture capital firms, or large enterprises), the math becomes staggering.

According to industry benchmarking, an organization processing 500 contracts per year using traditional manual review would spend approximately 1,250 to 2,000 attorney hours annually, which at the $200 to $350 hourly rates cited above translates to roughly $250,000 to $700,000 in direct legal costs. Add support staff time, external counsel fees, overhead, and compliance risks from missed clauses, and the modeled annual burden reaches about $724,500. Yet despite this enormous investment, human reviewers still miss critical issues due to fatigue, time pressure, and the cognitive limits of processing hundreds of pages of dense legal language.

The Risk Profile of Manual Review

Beyond costs, manual review introduces unacceptable risk. In the LawGeex benchmark, experienced lawyers averaged only 85% accuracy in identifying contractual issues in NDAs, and real-world review happens amid competing deadlines, email distractions, and pressure to move deals forward. That 15% gap means that critical risk factors, such as non-standard liability caps, restrictive governing law clauses, or problematic indemnification language, can slip through undetected.

The consequences compound. A single missed clause in a confidentiality agreement could expose proprietary information, while overlooked termination language might lock an organization into unfavorable terms. One compliance gap can trigger regulatory penalties far exceeding the cost of proper legal review. For enterprises managing thousands of contracts, this risk profile becomes existential.

Claude 3: A New Standard for Legal Document Analysis

Understanding Claude’s Architecture and Capabilities

Claude 3 represents a fundamental shift in how AI approaches legal document analysis. Developed by Anthropic, the Claude 3 family includes three models scaled for different use cases: Haiku for speed, Sonnet for balance, and Opus for maximum reasoning capability. Each model incorporates constitutional AI training, a methodology emphasizing safety, honesty, and transparency—critical characteristics for legal applications where accuracy directly impacts business outcomes.

The breakthrough capability distinguishing Claude 3 for legal work is its 200,000-token context window. This means Claude can analyze an entire contract, related agreements, regulatory requirements, and organizational playbooks in a single pass, without losing the thread or requiring document chunking. A lawyer could upload a 100-page commercial agreement alongside a proprietary 50-page policy manual and ask Claude to identify every deviation, a task that would otherwise require hours of manual cross-referencing.
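A rough calculation shows why a 200,000-token window comfortably holds a bundle like the one described above. This is a minimal sketch that assumes about 500 words per page and about 1.3 tokens per word; both constants are rule-of-thumb assumptions rather than Anthropic figures, and real token counts vary with formatting, so treat the result as an estimate only.

```python
# Rough estimate of whether a document bundle fits in a model's context window.
# Both constants are rule-of-thumb assumptions, not official tokenizer figures.
WORDS_PER_PAGE = 500
TOKENS_PER_WORD = 1.3
CONTEXT_WINDOW = 200_000  # Claude 3 context window, in tokens


def estimate_tokens(pages: int) -> int:
    """Approximate the token count of a document with the given page length."""
    return round(pages * WORDS_PER_PAGE * TOKENS_PER_WORD)


def fits_in_context(page_counts: list[int], reserve_for_output: int = 4_000) -> bool:
    """True if all documents, plus room for the model's reply, fit in the window."""
    total = sum(estimate_tokens(pages) for pages in page_counts)
    return total + reserve_for_output <= CONTEXT_WINDOW


# The example from the text: a 100-page agreement plus a 50-page policy manual.
bundle = [100, 50]
print(sum(estimate_tokens(p) for p in bundle), fits_in_context(bundle))
```

Under these assumptions the two documents come to roughly 97,500 tokens, leaving ample headroom; a 400-page bundle would not fit.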

Claude 3 Sonnet achieves 78.8% accuracy on LegalBench, a comprehensive benchmark built by Stanford researchers in collaboration with legal professionals to measure legal reasoning. Claude 3 Opus, the most capable model in the family, performs better still, with demonstrated ability to handle multi-step legal analysis and complex clause interactions. These benchmarks matter because their tasks were designed and validated by legal experts, not written as marketing claims.

How Claude Performs NDA Review in Practice

When Claude reviews an NDA, it executes a structured workflow that mirrors sophisticated legal analysis. The model first extracts key clauses—identifying definition sections, restrictions on use, permitted recipients, term and termination provisions, remedies, and governing law. Natural language processing capabilities allow Claude to understand not just the literal text, but the contextual meaning and legal implications of each section.
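One way to get structured clause extraction is to ask the model for a JSON object with one key per clause category, then parse and validate the reply before anything downstream relies on it. The prompt wording, the category keys, and the JSON shape below are hypothetical choices for illustration, and the sample reply is hand-written rather than real model output.

```python
import json

# Hypothetical clause categories, mirroring the list in the text.
CLAUSE_KEYS = [
    "definitions", "restrictions_on_use", "permitted_recipients",
    "term_and_termination", "remedies", "governing_law",
]


def extraction_prompt(nda_text: str) -> str:
    """Ask for each clause category as a key in a single JSON object."""
    return (
        "Extract the following clauses from the NDA below. Reply with only a JSON "
        f"object whose keys are: {', '.join(CLAUSE_KEYS)}. Quote the contract text "
        "verbatim, and use null for any clause that is absent.\n\n"
        f"<nda>\n{nda_text}\n</nda>"
    )


def parse_extraction(reply: str) -> dict:
    """Parse the model's JSON reply and fail loudly if a category is missing."""
    data = json.loads(reply)
    missing = [key for key in CLAUSE_KEYS if key not in data]
    if missing:
        raise ValueError(f"reply is missing clause keys: {missing}")
    return data


# A hand-written stand-in for a model reply, not real model output.
sample_reply = json.dumps(
    {key: None for key in CLAUSE_KEYS} | {"governing_law": "Laws of the State of Delaware"}
)
clauses = parse_extraction(sample_reply)
print(clauses["governing_law"])
```

Validating the keys up front means a malformed reply fails at extraction time instead of surfacing later as a silently skipped clause.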

Next, Claude compares extracted terms against a customizable playbook—organizational standards that define acceptable liability caps, preferred governing jurisdictions, required indemnification language, and non-negotiable insurance requirements. This playbook-driven analysis ensures consistency across hundreds or thousands of reviews, eliminating the human variability that causes some lawyers to flag issues others miss.
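The playbook comparison step can be pictured as checking each extracted term against a stored standard. The sketch below is a minimal illustration, assuming terms have already been extracted into a dictionary; the term names, standard values, and acceptance tests are hypothetical, not a format Claude requires.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Deviation:
    term: str
    standard: Any
    found: Any


# Hypothetical playbook: term -> (standard value, test that the found value is acceptable).
PLAYBOOK: dict[str, tuple[Any, Callable[[Any, Any], bool]]] = {
    "liability_cap_multiple": (1.0, lambda found, std: found <= std),  # x annual contract value
    "governing_law": ("Delaware", lambda found, std: found == std),
    "insurance_usd": (5_000_000, lambda found, std: found >= std),
}


def compare_to_playbook(terms: dict[str, Any]) -> list[Deviation]:
    """List every extracted term that is missing or falls outside the playbook standard."""
    deviations = []
    for term, (standard, acceptable) in PLAYBOOK.items():
        found = terms.get(term)
        if found is None or not acceptable(found, standard):
            deviations.append(Deviation(term, standard, found))
    return deviations


extracted = {"liability_cap_multiple": 2.0, "governing_law": "Delaware", "insurance_usd": 2_000_000}
for deviation in compare_to_playbook(extracted):
    print(deviation)
```

Treating a missing term as a deviation is a deliberate choice: an NDA that omits a required clause should be flagged, not silently passed.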

Claude then assigns risk scores to identified deviations, flagging low-risk, medium-risk, and high-risk items separately. A deviation like “liability capped at 2x annual contract value instead of 1x” gets flagged as a negotiable point. A deviation like “exclusive jurisdiction in a non-US court without mutual agreement” gets marked high-risk. This prioritization ensures legal teams focus review time where it matters most.
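The two examples above can be written as explicit rules, which is one way a team might spot-check the model's risk labels. This is a hypothetical rule set, not how Claude scores risk internally; the 3x cut-off in particular is an illustrative threshold only.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


def score_liability_cap(multiple: float, standard: float = 1.0) -> Risk:
    """Caps at or below the standard are fine; modest overshoots are negotiable."""
    if multiple <= standard:
        return Risk.LOW
    # The 3x cut-off is a hypothetical threshold, not a recommendation.
    return Risk.MEDIUM if multiple <= 3 * standard else Risk.HIGH


def score_jurisdiction(country: str, exclusive: bool, mutually_agreed: bool) -> Risk:
    """Exclusive non-US jurisdiction imposed without mutual agreement is high risk."""
    if country == "US":
        return Risk.LOW
    if exclusive and not mutually_agreed:
        return Risk.HIGH
    return Risk.MEDIUM


print(score_liability_cap(2.0).value, score_jurisdiction("UK", exclusive=True, mutually_agreed=False).value)
```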

Finally, Claude generates both executive-level summaries and detailed redline suggestions. A one-page summary with red/yellow/green risk indicators can go to business teams, while detailed analysis with specific contract language recommendations goes to lawyers. The entire review maintains full auditability—decision-makers can see exactly what the AI flagged and why.
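A red/yellow/green summary can be derived mechanically from the flagged items. The sketch below assumes each flag is an (issue, risk level) pair and lets the worst flag set the overall status; the contract name and issues are made up for illustration.

```python
from collections import Counter

# Traffic-light indicator for each risk level.
LIGHTS = {"high": "RED", "medium": "YELLOW", "low": "GREEN"}


def overall_light(flags: list[tuple[str, str]]) -> str:
    """The worst flag present sets the contract's overall status."""
    levels = {level for _, level in flags}
    for level in ("high", "medium"):
        if level in levels:
            return LIGHTS[level]
    return LIGHTS["low"]


def executive_summary(contract: str, flags: list[tuple[str, str]]) -> str:
    """One status line, counts by level, then every non-low issue."""
    counts = Counter(level for _, level in flags)
    lines = [
        f"{contract}: {overall_light(flags)}",
        f"  high: {counts['high']}, medium: {counts['medium']}, low: {counts['low']}",
    ]
    lines += [f"  [{LIGHTS[level]}] {issue}" for issue, level in flags if level != "low"]
    return "\n".join(lines)


flags = [
    ("Exclusive non-US jurisdiction without mutual agreement", "high"),
    ("Liability cap at 2x annual contract value instead of 1x", "medium"),
    ("Standard definition of confidential information", "low"),
]
print(executive_summary("Acme NDA", flags))
```

Low-risk items are counted but not listed, which keeps the business-facing page short while the lawyers' detailed view retains everything.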

Real-World Performance Data on Contract Review

The performance gains are substantial. A mid-sized US law firm implementing Claude for bulk NDA and MSA analysis saw average review time drop from 4 hours to 55 minutes per contract, a 77% reduction. An enterprise financial services company using Claude for M&A due diligence found it could process twice as many contracts per week without adding headcount.

But performance goes beyond speed. Studies show that AI-powered contract review using advanced language models catches 45 to 60 issues per contract, compared with 15 to 20 through manual review: a 3x improvement in issue detection. This isn’t because the AI is being paranoid; it’s because systematically scanning every clause catches inconsistencies, conflicting provisions, and subtle deviations that a human would need to read the contract five times to spot.

The LawGeex benchmark, which pitted LawGeex’s AI against 20 experienced lawyers reviewing NDAs and was designed with input from legal academics, provides the most rigorous validation. The AI achieved 94% accuracy using the F-measure (the harmonic mean of precision and recall), the statistical standard for this type of comparison, while the lawyers averaged only 85%. On speed, the AI completed the task in 26 seconds versus an average of 92 minutes for the lawyers, a 212x improvement. Even the fastest human reviewer took 51 minutes, still 117 times longer than the AI.
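The accuracy metric and speed ratios above can be checked directly. This sketch defines the F-measure as the harmonic mean of precision and recall and reproduces the speed multiples from the reported times; the precision and recall values on the last line are illustrative inputs, not figures from the study.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Reported review times from the LawGeex study, in seconds.
AI_SECONDS = 26
AVG_LAWYER_SECONDS = 92 * 60
FASTEST_LAWYER_SECONDS = 51 * 60

# Integer division truncates to whole multiples, matching the figures in the text.
print(AVG_LAWYER_SECONDS // AI_SECONDS)      # 212
print(FASTEST_LAWYER_SECONDS // AI_SECONDS)  # 117

# Illustrative inputs only (not the study's precision and recall).
print(round(f_measure(0.90, 0.80), 3))
```

The harmonic mean punishes imbalance: a reviewer who flags everything gets high recall but low precision, and the F-measure stays low.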

The Financial Impact: ROI That Justifies Implementation

Cost Savings Across Multiple Categories

When organizations implement AI-driven contract review, cost reduction emerges across every budget line. Attorney time represents the largest savings opportunity. AI completes initial contract analysis in 2-5 minutes, identifying key terms and flagging deviations. Attorneys then review only flagged sections and exceptions rather than reading entire documents.

For a 500-contract organization, attorney time drops from 1,250-2,000 hours annually to 150-375 hours, a reduction of roughly 80-90%. At the average in-house attorney rate of $350 per hour, this saves $385,000 to $568,750 per organization annually. This doesn’t mean attorneys work less; instead, they shift from routine review to negotiation, risk assessment, and strategic contract optimization.
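The attorney-time savings follow from simple arithmetic on the figures in the text. This sketch pairs the low end of the manual range with the low end of the AI-assisted range (and high with high), which is the pairing that reproduces the dollar figures quoted.

```python
RATE_USD = 350  # average in-house attorney rate per hour, from the text

# (manual hours, AI-assisted hours) at each end of the 500-contract scenario
SCENARIOS = {"low end": (1_250, 150), "high end": (2_000, 375)}


def attorney_savings(manual_hours: int, ai_hours: int, rate: int = RATE_USD) -> tuple[int, float, int]:
    """Return hours saved, fractional reduction, and dollars saved."""
    saved = manual_hours - ai_hours
    return saved, saved / manual_hours, saved * rate


for name, (manual, ai) in SCENARIOS.items():
    hours, reduction, dollars = attorney_savings(manual, ai)
    print(f"{name}: {hours:,} h saved ({reduction:.0%}), ${dollars:,} per year")
```

The computed dollar savings match the $385,000 to $568,750 range; the percentage reduction depends on which ends of the two hour ranges are paired.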

Support staff requirements decline just as dramatically. Legal assistants who spend 35-50 minutes per contract managing intake, filing, and tracking see that time drop to 5-10 minutes, an 80-90% reduction. For a 500-contract volume, this saves $28,000-$31,000 annually.

External counsel costs disappear entirely for routine reviews. Many organizations previously outsourced bulk NDA review to outside counsel at $400-$1,200 per hour—a terrible value for routine work. With AI handling volume, internal teams eliminate these outsourcing expenses. In our modeled scenario, this saves approximately $120,000 annually.

Overhead reduction comes from decreased physical file storage, printing, archival management, and real estate needs. Many organizations still maintain large file rooms for contract storage; AI-enabled digital management reduces this to marginal costs, saving approximately 40% of overhead—roughly $50,000+ annually for mid-sized organizations.

Complete ROI Calculation: Why Implementation Pays for Itself in Weeks

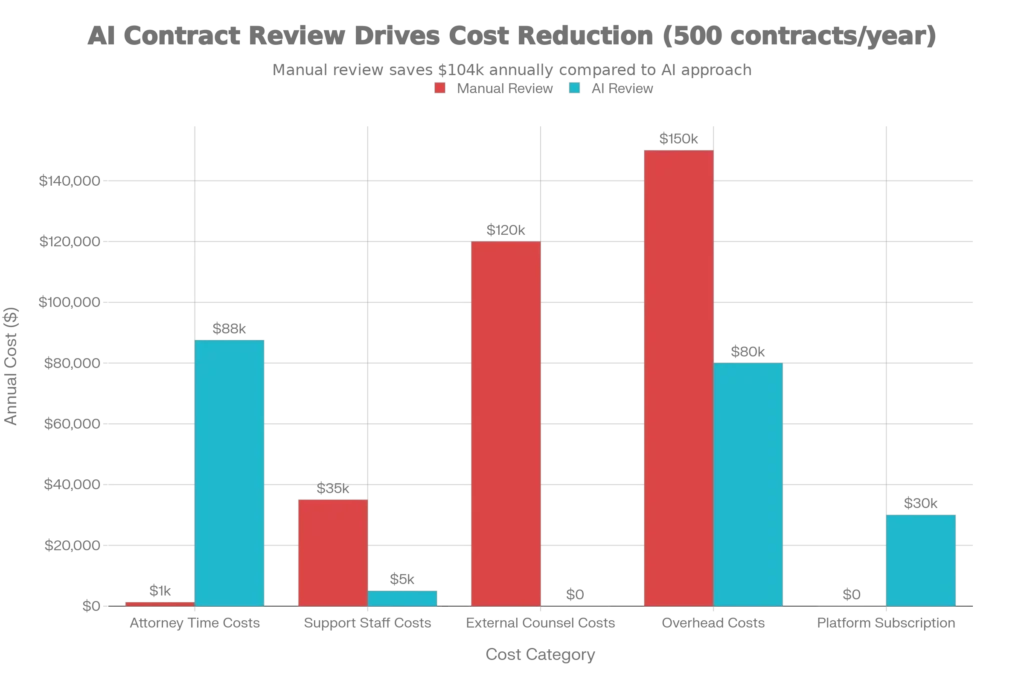

The total financial picture becomes compelling. For the same 500-contract annual volume, manual review costs approximately $724,500 including attorney time ($412,500), support staff ($35,000), external counsel ($120,000), overhead ($150,000), and hiring costs ($7,000). AI review costs approximately $202,500 including attorney time for exception handling ($87,500), reduced support staff ($5,000), no external counsel ($0), reduced overhead ($80,000), and platform subscription ($30,000).

Annual savings: $522,000 (72% cost reduction).

The platform investment typically ranges from $30,000-$50,000 annually, depending on whether you build a custom integration or use existing solutions. With modeled savings of $522,000, the payback period is less than one month, and the first-year return on a $30,000 subscription is about 1,740% ($522,000 ÷ $30,000).
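The modeled totals, savings, and return can be reproduced from the line items given above. Note that the $522,000 savings figure already nets out the $30,000 subscription, so the return here is net savings divided by platform cost, which is one of several common ways to define ROI.

```python
# Modeled annual costs for 500 contracts, from the text (USD).
manual = {"attorney_time": 412_500, "support_staff": 35_000, "external_counsel": 120_000,
          "overhead": 150_000, "hiring": 7_000}
ai_assisted = {"attorney_time": 87_500, "support_staff": 5_000, "external_counsel": 0,
               "overhead": 80_000, "platform_subscription": 30_000}

manual_total = sum(manual.values())         # 724,500
ai_total = sum(ai_assisted.values())        # 202,500
savings = manual_total - ai_total           # 522,000, already net of the subscription
reduction = savings / manual_total          # about 0.72

platform = ai_assisted["platform_subscription"]
roi = savings / platform                    # 17.4, i.e. 1,740%
payback_months = platform / (savings / 12)  # under one month

print(f"savings ${savings:,} ({reduction:.0%}), ROI {roi:.0%}, payback {payback_months:.1f} months")
```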

Even at modest volumes—just 100 contracts annually—AI saves approximately $100,000 versus manual review, representing 200-300% annual return on a $30,000 platform investment. The break-even point is surprisingly low: approximately 30-40 contracts annually depending on complexity.

How to Implement Claude 3 for NDA Review: A Practical Framework

Step 1: Choose the Right Claude Model and Deployment

Claude 3 Sonnet offers the optimal balance for most legal organizations—it’s significantly more capable than Haiku for complex contract analysis, but costs less than Opus while delivering exceptional performance. Sonnet achieves 78.8% accuracy on legal benchmarks and processes information at remarkable speed, sufficient for high-volume NDA review.

For implementation, you have three primary options: (1) Claude.ai via web interface for immediate testing with Claude Pro or Enterprise subscriptions, (2) Claude API for custom integration with document management systems and workflow automation, or (3) third-party platforms like Zapier, Microsoft Power Automate, or legal-specific tools that abstract the API complexity.
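For the API route, a review is a single HTTP POST to Anthropic's Messages endpoint. Anthropic publishes an official Python SDK that most teams would use; this sketch sticks to the standard library so it stays self-contained, and it only builds the request unless `send` is called. The model ID shown is Claude 3 Sonnet's original identifier; older models are retired over time, so check Anthropic's current model list. The prompt wording is a hypothetical example.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_review_request(nda_text: str, playbook: str,
                         model: str = "claude-3-sonnet-20240229") -> urllib.request.Request:
    """Build (but do not send) a Messages API request for one NDA review."""
    body = {
        "model": model,
        "max_tokens": 2_048,
        "system": "You are a contract reviewer. Apply this playbook:\n" + playbook,
        "messages": [{"role": "user", "content": "Review this NDA:\n\n" + nda_text}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first content block in the reply."""
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["content"][0]["text"]


req = build_review_request("Full NDA text here.", "Liability capped at 1x annual contract value.")
print(req.get_method(), req.full_url)
# send(req) would make a real, billable API call.
```

Putting the playbook in the `system` field and the contract in the user message keeps the organization's standards fixed across reviews while only the document changes.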

For enterprises, API deployment offers the most flexibility. You can integrate Claude with existing contract management systems, route different contract types to different analysis playbooks, and maintain full audit trails of every review decision. The Commercial Terms of Service explicitly permit organizations to retain full ownership of outputs generated through Claude’s services, with expanded copyright indemnity protecting against infringement claims—critical for legal work.

Step 2: Build Your Legal Playbook

The playbook is your organization’s legal “personality” encoded into the AI system. It defines: (1) acceptable liability caps (e.g., “liability limited to 1x annual contract value”), (2) preferred governing law and dispute resolution (e.g., “disputes resolved in Delaware courts under Delaware law”), (3) insurance requirements (e.g., “minimum $5M general liability”), (4) indemnification standards, and (5) non-negotiable data protection clauses for GDPR or CCPA compliance.
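A playbook like this can be stored as structured rules and rendered into the system prompt, so every review cites the same numbered standards. In this minimal sketch, four entries paraphrase the examples above and the indemnification rule is invented for illustration; the prompt wording is likewise hypothetical.

```python
# Hypothetical playbook entries; the indemnification rule is invented for illustration.
PLAYBOOK = [
    ("Liability caps", "Liability limited to 1x annual contract value."),
    ("Governing law and disputes", "Disputes resolved in Delaware courts under Delaware law."),
    ("Insurance", "Minimum $5M general liability."),
    ("Indemnification", "Indemnification obligations must be mutual."),
    ("Data protection", "GDPR and CCPA data protection clauses are non-negotiable."),
]


def playbook_to_system_prompt(entries: list[tuple[str, str]]) -> str:
    """Render numbered playbook rules into a system prompt the model can cite."""
    rules = "\n".join(f"{n}. {topic}: {rule}" for n, (topic, rule) in enumerate(entries, start=1))
    return (
        "You review contracts against the organization's playbook below. For every "
        "clause that departs from a rule, cite the rule number and quote the clause.\n\n"
        + rules
    )


print(playbook_to_system_prompt(PLAYBOOK))
```

Numbering the rules gives reviewers a stable reference ("deviates from rule 1") that also makes audit trails easier to read.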

Your playbook should reference internal policies, represent risk appetite approved by leadership, and reflect past negotiation outcomes. Effective playbooks typically run 5-20 pages of structured guidance rather than novel legal analysis. The more specific your playbook, the more consistent and predictable Claude’s analysis becomes.

Step 3: Start with NDAs, Then Scale

Begin with non-disclosure agreements, the highest-volume contract type and the use case where AI delivers the most obvious value. Process 20-30 sample NDAs with Claude, then have your legal team review the analysis and provide feedback. This calibration period usually requires 1-2 weeks.
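During calibration, comparing the issues Claude flags with those your lawyers flag on the same sample NDAs shows where the playbook or prompt needs work. This sketch assumes both reviews have been normalized to a shared set of issue labels (the labels shown are hypothetical) and treats the lawyer's review as the reference.

```python
def compare_reviews(ai_flags: set[str], lawyer_flags: set[str]) -> dict:
    """Score the AI's flags against a lawyer's review of the same NDA.

    Treats the lawyer's flags as the reference; disagreements in both
    directions are returned for discussion during calibration.
    """
    agreed = ai_flags & lawyer_flags
    return {
        "agreed": sorted(agreed),
        "ai_only": sorted(ai_flags - lawyer_flags),      # lawyer misses or AI false alarms
        "lawyer_only": sorted(lawyer_flags - ai_flags),  # likely playbook or prompt gaps
        "precision": len(agreed) / len(ai_flags) if ai_flags else 0.0,
        "recall": len(agreed) / len(lawyer_flags) if lawyer_flags else 0.0,
    }


ai = {"liability cap above 1x", "non-US jurisdiction", "no residuals clause"}
lawyer = {"liability cap above 1x", "non-US jurisdiction", "term exceeds 3 years"}
result = compare_reviews(ai, lawyer)
print(result["ai_only"], result["lawyer_only"], round(result["precision"], 2))
```

The "ai_only" bucket deserves the most discussion: some items will be genuine catches the lawyer missed, others false alarms that the playbook should suppress.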

Once the team is confident, process your entire backlog of pending NDAs. Organizations typically see remarkable velocity improvements here: one attorney with AI support can process 50-100 NDAs per week, versus 10-15 NDAs working manually.

After NDA mastery, expand to MSAs (Master Service Agreements), vendor contracts, employment agreements, and IP-related documents in sequence. Each contract type requires playbook refinement, but the underlying workflow remains consistent.

Step 4: Set Up Review Workflow and Audit Trails

Implement a three-tier routing system: (1) low-risk contracts (minor deviations, favorable terms) route to paralegals for final approval, (2) medium-risk contracts route to senior associates for negotiation preparation, and (3) high-risk contracts go to partners for strategy assessment. This ensures experienced attorneys concentrate on contracts where judgment truly matters.
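The three-tier routing rule reduces to sending each contract to the reviewer matching its most severe flag. A minimal sketch, assuming risk levels have already been assigned upstream:

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Reviewer for each tier, following the three-tier system described above.
ROUTES = {Risk.LOW: "paralegal", Risk.MEDIUM: "senior associate", Risk.HIGH: "partner"}


def route(flag_levels: list[Risk]) -> str:
    """Send a contract to the reviewer for its most severe flag; no flags means low risk."""
    return ROUTES[max(flag_levels, default=Risk.LOW)]


print(route([Risk.LOW, Risk.MEDIUM]))
```

Using an ordered `IntEnum` lets `max` pick the worst flag directly, so a single high-risk item escalates the whole contract regardless of how many low-risk items accompany it.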

Maintain complete audit trails showing the original contract, Claude’s analysis, the playbook used, any human edits, and final approval. This documentation protects against regulatory challenges, supports compliance audits, and provides invaluable historical data showing how contract terms evolved.
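An audit trail can be as simple as an append-only log with one JSON record per review. In this sketch the full contract is assumed to live in your document management system, and the record stores a SHA-256 digest that ties the entry to the exact text reviewed; the field names and sample values are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(contract_text: str, analysis: str, playbook_version: str,
                 human_edits: list[str], approved_by: str) -> str:
    """Serialize one review as a single JSON line.

    The SHA-256 digest ties the record to the exact contract text reviewed;
    the full contract is assumed to be stored separately.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "contract_sha256": hashlib.sha256(contract_text.encode("utf-8")).hexdigest(),
        "analysis": analysis,
        "playbook_version": playbook_version,
        "human_edits": human_edits,
        "approved_by": approved_by,
    }
    return json.dumps(record, sort_keys=True)


def append_to_log(path: str, line: str) -> None:
    """Append-only JSON Lines log: one review per line, never rewritten in place."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(line + "\n")


entry = audit_record("Full NDA text...", "Liability cap at 2x: medium risk", "playbook-v3",
                     ["Accepted 2x cap for strategic vendor"], "J. Doe")
print(entry)
```

Recording the playbook version alongside each review makes it possible to explain later why a clause accepted last year would be flagged today.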

Step 5: Train Your Team and Manage Change

Lawyer adoption of AI contract review usually faces resistance rooted in two concerns: (1) fear that AI will make mistakes, and (2) perception that technology undermines their expertise. Address both directly through demonstration.

Show lawyers how Claude’s analysis surfaces issues they would have missed under time pressure. Have them review Claude’s first 20 analyses and compare against their own review—the data typically shows Claude catches more issues while requiring less time. This evidence shifts perception from “AI threatens my job” to “AI makes my job easier and more valuable.”

Invest in 4-6 hours of training covering: how to read Claude’s analysis and risk ratings, how to prepare contracts for submission (PDF best practices), how to customize playbooks, and how to integrate AI output into existing document management systems.

The Technical Architecture: Why Claude Excels at Legal Analysis

Natural Language Processing and Semantic Understanding

Legal documents present unique challenges that general AI models struggle with. Contracts contain domain-specific terminology (“indemnification,” “force majeure,” “non-solicitation”), embedded references to external documents, and deliberately ambiguous language designed to create negotiation space. Claude’s training includes substantial legal corpus exposure and instruction-tuning on legal reasoning tasks, enabling it to parse this complexity.

When Claude processes an NDA, it doesn’t just pattern-match keywords. It understands semantic relationships, recognizing that “the receiving party shall not disclose confidential information” means something very different from “the receiving party may not disclose confidential information except as required by law.” This nuanced comprehension comes from a transformer-based architecture whose attention mechanisms model how each word relates to the rest of the passage, learned from training data that includes legal language.

Machine Learning for Pattern Recognition and Risk Assessment

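Beyond understanding individual clauses, Claude can identify patterns across contracts. Given a batch of NDAs in one context window, or summaries of past reviews drawn from your own records, it can recognize common deviation patterns: certain counterparties consistently demand shorter confidentiality terms, others always request carve-outs for public-domain information, and some always want mutual agreements while others demand unilateral protection. Because the model does not retain memory between separate requests, your workflow has to supply that history.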

This pattern recognition feeds into machine learning-powered risk scoring. Rather than purely rule-based flagging (“if liability cap < $5M, flag high-risk”), Claude assesses contextualized risk—a $2M liability cap might be acceptable for a vendor contract with $1M annual spend but unacceptable for a $500M strategic partnership.
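Claude's contextual judgment is not a formula, and the sketch below does not reproduce how the model reasons. It only illustrates why the fixed rule quoted above misjudges the two examples, by contrasting it with a rule that scales the cap to the size of the relationship. The coverage thresholds are hypothetical.

```python
def fixed_rule(cap_usd: float) -> str:
    """The purely rule-based approach quoted in the text."""
    return "high" if cap_usd < 5_000_000 else "low"


def contextual_rule(cap_usd: float, deal_value_usd: float) -> str:
    """Judge the cap relative to the size of the relationship (hypothetical thresholds)."""
    coverage = cap_usd / deal_value_usd
    if coverage >= 1.0:
        return "low"  # cap covers at least the full deal value
    if coverage >= 0.25:
        return "medium"
    return "high"


for deal_value in (1_000_000, 500_000_000):
    print(deal_value, fixed_rule(2_000_000), contextual_rule(2_000_000, deal_value))
```

The fixed rule flags the same $2M cap as high risk in both deals; the relative rule accepts it for the $1M vendor contract and flags it for the $500M partnership, matching the judgment described above.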

The Extended Thinking Advantage

Starting with Claude 3.7 Sonnet, Claude models offer an Extended Thinking mode in which the model reasons through complex problems step by step and makes that intermediate reasoning visible to users. For legal analysis, this means Claude can explain not just “this clause is problematic,” but “this clause is problematic because it combines section 3.2’s confidentiality restrictions with section 5.1’s termination rights, creating ambiguity about whether information destroyed at termination becomes available for disclosure within 30 days of destruction under section 6.3.”
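Through the API, Extended Thinking is enabled with a `thinking` parameter that sets a token budget for reasoning, and the reply returns that reasoning in separate content blocks. This is a minimal sketch of the request body and of separating reasoning from the final answer; the model ID is Claude 3.7 Sonnet's identifier at launch (check Anthropic's current model list), and the budget values are illustrative rather than recommendations.

```python
import json


def extended_thinking_body(prompt: str, budget_tokens: int = 8_000,
                           max_tokens: int = 16_000) -> dict:
    """Build a Messages API request body with extended thinking enabled."""
    # The API requires a budget of at least 1,024 tokens, below max_tokens.
    if not 1_024 <= budget_tokens < max_tokens:
        raise ValueError("budget_tokens must be >= 1024 and less than max_tokens")
    return {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


def split_reasoning(response: dict) -> tuple[str, str]:
    """Separate "thinking" blocks (the reasoning) from "text" blocks (the answer)."""
    blocks = response["content"]
    thinking = "\n".join(b["thinking"] for b in blocks if b["type"] == "thinking")
    answer = "\n".join(b["text"] for b in blocks if b["type"] == "text")
    return thinking, answer


print(json.dumps(extended_thinking_body("Do sections 3.2, 5.1, and 6.3 of this NDA conflict?"), indent=2))
```

Keeping the reasoning separate from the answer lets a reviewing attorney read the conclusion first and open the step-by-step logic only when a flag needs verifying.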

This transparent reasoning builds trust—lawyers can verify Claude’s logic rather than accepting a black-box judgment. Extended Thinking with Claude Opus 4 achieves 79.3% accuracy on legal benchmarks, slightly outperforming standard mode at 78.1%.

Comparison with Alternatives: Why Claude 3 Stands Out for Legal Work

Claude vs. ChatGPT for Contract Review

ChatGPT offers superior versatility and broader ecosystem integration, making it the default choice for general business applications. However, for legal contract analysis, Claude’s advantages become decisive.

Claude’s 200,000 token context window versus ChatGPT’s 128,000 tokens means Claude can simultaneously analyze a contract alongside related agreements, policies, and regulatory guidance without fragmentation. For a lawyer reviewing a 50-page vendor agreement against a 30-page organizational policy and 20-page relevant GDPR guidance, Claude’s context handling is transformative while ChatGPT requires chunking and multiple prompts.

Claude also delivers more cautious, structured analysis emphasizing accuracy over speed—exactly what legal work demands. ChatGPT often aims to be helpful and creative, sometimes introducing speculative reasoning that’s inappropriate for legal review. Claude prioritizes factual accuracy and transparent limitation acknowledgment.

On pricing, Claude’s Pro and Team plans sit at roughly the same price points as ChatGPT’s equivalent tiers (Claude Pro and ChatGPT Plus both cost $20 per month), while Claude delivers legal-domain performance that outmatches general-purpose capabilities.

Legal-Specific Tools: Why Claude Often Wins

Platforms like Kira Systems, Relativity, and specialized legal AI tools offer deep contract analysis and compliance-focused features. However, these tools often require substantial setup, come with enterprise pricing ($100K+ annually), and struggle with contracts outside their training domain.

Claude offers flexibility without the deployment burden. You can start reviewing NDAs immediately through the web interface, with no IT integration, licensing negotiations, or vendor selection cycles. When you’re ready for integration, Claude’s API is developer-friendly and priced predictably per token.

This combination—immediate usability, deep legal reasoning, large context windows, and transparent pricing—explains why multiple organizations now run Claude-primary workflows rather than purpose-built legal AI platforms.

Implementation Challenges and How to Address Them

Data Privacy and Enterprise Security

Organizations handling confidential contracts worry about data security when using cloud AI services. Claude addresses this directly: under Anthropic’s commercial terms, data submitted through the API and business plans is not used to train models by default, so contract contents remain confidential. Anthropic also maintains SOC 2 Type II certification and offers a data processing addendum to support GDPR compliance.

For maximum security, access Claude through a major cloud provider such as Amazon Bedrock or Google Cloud Vertex AI and use private networking (for example, VPC endpoints) with restricted access, so that contracts do not travel over the public internet.

Accuracy and the Irreducible Need for Human Review

AI contract review is extraordinarily capable: LawGeex’s system reached 94% accuracy on NDAs, and models like Claude match or exceed human performance on many legal reasoning tasks. Yet claiming 100% accuracy would be foolish. AI makes mistakes, sometimes confidently. The solution isn’t avoiding AI or trusting it blindly; it’s implementing AI as a first-draft reviewer, with mandatory attorney review of complex, high-stakes contracts.

One firm successfully implemented this model: NDAs with no deviations or minor deviations route to paralegals for approval—90% AI, 10% human. NDAs with significant deviations route to senior attorneys for negotiation strategy—AI surfaces the issues, humans make business judgments. This two-tier system maintains legal rigor while capturing 80-85% time savings.

Integration with Existing Systems

Claude works through the web interface, the API, or third-party platforms. For immediate value without IT overhead, start with the web interface and manual uploads. When you need automation, API integration typically takes 2-4 weeks with in-house developers or legal tech implementation partners. Most integration costs fall below $50,000, trivial compared to the savings.

Popular integration points: uploading contracts from document management systems, routing flagged contracts based on risk levels to email or Slack, syncing analysis to contract lifecycle management platforms, and generating compliance reports.
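Routing flagged contracts to Slack can use an incoming webhook, which accepts a JSON payload with a `text` field. This sketch builds the request without sending it; the webhook URL is a placeholder (a real one is issued when you create the webhook in Slack), and alerting only on medium and high risk is a hypothetical policy.

```python
import json
import urllib.request

ALERT_LEVELS = {"medium", "high"}  # hypothetical policy: low-risk contracts don't alert


def should_alert(risk: str) -> bool:
    return risk in ALERT_LEVELS


def slack_alert(webhook_url: str, contract: str, risk: str, issues: list[str]) -> urllib.request.Request:
    """Build (but do not send) a POST to a Slack incoming webhook."""
    lines = [f"*{risk.upper()} risk*: {contract}"] + [f"- {issue}" for issue in issues]
    payload = {"text": "\n".join(lines)}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL, not a working webhook.
WEBHOOK = "https://hooks.slack.com/services/EXAMPLE"
if should_alert("high"):
    request = slack_alert(WEBHOOK, "Acme NDA", "high", ["Exclusive non-US jurisdiction"])
    # urllib.request.urlopen(request) would deliver it; omitted here.
    print(json.loads(request.data)["text"])
```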

Measuring Success: KPIs That Matter for Legal Teams

Successful Claude implementation should track: (1) review cycle time (target: 60% reduction from hours to minutes), (2) issue detection accuracy (target: 3x more issues caught than manual review), (3) processing cost per contract (target: 60% reduction), (4) attorney time per contract (target: 80% reduction), and (5) compliance rate (target: 99%+ adherence to organizational standards).
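These KPIs can be computed from a manual baseline and current per-contract metrics. In this sketch the baseline and current figures are drawn from the article's own examples (4 hours to 55 minutes per review, $724,500 and $202,500 spread over 500 contracts, 1,250 and 150 attorney hours over 500 contracts, 15 versus 45 issues found); the compliance rate is a hypothetical value.

```python
# Targets from the text.
REDUCTION_TARGETS = {"cycle_time_min": 0.60, "cost_per_contract_usd": 0.60, "attorney_hours": 0.80}
DETECTION_TARGET = 3.0    # issues caught relative to the manual baseline
COMPLIANCE_TARGET = 0.99  # adherence to organizational standards


def kpi_report(baseline: dict, current: dict) -> dict:
    """Return (value, target_met) for each KPI, comparing current metrics with a manual baseline."""
    report = {}
    for name, target in REDUCTION_TARGETS.items():
        cut = (baseline[name] - current[name]) / baseline[name]
        report[name] = (round(cut, 3), cut >= target)
    detection = current["issues_found"] / baseline["issues_found"]
    report["issue_detection"] = (round(detection, 2), detection >= DETECTION_TARGET)
    rate = current["compliance_rate"]
    report["compliance_rate"] = (rate, rate >= COMPLIANCE_TARGET)
    return report


# Per-contract figures drawn from the article's examples; the compliance rate is hypothetical.
baseline = {"cycle_time_min": 240, "cost_per_contract_usd": 1_449, "attorney_hours": 2.5, "issues_found": 15}
current = {"cycle_time_min": 55, "cost_per_contract_usd": 405, "attorney_hours": 0.3,
           "issues_found": 45, "compliance_rate": 0.995}

for kpi, (value, met) in kpi_report(baseline, current).items():
    print(f"{kpi}: {value} {'met' if met else 'missed'}")
```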

Dashboard analytics should show these metrics weekly, enabling legal leaders to quantify value for executive discussions. The most compelling metric: cost savings. An organization processing 500 NDAs annually sees approximately $522,000 in annual savings—a number that resonates across finance, operations, and executive leadership.

Beyond financial metrics, track stakeholder satisfaction. Are business teams happy with turnaround times? Do attorneys report less burnout from routine review? Are compliance teams confident in risk identification? These qualitative metrics often drive long-term adoption and budget allocation.

Future Directions: The Evolution of Legal AI

The legal AI landscape evolves rapidly. Claude 3.7 Sonnet introduced Extended Thinking mode, and the Claude 4 models extend it, but development continues. Legal-specific instruction tuning, in which Claude trains on curated legal reasoning examples, could further enhance domain-specific performance. Future versions will likely achieve 95%+ accuracy on legal benchmarks while improving reasoning transparency.

The broader trend toward agent-based AI will enable more autonomous contract workflows. Rather than processing individual documents, Claude agents could autonomously negotiate standard terms, request redlines aligned with organizational playbooks, and escalate complex issues to humans. Early experiments show Claude agents completing multi-step legal workflows with minimal human intervention.

Regulatory frameworks will evolve too. The EU AI Act imposes compliance requirements on AI systems classified as “high-risk,” a category that may include some legal AI applications. Organizations using Claude benefit from Anthropic’s proactive compliance efforts and transparent governance practices.

Conclusion: The Business Case Is Resolved

The question is no longer whether AI can review contracts effectively—the LawGeex study, academic research, and dozens of real-world implementations answer that affirmatively. AI reviews NDAs more accurately, faster, and cheaper than experienced lawyers. Claude 3 specifically delivers this capability through a large context window, constitutional training emphasizing safety, and demonstrated legal reasoning that outperforms general-purpose AI.

The practical decision facing legal departments is simpler: start now, or accept an opportunity cost that runs to $500K+ per year at a 500-NDA volume. A mid-sized organization can begin Claude-powered NDA review within two weeks, see positive results within one month, and achieve positive ROI in 30-45 days. The risk of not implementing exceeds the risk of thoughtful implementation with attorney oversight.

For in-house counsel, law firms, and corporations managing contract volumes, Claude 3 represents the first genuinely transformative tool in decades—one that frees legal professionals from routine document scanning to focus on judgment-dependent work that actually requires human expertise. That shift, more than the cost savings alone, explains adoption momentum. Lawyers don’t want to spend three hours per day reading contracts. They want to practice law.


Source: K2Think.in — India’s AI Reasoning Insight Platform.