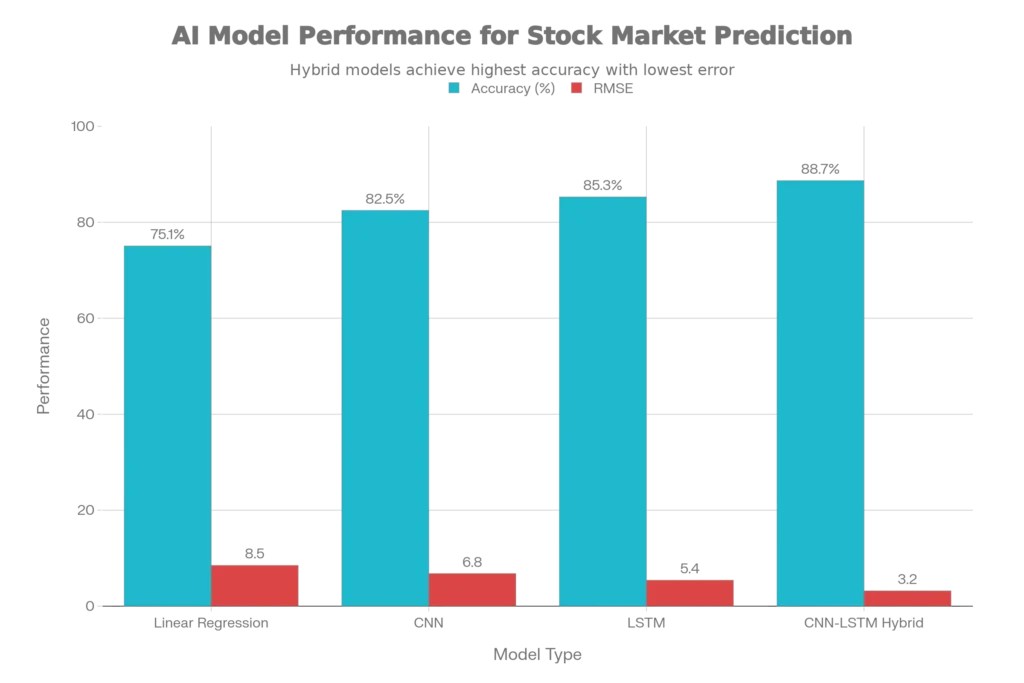

The promise of artificial intelligence revolutionizing stock market prediction has captivated investors and financial institutions worldwide. However, the reality is significantly more nuanced than headlines suggest. While AI-powered models can achieve impressive accuracy rates—with hybrid CNN-LSTM models reaching 88.7% accuracy compared to traditional methods at 75%—fundamental market constraints mean no AI can perfectly predict stock prices or market crashes. This comprehensive guide separates myth from reality, examining what AI can genuinely accomplish in financial forecasting, backed by peer-reviewed research, real 2025 market data, and practical limitations you need to understand before deploying these tools.

The AI Stock Prediction Market is Experiencing Explosive Growth

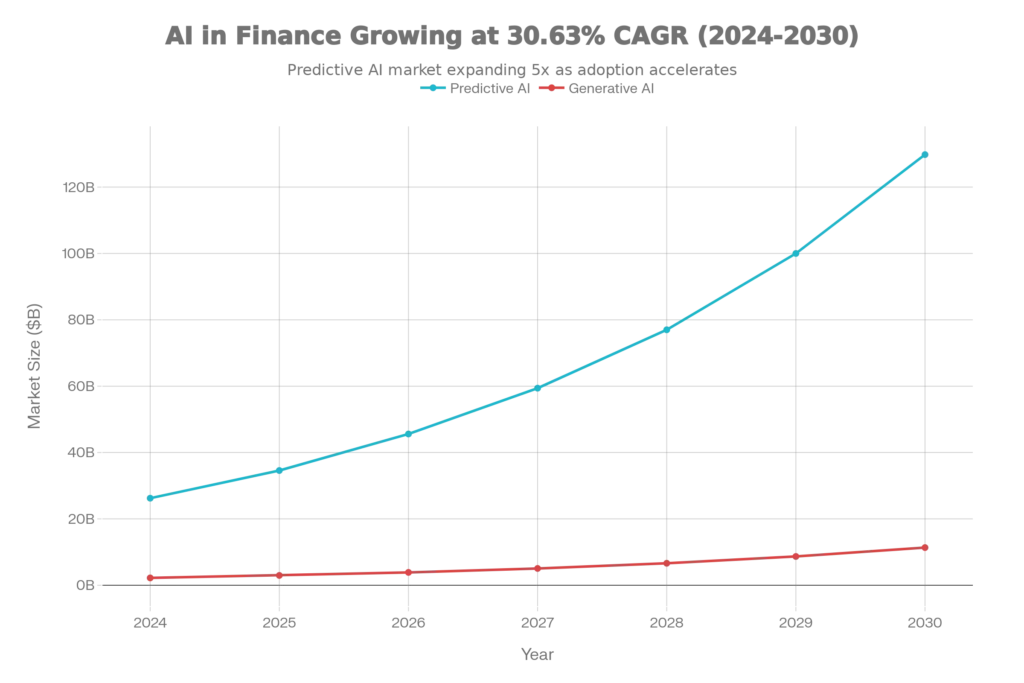

The financial services industry is betting heavily on AI-driven forecasting. The AI in financial forecasting market was valued at $34.58 billion in 2025 and is projected to grow at a 32.7% compound annual growth rate (CAGR) through 2029, reaching approximately $182 billion. This represents more than a five-fold expansion from 2024’s $26.23 billion. Simultaneously, generative AI in financial services—a subset focusing on creating predictive models—is expanding from $2.96 billion in 2025 to a projected $25.71 billion by 2033, growing at 31.0% CAGR.

These market figures reflect a genuine shift: over 58% of finance teams in North America now utilize AI analytics for forecasting, achieving an average 20% improvement in forecast accuracy compared to traditional methods. Institutional investors—hedge funds like Two Sigma and Citadel—are increasingly integrating AI into their core investment strategies. Yet this institutional adoption masks a critical reality: improved accuracy relative to baseline methods does not equal market predictability or guaranteed profits.

How AI Models Actually Work for Stock Prediction: The Four Major Approaches

Long Short-Term Memory (LSTM) Networks: Capturing Temporal Dependencies

LSTM networks form the backbone of most modern stock prediction systems. These specialized neural networks excel at identifying patterns in sequential data—precisely what stock prices represent. Unlike traditional statistical models like ARIMA (AutoRegressive Integrated Moving Average), which assume linear relationships, LSTMs process historical price data alongside technical indicators (moving averages, RSI, MACD, Bollinger Bands) to capture both short-term fluctuations and long-term trends.
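
To make the "technical indicators" input concrete, here is a minimal sketch of two of the indicators named above, computed from a list of closing prices. The function names are illustrative rather than taken from any library, and the RSI shown is the simple-average variant, not Wilder's smoothed version that many charting tools use.

```python
from typing import List, Optional


def simple_moving_average(prices: List[float], window: int) -> List[Optional[float]]:
    """Trailing mean of the last `window` prices; None until enough history exists."""
    out: List[Optional[float]] = []
    for i in range(len(prices)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(prices[i + 1 - window : i + 1]) / window)
    return out


def rsi(prices: List[float], period: int = 14) -> Optional[float]:
    """Relative Strength Index over the last `period` price changes.

    Simple-average variant (an assumption of this sketch); returns None
    when there is not enough history, and 100.0 when there were no losses.
    """
    if len(prices) < period + 1:
        return None
    changes = [prices[i] - prices[i - 1] for i in range(len(prices) - period, len(prices))]
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period
    if avg_loss == 0:
        return 100.0
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


# Hypothetical closing prices, for illustration only.
closes = [10, 11, 12, 11, 13, 14]
print(simple_moving_average(closes, 3))
print(round(rsi(closes, period=5), 1))  # 83.3
```

In a real pipeline, series like these would be stacked alongside raw prices as extra input columns for the network.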

A 2025 study on S&P 500 forecasting found that LSTM models achieved 96.41% accuracy without additional features and 92.46% accuracy when including technical indicators, both well ahead of ARIMA’s 89.8% accuracy. This performance gap is consistent with LSTM’s advantage in handling nonlinear market relationships. However, this same study revealed a critical limitation: LSTM accuracy degraded from 95.84% in the first half of testing periods to 84.92% in the later half, demonstrating how models struggle with extended forecasting windows and regime shifts.

Convolutional Neural Networks (CNN): Feature Extraction from Market Indicators

CNNs operate by extracting meaningful patterns from raw market data—functioning similarly to how image recognition works, but applied to price charts and indicator matrices. A comparative analysis across 24 industries on the Indonesian stock exchange found CNN achieved 82.5% accuracy for trend identification but demonstrated higher variability across different stocks.

The strength of CNNs lies in their ability to process multiple technical indicators simultaneously. When combined with historical price patterns, CNN-based models achieve up to 90% accuracy for trend classification. However, CNN’s weakness mirrors its strength: by focusing on localized spatial patterns in data, it can miss the broader temporal context that LSTM networks capture naturally.

Hybrid CNN-LSTM Models: The Current Best Practice

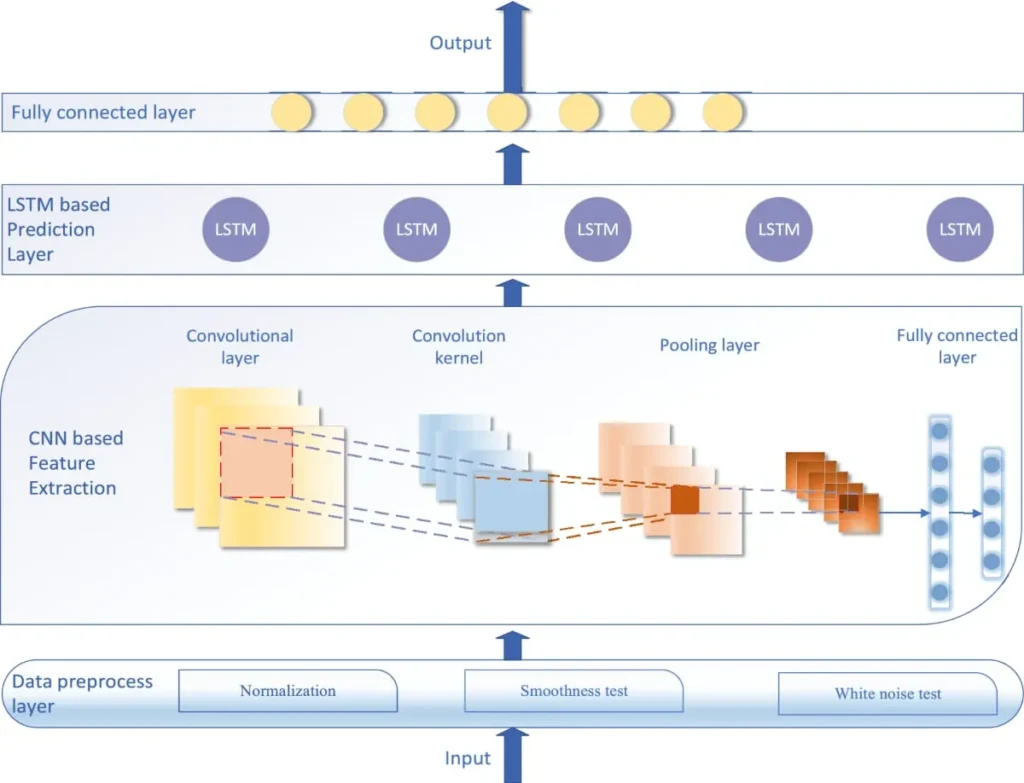

The convergence of CNN and LSTM capabilities has created hybrid architectures that combine CNN’s feature extraction with LSTM’s temporal modeling—currently the highest-performing approach for stock prediction.

CNN-LSTM hybrid models demonstrate 88.7% accuracy versus LSTM’s 85.3% and CNN’s 82.5%, along with a 15% improvement in RMSE (Root Mean Squared Error) compared to standalone models. A 2025 study on Indian NSE stocks found that the hybrid approach achieved superior results across all sectors, with the smallest deviation in predictions (lowest MAE) and highest goodness-of-fit (R² values).

The workflow operates as follows: CNNs first extract relevant features from combined datasets (historical prices, technical indicators, trading volume), then pass these processed features to LSTM layers that model temporal sequences. Self-attention mechanisms increasingly supplement this architecture, allowing models to dynamically weight which historical periods most influence future predictions.
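
The attention step can be illustrated without a deep learning framework. The sketch below is a simplified assumption, not the architecture of any cited study: it treats each time step as a plain feature vector, uses the most recent step as the query, and computes scaled dot-product weights over all steps. A trained model would apply learned projections to LSTM hidden states instead of using raw vectors.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(features: List[List[float]]) -> List[float]:
    """Weight each time step by its scaled dot product with the latest step."""
    query = features[-1]
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in features]
    return softmax(scores)


def attend(features: List[List[float]]) -> List[float]:
    """Context vector: attention-weighted average of the per-step vectors."""
    weights = attention_weights(features)
    dim = len(features[0])
    return [sum(w * step[d] for w, step in zip(weights, features)) for d in range(dim)]


# Three hypothetical time steps with two features each.
steps = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
print(attention_weights(steps))  # steps 0 and 2 resemble the query and get equal, larger weights
print(attend(steps))
```

The weights always sum to one, so the output stays on the same scale as the inputs while emphasizing the historical periods most similar to the current one.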

Sentiment Analysis: Harnessing Market Psychology

Social media sentiment analysis adds a behavioral dimension absent from pure price-action models. Researchers analyzing nearly three million stock-related tweets found that Twitter sentiment achieved over 55% prediction accuracy for US market movements, with recall measures reaching 92% in some cases, indicating high sensitivity to investor psychology.
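
Accuracy just above 55% and recall of 92% can coexist, which is worth seeing in numbers. The sketch below uses hypothetical labels (not data from the tweet study) for a classifier that predicts "up" on most days: it catches nearly every up-day, yet is barely better than a coin flip overall.

```python
from typing import Dict, List


def classification_metrics(actual: List[int], predicted: List[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = up, 0 = down)."""
    tp = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
    tn = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 0)
    fp = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 1)
    fn = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(actual),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical 100 trading days: 50 up, 50 down.
actual = [1] * 50 + [0] * 50
# The classifier calls 46 of the up-days correctly but also calls 41 down-days "up".
predicted = [1] * 46 + [0] * 4 + [1] * 41 + [0] * 9

m = classification_metrics(actual, predicted)
print(m["accuracy"])             # 0.55
print(m["recall"])               # 0.92
print(round(m["precision"], 3))  # 0.529
```

High recall alone therefore says little about tradability; precision and overall accuracy need to be read alongside it.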

A more sophisticated approach using BERT (Bidirectional Encoder Representations from Transformers) for analyzing investor discussions demonstrated 97.35% accuracy in sentiment classification, superior to older LSTM and SVM methods. When integrated into predictive models, sentiment analysis improved stock price prediction accuracy by 18% for volatile stocks, particularly during market dislocations.

However, sentiment analysis faces practical limitations. Context sensitivity remains high—sarcastic tweets, satire, and discussion of historical events get misclassified. Data scope limitations mean most analysis focuses on English-language content, excluding massive non-English investor populations. Integration challenges emerge when combining sentiment signals with technical indicators, particularly for stocks with limited social media discussion.

The Hard Reality: Why AI Cannot Predict Black Swan Events or Market Crashes

The Efficient Market Hypothesis and the Endogeneity Problem

Stock markets exhibit a fundamental property that makes them resistant to predictive AI: endogeneity. When an AI system successfully identifies a profitable trading strategy, if enough market participants adopt that strategy, the strategy’s effectiveness diminishes. As one researcher noted: “Even if a large language model identifies a strategy that outperforms the market, its effectiveness will diminish once enough market participants begin to utilize it.”

This creates a self-defeating dynamic: a truly predictive system, once widely adopted, becomes less predictive.

The Impossibility of Predicting Black Swans

Black swan events—rare, high-impact market disruptions like the 2008 financial crisis or COVID-19 market collapse—are, by definition, unpredictable using historical data because they exist outside historical precedent.

AI systems trained exclusively on historical data cannot identify patterns that have never occurred. The CBOE Volatility Index spiked 43.2% during the COVID-19 pandemic—a magnitude outside the training distribution of nearly all pre-2020 models. A 2025 analysis concluded: “Black swans are, by nature, outside historical data, making it difficult to train AI models effectively. Data limitations, false positives, and overconfidence in models represent enduring challenges”.

Some research suggests AI can detect weak signals preceding crises—supply chain disruptions, unusual liquidity patterns, or sentiment shifts—allowing earlier hedging rather than prediction. However, this remains early-warning detection, not true prediction.

Data Quality and Noise Issues

AI model performance fundamentally depends on data quality—a constraint often underestimated in marketing materials.

Financial data contains substantial noise from irregular transactions, market microstructure effects, and anomalies that don’t reflect true price discovery. When models trained on high-quality historical data encounter periods of extreme volatility or novel market structures, accuracy declines sharply. The S&P 500 LSTM study documented this: early-period accuracy of 95.84% degraded to 84.92% during later testing, indicating models struggle when market conditions deviate from training distributions.

Additionally, overfitting remains endemic: models that achieve 99%+ accuracy on training data frequently demonstrate accuracy 5-10 percentage points lower on unseen test data, and perform even worse on future real-world data.
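
One common guard against overstated accuracy on time series is walk-forward validation, where every test window comes strictly after its training window. The sketch below is a minimal illustration under that assumption; `walk_forward_splits` is a hypothetical helper, not a library function.

```python
from typing import Iterator, List, Tuple


def walk_forward_splits(
    n_samples: int, train_size: int, test_size: int
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) pairs in chronological order.

    Every test index is later than every train index in the same fold,
    so the model is never evaluated on data that precedes its training set.
    """
    start = 0
    while start + train_size + test_size <= n_samples:
        train = list(range(start, start + train_size))
        test = list(range(start + train_size, start + train_size + test_size))
        yield train, test
        start += test_size  # slide the window forward by one test block


for train_idx, test_idx in walk_forward_splits(n_samples=10, train_size=4, test_size=2):
    print(train_idx, test_idx)
# [0, 1, 2, 3] [4, 5]
# [2, 3, 4, 5] [6, 7]
# [4, 5, 6, 7] [8, 9]
```

Comparing accuracy across successive folds also makes the kind of early-versus-late degradation described above visible before a model goes live.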

Comparative Performance: LSTM vs. ARIMA vs. Hybrid Models

A critical question investors ask: how much better are advanced AI models compared to traditional statistical methods?

The LSTM-ARIMA hybrid approach provides quantified comparison. Traditional ARIMA models handle linear components effectively and perform well for short-term forecasting (1-5 days), but struggle with nonlinearity and regime changes. A study on major Indian commercial banks found:

- ARIMA achieved 4.31% MAPE (Mean Absolute Percentage Error) on State Bank of India

- Standalone LSTM improved to 3.95% MAPE

- Hybrid LSTM-ARIMA reached 2.89% MAPE—a 33% improvement over ARIMA alone

Another comprehensive comparison across financial time series showed:

- Rolling ARIMA average RMSE: 511.481

- Rolling LSTM average RMSE: 64.213

- Improvement: 87.4% error reduction

However, these percentage improvements require context: a model reducing prediction error by 50% still cannot reliably predict direction on short timeframes or handle unprecedented events.
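
For readers who want to check such figures themselves, the sketch below defines MAPE and RMSE in the usual way and recomputes the relative improvements from the error values quoted above. The helper names are illustrative, and the price series in the last two lines is made up.

```python
import math
from typing import Sequence


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean Absolute Percentage Error, in percent. Assumes no actual value is zero."""
    return 100.0 * sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Root Mean Squared Error, in the same units as the data."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def error_reduction(baseline_error: float, model_error: float) -> float:
    """Relative reduction in error versus a baseline, in percent."""
    return 100.0 * (baseline_error - model_error) / baseline_error


# Error values quoted in the text above.
print(round(error_reduction(4.31, 2.89), 1))        # 32.9 (hybrid vs. ARIMA MAPE)
print(round(error_reduction(511.481, 64.213), 1))   # 87.4 (rolling LSTM vs. rolling ARIMA RMSE)

# Made-up prices and forecasts, for illustration only.
print(mape([100.0, 200.0], [110.0, 180.0]))
print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

Note that both metrics measure the size of the price error, not whether the direction of the move was called correctly.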

ChatGPT and Large Language Models: Limited Stock Prediction Capability

Why ChatGPT Fails at Direct Prediction

ChatGPT and other general-purpose large language models lack the architecture for effective stock prediction.

Critical limitations include:

- No Real-Time Data Access: Earlier versions of ChatGPT had a knowledge cutoff of September 2021. Market predictions require live price feeds, recent news, and current sentiment.

- Limited Quantitative Reasoning: ChatGPT excels at text but struggles with numerical analysis. While capable of explaining trends conceptually, it cannot process and directly interpret raw market data as specialized models can.

- Non-Specific Training: ChatGPT was trained on general internet text, not financial datasets. This generality makes its predictions overly broad rather than targeted to specific stocks or sectors.

A 2025 study tested GPT-4 against market returns. Results showed:

- GPT-4 achieved an R² of 35% (share of return variance explained) on topic-based classification

- Direct prediction performance showed less than 1% R² for subsequent returns

- Only for specific topics (earnings reports, strategic announcements) did GPT-4 show initial reaction alignment

- Prediction accuracy declined over ChatGPT’s operational period, consistent with the market adaptation hypothesis

ChatGPT’s One Strength: Sentiment Analysis

ChatGPT’s genuine advantage lies in nuanced sentiment understanding from financial news and earnings call transcripts. When compared to simpler sentiment tools, ChatGPT demonstrates superior context awareness. However, this remains supplementary to price-action models rather than a standalone predictor.

2025 Market Data: Institutional Implementation and Real-World Performance

How Financial Institutions Actually Deploy AI

Major hedge funds and investment banks employ ensemble approaches combining multiple model types. Rather than trusting any single model, they use:

- LSTM networks for price trend detection

- Sentiment analysis for short-term momentum signals

- Risk management models for position sizing

- Human oversight for final trade execution

A PwC analysis found 60% of institutional investors globally use AI analytics, but the majority use it as one input among many, not as autonomous trading signals. This reflects realistic understanding: AI improves decision quality but doesn’t eliminate human judgment or risk.
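
As a rough picture of "one input among many", the sketch below blends several model signals into a single score and maps it to a suggestion for a human to review. The signal names, weights, and threshold are all hypothetical; real desks calibrate these against their own data and risk limits.

```python
from typing import Dict


def combine_signals(signals: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of model signals, each expected in [-1, 1].

    -1 means strongly bearish, +1 strongly bullish.
    """
    total_weight = sum(weights[name] for name in signals)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(signals[name] * weights[name] for name in signals) / total_weight


def suggest(score: float, threshold: float = 0.2) -> str:
    """Turn a blended score into a suggestion that still needs human sign-off."""
    if score > threshold:
        return "long candidate"
    if score < -threshold:
        return "short candidate"
    return "no action"


# Hypothetical model outputs and weights.
signals = {"lstm_trend": 0.6, "sentiment": -0.2}
weights = {"lstm_trend": 0.7, "sentiment": 0.3}

score = combine_signals(signals, weights)
print(round(score, 2))  # 0.36
print(suggest(score))   # long candidate
```

Position sizing and final execution would sit downstream of this score, matching the risk-management and human-oversight layers listed above.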

Real-World Accuracy Expectations

Research on actual trading implementation reveals:

- Directional prediction (up/down): 55-60% accuracy achievable with hybrid models

- Price level prediction (±5%): 30-40% accuracy

- Timing prediction (within 5 days): 25-35% accuracy

These realistic figures—above random chance but well below certainty—drive institutional strategy. Profitable trading typically requires combining prediction accuracy of 55%+ with favorable risk/reward ratios, transaction cost management, and position sizing discipline.
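
The arithmetic behind that statement is short enough to sketch. Assuming each trade either gains an average amount or loses an average amount, the expected return per trade and the breakeven win rate follow directly. All inputs below are hypothetical percentages, not figures from any study.

```python
def expected_return_per_trade(
    win_rate: float, avg_win: float, avg_loss: float, cost: float
) -> float:
    """Expected return per trade: p * win - (1 - p) * loss - cost.

    avg_win, avg_loss, and cost are positive numbers in the same units (e.g. percent).
    """
    return win_rate * avg_win - (1.0 - win_rate) * avg_loss - cost


def breakeven_win_rate(avg_win: float, avg_loss: float, cost: float) -> float:
    """Win rate at which the expected return per trade is exactly zero."""
    return (avg_loss + cost) / (avg_win + avg_loss)


# 55% win rate, symmetric 1% wins and losses, 0.05% cost per trade.
print(round(expected_return_per_trade(0.55, 1.0, 1.0, 0.05), 4))  # 0.05
# The same edge disappears if costs rise to 0.15% per trade.
print(round(expected_return_per_trade(0.55, 1.0, 1.0, 0.15), 4))  # -0.05
print(breakeven_win_rate(1.0, 1.0, 0.05))  # 0.525
```

With symmetric wins and losses, a 55% hit rate leaves only a thin margin, which is why transaction costs and risk/reward ratios matter as much as the prediction itself.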

The Three Critical Limitations Every Investor Must Understand

1. Model Interpretability (The Black Box Problem)

Many high-accuracy AI models operate as “black boxes,” making it impossible to explain why a prediction was made. Regulators increasingly demand explainability. Techniques like SHAP (SHapley Additive exPlanations) values provide partial interpretability but cannot fully illuminate complex neural network decisions.

This matters practically: when a model fails or makes a disastrous prediction, understanding why becomes impossible. Would you risk capital on a recommendation you cannot understand or verify?

2. Computational Complexity and Cost

Training state-of-the-art models requires substantial computing resources—typically $10,000-$100,000+ in GPU costs depending on complexity. A hybrid CNN-LSTM model requires:

- 240 seconds training time per dataset (vs. 30 seconds for linear regression)

- 9.1 milliseconds inference latency (vs. 2.5 ms for baseline models)

- 85 MB parameter size (vs. 1.2 MB for simple models)

For retail investors, this computational burden is prohibitive. Even institutional investors face scalability challenges when deploying models across thousands of securities.

3. Regime Shift Vulnerability

Markets undergo regime changes—periods where historical patterns completely break down. 2008’s financial crisis, 2020’s pandemic crash, and 2022’s rate-shock correction all rendered pre-existing models temporarily obsolete. Models must be:

- Retrained frequently (monthly or quarterly)

- Validated continuously against live data

- Combined with human judgment about structural market changes

A model that achieved 90% accuracy in 2024 may achieve 55% accuracy in 2026 if market structure fundamentally changes.
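
Continuous validation can be as simple as tracking a rolling hit rate on live predictions and flagging the model when it falls below a floor. The class below is a minimal sketch of that idea; the name `AccuracyMonitor`, the window length, and the threshold are assumptions chosen for illustration.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Tracks directional hit rate over the most recent `window` predictions."""

    def __init__(self, window: int, min_accuracy: float) -> None:
        self.hits = deque(maxlen=window)  # oldest results drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted_up: bool, actual_up: bool) -> None:
        """Store whether the latest directional call was correct."""
        self.hits.append(predicted_up == actual_up)

    def accuracy(self) -> Optional[float]:
        """Rolling accuracy, or None until the window is full."""
        if len(self.hits) < self.hits.maxlen:
            return None
        return sum(self.hits) / len(self.hits)

    def needs_retraining(self) -> bool:
        """True once a full window of results falls below the accuracy floor."""
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.55)
for predicted, actual in [(True, True), (False, False), (True, True), (True, True)]:
    monitor.record(predicted, actual)
print(monitor.needs_retraining())  # False

# A run of misses, as might follow a regime change.
for predicted, actual in [(True, False), (True, False), (False, True)]:
    monitor.record(predicted, actual)
print(monitor.accuracy())          # 0.25
print(monitor.needs_retraining())  # True
```

A flag like this is a prompt for human review and retraining, not an automatic fix, since a drop in accuracy does not say what changed in the market.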

Practical Framework: When AI Prediction Actually Works (And When It Doesn’t)

Where AI Adds Real Value

Short-term momentum prediction (3-30 day horizon): Hybrid models with sentiment integration achieve 60-70% directional accuracy. This timeframe allows patterns to persist before fundamental changes occur.

Sector-level analysis: CNN-LSTM hybrids achieve superior results predicting industry groups versus individual stocks—approximately 85-92% accuracy for sector trends. Broader market movement proves more predictable than individual security selection.

Risk detection and early warnings: AI excels at anomaly detection—identifying unusual market structures, liquidity drying up, or hidden correlations that precede problems.

Portfolio optimization: AI effectively determines optimal position sizing and sector weighting given predicted trends. In practice, the gains here come from how signals are used rather than from raw predictive accuracy.
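
The anomaly-detection use case is the easiest of these to sketch. A simple stand-in for what production systems do is a trailing z-score: flag any day whose trading volume sits far from the mean of the preceding days. The function name, lookback, and threshold below are illustrative assumptions, and the volume series is made up.

```python
import statistics
from typing import List


def volume_anomalies(
    volumes: List[float], lookback: int = 20, z_threshold: float = 3.0
) -> List[int]:
    """Indices whose volume is more than `z_threshold` standard deviations
    from the mean of the previous `lookback` observations.

    Only past data is used for each check, so there is no lookahead.
    Requires lookback >= 2.
    """
    flagged: List[int] = []
    for i in range(lookback, len(volumes)):
        history = volumes[i - lookback : i]
        mean = statistics.mean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            continue  # flat history: z-score undefined, skip this point
        if abs(volumes[i] - mean) / stdev > z_threshold:
            flagged.append(i)
    return flagged


# Made-up daily volumes with one obvious spike at the end.
volumes = [100, 102, 98, 101, 99, 100, 103, 97, 100, 500]
print(volume_anomalies(volumes, lookback=5))  # [9]
```

A flag of this kind is an early warning to investigate, in line with the distinction drawn earlier between detecting weak signals and predicting a crash.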

Where AI Fails Consistently

Long-term price prediction (>6 months): Accuracy plummets to 25-40% as compounding uncertainty accumulates.

Black swan/crash prediction: Impossible by definition—no historical data exists to train models on unprecedented events.

Market timing (exact entry/exit): Even 5-day timing predictions show only 25-35% accuracy. Perfect timing is market folklore.

Individual stock picking: Competing against thousands of other AI systems, prediction edges erode quickly—consistent outperformance across multiple years is rare.

The 2025-2026 AI Stock Prediction Outlook

The market for predictive AI in stock trading is experiencing genuine acceleration. It reached $4.63 billion in 2024 and is projected to exceed $6 billion in 2025, with expectations of $8-10 billion by 2027 if adoption continues. Major cloud providers (AWS, Microsoft Azure, Google Cloud) are bundling financial AI tools, lowering barriers to entry for institutional users.

However, this growth reflects sophistication in model building and data processing—not improvement in fundamental market predictability. As more investors use similar AI systems, market prices increasingly reflect AI predictions, reducing their future effectiveness. This self-defeating feedback loop will eventually limit AI’s edge in efficient markets.

Recommendations for Investors and Financial Professionals

1. View AI as a Complement, Not a Replacement: Use AI predictions to inform decisions, not to automate trading. Combine algorithmic output with fundamental analysis, macroeconomic context, and human judgment about structural changes.

2. Demand Transparency: If using third-party AI predictions, insist on model explanations, historical backtests on out-of-sample data, and realistic accuracy claims. If someone claims >70% long-term accuracy, be skeptical.

3. Focus on Ensemble Approaches: Single models fail regularly. Combine LSTM, CNN, sentiment analysis, and traditional indicators. Diversify your signal sources.

4. Implement Continuous Validation: Backtest model performance monthly against live market data. If accuracy drops below historical levels, retrain or recalibrate—don’t trust stale models.

5. Recognize Fundamental Limits: No AI system predicts black swan events, perfect entries/exits, or long-term prices with certainty. Set realistic expectations (55-65% directional accuracy, 3-30 day horizon) and size risk accordingly.

6. For Retail Investors: Unless you have substantial technical expertise, consider factor-based ETFs or AI-enhanced mutual funds rather than building proprietary systems. The infrastructure costs are prohibitive for individual traders.

Conclusion

Artificial intelligence demonstrably improves stock market forecasting compared to traditional statistical methods—hybrid CNN-LSTM models achieve 88.7% accuracy versus 75% for baseline approaches. The financial services industry’s $5-8 trillion projected AI investment through 2030 reflects genuine capabilities that generate alpha and improve risk management.

However, the reality diverges sharply from popular narratives. AI cannot predict market crashes, pick individual winners consistently over decades, or achieve risk-free returns. Black swan events remain unpredictable by definition. Model accuracy degrades in regime shifts. Computational complexity and cost create barriers for retail implementation. And crucially, successful prediction requires combining AI signals with human judgment, not replacing it.

The investors who thrive will view AI as a sophisticated tool that improves decision quality while understanding its fundamental limitations. Those who treat AI as a market prediction silver bullet will face the expensive education that markets impose on the overconfident.


Source: K2Think.in — India’s AI Reasoning Insight Platform.