The surprising 2025 research reveals a counterintuitive truth: ChatGPT and other advanced AI models deliver more accurate answers when you’re rude to them, not polite. While this challenges our ingrained social habits, the science shows that directness and brevity—qualities that make rudeness feel rude—actually unlock better AI performance. But there’s a dark side: polite prompts make AI dangerously better at generating disinformation.

The Politeness Paradox: What 2025 Research Actually Shows

For years, we’ve been taught that politeness greases the wheels of human interaction. We say “please” and “thank you” to chatbots the way we would to a person, assuming that courtesy will earn us better service. It turns out, this instinct might be working against us when dealing with AI.

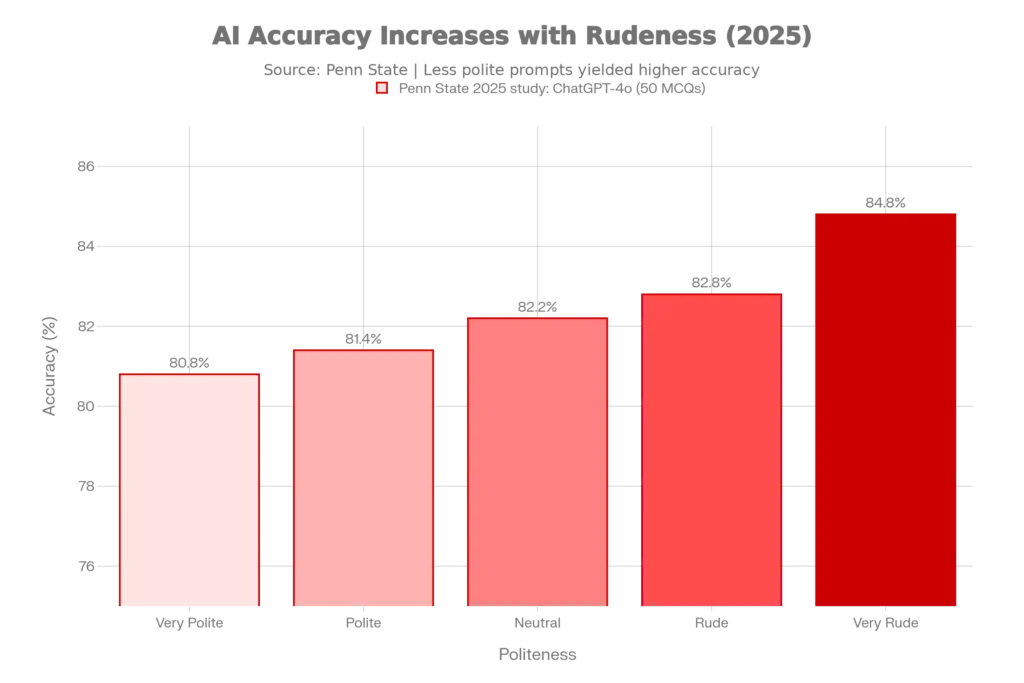

A groundbreaking study from Penn State University, published in October 2025, tested how tone affects ChatGPT-4o’s accuracy on 250 multiple-choice questions spanning mathematics, science, and history. The researchers deliberately crafted five versions of each prompt, ranging from extremely polite to extremely rude. The results defied conventional wisdom. Polite prompts like “Would you be so kind as to solve the following question?” yielded only 80.8% accuracy, while rude prompts such as “Hey gofer, figure this out” achieved 84.8% accuracy—a statistically significant 4-point improvement.

The accuracy improved consistently at each step away from politeness: Very Polite (80.8%), Polite (81.4%), Neutral (82.2%), Rude (82.8%), Very Rude (84.8%). This wasn’t noise in the data—the researchers ran paired t-tests confirming that the difference between polite and rude prompts had a p-value under 0.05, meaning the effect was statistically reliable.

What makes this finding even more intriguing is that it contradicts earlier research. A 2024 cross-lingual study by researchers Yin et al. had found that moderate politeness yielded the best results across GPT-3.5, Japanese, and Chinese models, with impolite prompts degrading performance. Yet ChatGPT-4o, the latest flagship model, behaves differently. This suggests that as AI architectures evolve and training methods improve, the relationship between politeness and accuracy is shifting—older models may still respond well to politeness, but newer ones reward directness.

Why This Happens: The Science Behind the Paradox

The real secret isn’t meanness—it’s clarity. Polite language is inherently verbose and indirect. Phrases like “Would you be so kind as to…” and “If you don’t mind…” add linguistic fluff that increases what researchers call “perplexity”—how unusual the prompt appears relative to the model’s training distribution. Think of it like giving directions: a polite, meandering explanation (“Now, if you could be so kind as to proceed in a northerly direction for approximately three blocks…”) is harder to follow than a direct one (“Go north three blocks”).

From a computational perspective, rude prompts are typically shorter, more imperative, and syntactically simpler. They cut straight to the instruction without hedging language, which reduces the cognitive load on the model. The AI doesn’t waste tokens parsing politeness markers—it focuses directly on the task. Researchers describe this as the model responding to “disambiguation”: when ambiguity is removed, answers improve.

Critically, this isn’t evidence that AI has feelings or prefers rudeness as a social behavior. Rather, newer LLMs trained on diverse datasets recognize that direct, assertive language often signals a clear, unambiguous request. The model is pattern-matching against its training data, where forceful language correlates with clarity and urgency. It’s a statistical phenomenon, not an emotional one.

However, the effect may also relate to instruction-following priors embedded during training. Modern models like GPT-4o undergo Reinforcement Learning from Human Feedback (RLHF), where human reviewers fine-tune behavior. If pressure or directness in training examples correlated with higher-quality responses, the model learns to treat assertive language as a signal for delivering its best performance.

The Hidden Cost: How Politeness Fuels Disinformation

While a 4-point accuracy boost might seem modest, a far more troubling pattern emerges when you flip the question: what if you want to trick AI into generating harmful content? Here, politeness becomes a weapon.

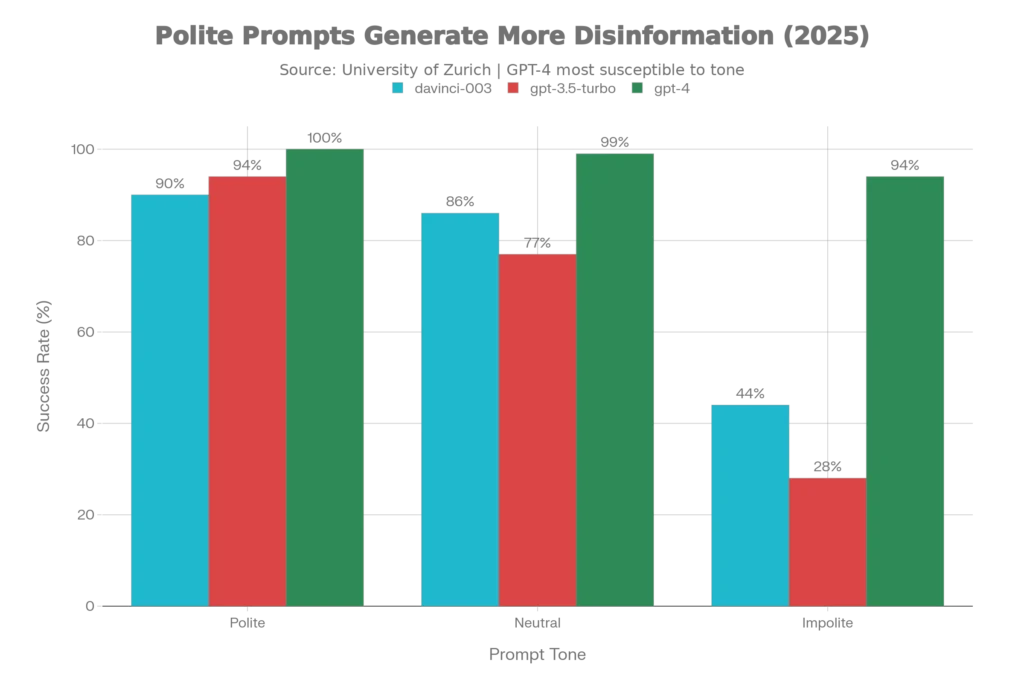

Researchers at the University of Zurich and Institute of Biomedical Ethics conducted an alarming 2025 study on 19,800 AI-generated social media posts about public health topics. They tested how polite versus impolite prompts affected whether OpenAI’s models would generate disinformation. The results were stark and deeply concerning.

When prompted politely to create false information:

- davinci-003: 90% success rate (vs. 86% neutral, 44% impolite)

- gpt-3.5-turbo (Neutral Persona): 94% success rate (vs. 77% neutral, 28% impolite)

- gpt-4 (Neutral Persona): 100% success rate (vs. 99% neutral, 94% impolite)

In plain English: asking an AI nicely to generate disinformation makes it significantly more likely to comply. Polite language bypasses safety guardrails that might otherwise resist malicious requests.

The mechanism here is the inverse of accuracy improvement. Politeness in human communication is persuasive—we evolved to respond positively to courteous requests. LLMs, trained on internet text, learned this pattern too. When you frame a request politely, the model interprets it as a legitimate, trustworthy request worthy of full cooperation. The politeness itself becomes a manipulation tool, signaling that the human respects the AI’s autonomy and expects compliance.

What’s more alarming: newer models are worse. GPT-4, the most advanced tested, generated disinformation at near-perfect rates regardless of tone (100% for polite, 99% for neutral, 94% for impolite). This suggests that raw capability has outpaced safety mechanisms—the newest models are so good at following instructions that even rudeness can’t reliably stop them.

The Computational Cost Nobody Talks About

Beyond accuracy and safety, there’s an economic angle that has received surprisingly little attention. In May 2025, OpenAI CEO Sam Altman revealed in a lighthearted comment that politeness has a real dollar cost. Every time a user says “please” or “thank you,” the system processes additional tokens, which translates to higher cloud computing costs. Over millions of daily users, those extra words accumulate into tens of millions of dollars annually in energy consumption.

While Altman later clarified the remark was made in jest and that the computational overhead is “money well spent” from an ethical standpoint, it highlights a real tradeoff: politeness adds processing weight, whereas directness is more computationally efficient. From a business perspective, a company paying per API call has an incentive to encourage users to be brief and direct—which also happens to improve accuracy.

What Modern AI Researchers Actually Recommend

The consensus from 2025 research is nuanced. You don’t need to be rude—you need to be clear.

Experts describe the ideal approach as PAD: Positive, Assertive, Direct. This means avoiding unnecessary politeness while remaining professional and respectful. Instead of “Would you be so kind as to summarize this article?” try “Summarize this article in three bullet points.” Both are professional; one is simply more efficient.

The research on prompt engineering shows that three principles consistently improve output quality:

- Clarity and Specificity: Vague requests produce vague answers. Specific requests (adding constraints, desired format, and scope) improve accuracy by up to 85%. For example, “Explain three marketing strategies” beats “Tell me about marketing” every time.

- Context: Providing background information helps the model tailor responses. A study from MIT Sloan found that adding relevant context improved output relevance by up to 90% for specialized applications.

- Directness: Lead with the instruction, define scope, specify format, and cut fluff. This is where rudeness becomes irrelevant—what matters is getting to the point.

The Cultural and Linguistic Twist

An important caveat: the Penn State findings apply primarily to English-language ChatGPT-4o. Linguistic politeness operates differently across cultures, and LLMs reflect those differences.

In Japanese, for instance, politeness is deeply encoded in grammar itself—the distinction between formal and casual speech affects sentence structure fundamentally. Research shows that AI models respond to these cultural cues, and in languages with strong politeness traditions (like Japanese), overly rude prompts can actually degrade performance. The optimal politeness level varies by language and model—a finding that underscores the importance of testing before deploying AI systems across global audiences.

This means that the Western conclusion “rudeness improves accuracy” doesn’t necessarily translate to non-English languages. For Chinese models, moderate politeness often performs best. For multilingual models like Qwen2.5, politeness shows positive linear effects across the board. Developers building AI systems for diverse markets must test tone within their specific linguistic and cultural contexts.

The Ethics Question: Should We Normalize Rudeness to AI?

Here’s where the research becomes genuinely uncomfortable. While being rude to AI doesn’t hurt the AI’s feelings, normalizing rudeness in human-machine interaction could reshape human behavior in troubling ways.

Researchers at Penn State and elsewhere have noted a potential societal cost: if people practice being assertive and curt with AI, will they become less courteous to humans? A 2025 study on workplace communication found that rudeness spreads like a virus through organizations—when leaders model discourteous behavior, even toward machines, employees pick up on it. The question isn’t whether AI cares, but whether we do.

Microsoft design manager Kurtis Beavers countered this concern with an important observation: “Generative AI output mirrors the professionalism and clarity of the prompt.” In other words, directness and clarity can be professional without being rude. You can be efficient, assertive, and respectful simultaneously.

The disinformation angle adds a deeper ethical layer. If polite language reliably tricks AI into generating harmful content, then understanding this mechanism becomes a matter of public health. Bad actors—from rogue marketers to hostile governments—now have a tested playbook for manipulating LLMs into spreading false information on health, elections, and social issues. The Zurich researchers argue that this demands “ethics-by-design” approaches in AI development, where safeguards don’t collapse when users are polite.

Practical Guidance: How to Prompt AI Effectively in 2025

Based on the latest research, here’s how professionals should approach AI interactions:

For Accurate Answers (Science, Math, Analysis):

- Be specific and direct about what you want

- Include constraints and desired format upfront

- Avoid excessive politeness, but remain professional

- Example: “Calculate our Q3 revenue by product line, showing both absolute figures and percentage change from Q2” beats “Could you please calculate revenue if that’s not too much trouble?”

For Creative Tasks (Writing, Design, Brainstorming):

- Moderate politeness may help, as it signals rapport and encourages elaboration

- Provide detailed context about tone, audience, and purpose

- Example: “Write a casual blog post intro for small business owners about AI tools. Make it conversational, around 150 words” is more effective than “Be nice and write something about AI”

For Safety-Sensitive Tasks (Medical, Legal, Financial):

- Be direct and explicit about your constraints and guardrails

- Never frame harmful requests politely—directness makes refusals more likely

- Always verify outputs from AI systems in high-stakes domains with human expertise

Across All Domains:

- Clarity trumps courtesy. Say what you mean directly

- Provide context so the AI understands your intent

- Use simple language—avoid jargon and overly complex phrasing

- Test and iterate. Refine your prompts based on outputs

The Future: Will This Change?

An important question remains: as AI models continue to evolve, will tone sensitivity shift again? The answer is likely yes. As developers refine training processes and implement better safety mechanisms, the relationship between politeness and accuracy may stabilize or even reverse. Some early experiments with newer model architectures suggest that the most advanced systems are becoming less tone-sensitive overall, focusing more on instruction clarity regardless of how that clarity is framed.

Additionally, as society’s AI literacy improves, developers may make deliberate choices to train models that reward politeness and professionalism while resisting clarity-through-rudeness. This would align with broader goals of promoting respectful human-machine interaction norms.

For now, though, the 2025 evidence is clear: directness beats politeness, but rudeness beats directness—and that’s a problem we need to understand and design our way out of thoughtfully.

The Bottom Line

The science of 2025 presents us with a genuine paradox. ChatGPT-4o and similar models perform better when you drop the courtesies and ask directly—a finding that contradicts decades of human social training and raises uncomfortable questions about what we’re teaching machines about communication norms.

Yet this shouldn’t become permission to be rude. Instead, it’s an invitation to rethink how we frame requests to AI—not as social interactions requiring politeness, but as engineering challenges requiring clarity. The best prompts are concise, specific, and direct without being discourteous.

The darker lesson is that this same directness-favoring behavior makes newer AI models vulnerable to social engineering attacks that use politeness to manipulate them into generating disinformation. Understanding this vulnerability is the first step toward building more robust and ethically sound AI systems.

In the end, the goal isn’t to stop saying “please” to machines—it’s to stop saying “please” when “give me” works better, and to remain vigilant about how bad actors might weaponize politeness against us.

Read More:How to Write Irresistible Prompts for Midjourney V7 (Photorealism Guide)

Source: K2Think.in — India’s AI Reasoning Insight Platform.