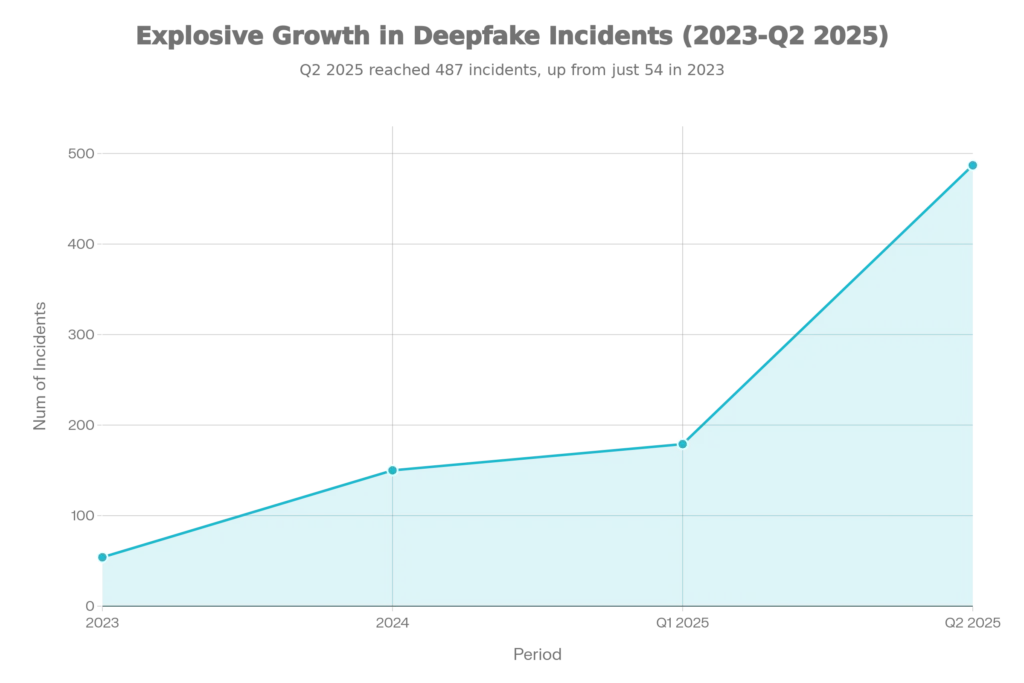

As artificial intelligence advances at an unprecedented pace, the threat of deepfake news videos has become one of the most pressing challenges to digital authenticity and public trust. Deepfakes—videos created or manipulated using advanced AI technology—can convincingly replicate real people’s faces, voices, and movements, making it increasingly difficult to distinguish fiction from reality. The statistics are alarming: in Q2 2025 alone, 487 deepfake incidents were reported, representing a 312% year-over-year increase, and deepfake-related financial losses have exceeded $1.56 billion since 2019. This article provides you with practical, evidence-based methods to identify AI-generated news videos and protect yourself from digital deception.

The Deepfake Crisis: A Growing Threat to Information Integrity

Deepfake incidents have risen at an alarming rate. In 2023, there were only 54 documented deepfake incidents. By 2024, this had jumped to 150 incidents, which by these figures is an increase of roughly 178%. However, the real surge occurred in 2025: the first quarter alone saw 179 incidents, surpassing the entire year of 2024 by 19%. The second quarter continued the trend with 487 discrete deepfake incidents recorded, nearly triple the first-quarter count.
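The growth figures can be checked with a few lines of arithmetic. The sketch below uses only the incident counts quoted above; the function and variable names are illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage change from old to new (positive means growth)."""
    return (new - old) / old * 100.0


# Incident counts quoted in the paragraph above.
incidents_2024 = 150
incidents_q1_2025 = 179
incidents_q2_2025 = 487

# How far Q1 2025 alone exceeded the whole of 2024.
q1_vs_2024 = percent_increase(incidents_2024, incidents_q1_2025)

# How many times larger Q2 2025 was than Q1 2025.
q2_vs_q1_ratio = incidents_q2_2025 / incidents_q1_2025

print(f"Q1 2025 vs all of 2024: +{q1_vs_2024:.1f}%")
print(f"Q2 2025 is {q2_vs_q1_ratio:.2f}x Q1 2025")
```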

The financial consequences have been equally severe. Deepfake-driven fraud caused about $200 million in losses in Q1 2025 and approximately $410 million across the first half of the year, bringing the cumulative total since 2019 to nearly $900 million. More recent data indicates that cumulative losses had surpassed $1.56 billion by late 2025, with over $1 billion of that occurring in 2025 alone.

The breakdown of victims is sobering. Of the roughly $900 million total, individuals account for 60% of losses (approximately $541 million), while businesses account for 40% ($356 million). The most common deepfake fraud activity involves impersonating famous people to promote fraudulent investments, resulting in $401 million in losses. The second most prevalent method is impersonating company executives to trigger fraudulent wire transfers, accounting for $217 million in losses.

What makes this crisis particularly concerning is how quickly the technology has become accessible. Creating a sophisticated deepfake now takes as little as three minutes with a computer, and the tools cost only a few dollars to operate. Generative AI models like Sora (OpenAI), Runway Gen-4, Kling AI, and others have made deepfake creation possible for anyone with basic technical knowledge.

Real-World Examples: Deepfakes in Action

Recent incidents demonstrate just how convincing and damaging these videos can be. In October 2025, during Ireland’s presidential election, a Facebook account impersonating RTÉ News (Ireland’s public service broadcaster) posted a sophisticated deepfake video claiming the presidential election had been cancelled. The video featured realistic facial expressions, perfectly synced audio, correct spelling, authentic-looking RTÉ newsroom branding, and even a ticker with constituency results. It was convincing enough to fool many viewers before Meta removed it, and that happened only after election monitoring organizations escalated the account. Notably, Meta’s own automated tools failed to detect the manipulated content despite its explicitly political nature.

India’s 2024 general election also witnessed a surge in deepfake disinformation. Two viral deepfake videos showed Bollywood actors Ranveer Singh and Aamir Khan supposedly campaigning for the opposition Congress party. Both actors filed police complaints, stating that their likenesses had been used without consent. Meanwhile, Prime Minister Narendra Modi expressed concerns about deepfakes being used to distort speeches by senior BJP leaders, including his own remarks.

These aren’t isolated incidents. A 2024 report by cybersecurity firm Recorded Future documented 82 pieces of AI-generated deepfake content targeting public figures across 38 countries in a single year, with a disproportionate number focused on elections.

How to Spot AI-Generated News Videos: The Essential Visual Clues

While deepfakes continue to improve, they still leave detectable traces. Here are the most reliable visual indicators to identify manipulated video content.

1. Unnatural Eye Movements and Blinking Patterns

The Science: Human eye blinking is an involuntary, natural action that occurs 15-20 times per minute, with each blink lasting approximately 0.1 to 0.4 seconds. Blinking patterns vary greatly based on genetics, muscle tone, cognitive workload, age, emotional state, and alertness. Deepfake creators struggle to replicate this natural randomness because the factors influencing blink frequency are highly individualized and complex.

What to Watch For:

- Abnormal blinking frequency (either too frequent or suspiciously absent)

- Blinks that last unusually long or appear jerky

- Eye movements that don’t follow natural patterns—deepfakes often exhibit robotic or off-center stares

- Missing the natural micro-expressions that accompany genuine blinking

- Eyes that remain perfectly centered without the natural gaze movement of real conversation

Research Finding: A study using a Long-term Recurrent Convolutional Network (LRCN), a hybrid deep learning architecture that combines recurrent neural networks with convolutional neural networks, achieved 85% accuracy in detecting deepfakes based solely on eye-blinking pattern analysis. This suggests that eye behavior is a useful detection marker, although at that accuracy it should be weighed together with other cues rather than treated as proof.
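As a rough illustration of the blink cues above, the sketch below counts blinks in a per-frame eye-openness series and compares them with the ranges quoted in this section (15 to 20 blinks per minute, 0.1 to 0.4 seconds each). The openness values, the 0.2 "closed" threshold, and the function names are assumptions made for illustration. This is not the LRCN model from the study, which learns blink patterns from video directly.

```python
from typing import Dict, List


def find_blinks(openness: List[float], fps: float,
                closed_below: float = 0.2) -> List[float]:
    """Return the duration in seconds of each run of 'closed' frames.

    openness: one eye-openness value per frame (hypothetical input,
    e.g. produced by a face-tracking library).
    closed_below: assumed threshold under which the eye counts as closed.
    """
    durations = []
    run = 0
    for value in openness:
        if value < closed_below:
            run += 1
        elif run:
            durations.append(run / fps)
            run = 0
    if run:  # clip ended while the eye was still closed
        durations.append(run / fps)
    return durations


def blink_report(openness: List[float], fps: float) -> Dict[str, object]:
    """Summarise blink rate and flag blinks outside 0.1 to 0.4 seconds."""
    durations = find_blinks(openness, fps)
    minutes = len(openness) / fps / 60.0
    rate = len(durations) / minutes if minutes else 0.0
    odd = [d for d in durations if not (0.1 <= d <= 0.4)]
    return {
        "blinks_per_minute": rate,
        "rate_in_typical_range": 15 <= rate <= 20,
        "odd_duration_blinks": len(odd),
    }


# Synthetic one-minute clip at 30 fps with a 0.2 s blink every 100 frames.
fps = 30.0
natural_clip = [0.3] * 1800
for start in range(50, 1800, 100):
    for i in range(start, start + 6):
        natural_clip[i] = 0.05

# Synthetic clip in which the eyes never close.
staring_clip = [0.3] * 1800

natural_report = blink_report(natural_clip, fps)
staring_report = blink_report(staring_clip, fps)
print(natural_report)
print(staring_report)
```

A clip that falls outside the typical range is only a reason to look closer, since real blink rates vary widely from person to person.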

2. Facial Inconsistencies and Landmark Distortions

The Technical Background: Deepfake creation relies heavily on facial landmark extraction—the identification of key facial features like eye corners, nose tip, mouth corners, and jaw outline. These landmarks are aligned to a standard face configuration before manipulation occurs. However, research shows that coordinated motion patterns among facial landmarks vary significantly between real and fake videos.

What to Watch For:

- Asymmetrical facial features (one eye appearing slightly different from the other, uneven eyebrows)

- Inconsistencies in facial symmetry across different frames

- Wrinkles or skin texture that appears artificially smooth or unrealistic

- Teeth that appear unusually perfect or lack natural wear and variation

- Skin tones that don’t match between the face and neck/ears

- Hair that appears too uniform without natural flyaways or imperfections

- Subtle shifts in facial proportions between frames

Landmark Detection Science: Advanced detection models analyze the relative positions and movements of facial landmarks across video frames. Real faces maintain consistent spatial relationships between landmarks, while deepfakes created through generative methods show detectable deviations. Research using facial landmark analysis achieved accuracy rates exceeding 89% in detecting manipulated images.
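To make the idea of "consistent spatial relationships" concrete, here is a minimal sketch that tracks one facial proportion across frames and measures how much it jitters. The landmark names, the coordinates, and the choice of ratio are hypothetical. Real detectors use many more landmarks and learned models, and must also account for head rotation, which changes this ratio legitimately.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Frame = Dict[str, Point]


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two 2D landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def proportion_jitter(frames: List[Frame]) -> float:
    """Coefficient of variation of (eye distance / nose-to-chin distance).

    The ratio is unaffected by zoom and translation, so for a face held
    at a steady angle it should stay nearly constant between frames.
    """
    ratios = [
        distance(f["left_eye"], f["right_eye"])
        / distance(f["nose_tip"], f["chin"])
        for f in frames
    ]
    return pstdev(ratios) / mean(ratios)


def make_frame(scale: float = 1.0, dx: float = 0.0, dy: float = 0.0,
               chin_y: float = 100.0) -> Frame:
    """Build a synthetic frame of landmarks (illustrative values only)."""
    base = {
        "left_eye": (30.0, 40.0),
        "right_eye": (70.0, 40.0),
        "nose_tip": (50.0, 60.0),
        "chin": (50.0, chin_y),
    }
    return {name: (x * scale + dx, y * scale + dy)
            for name, (x, y) in base.items()}


# Same face, only zoomed and shifted: proportions stay fixed.
steady_clip = [make_frame(1.0), make_frame(1.1, 5.0, -3.0),
               make_frame(0.9, -4.0, 2.0)]

# Chin position drifts relative to the rest of the face.
drifting_clip = [make_frame(chin_y=100.0), make_frame(chin_y=108.0),
                 make_frame(chin_y=95.0)]

steady_jitter = proportion_jitter(steady_clip)
drifting_jitter = proportion_jitter(drifting_clip)
print(f"steady: {steady_jitter:.4f}, drifting: {drifting_jitter:.4f}")
```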

3. Audio-Visual Synchronization Mismatches (Lip-Sync Problems)

The Challenge: Creating perfect lip synchronization is one of the most difficult aspects of deepfake creation. A 2024 research paper demonstrated that even state-of-the-art lip-sync synthesis engines fail to maintain perfect correspondence between spoken words and mouth movements.

What to Watch For:

- Mouth movements that don’t align perfectly with spoken words

- Lag between when lips move and when you hear the sound

- Unnatural pauses or hesitations in speech that don’t match the video

- Audio that seems polished but slightly out of sync

- Mismatched tone or vocal inflections between audio and visual mouth movements

- Words that seem to end slightly before the lips stop moving (or vice versa)

Detection Accuracy: Research presented at CVPR 2024 described a technique that compares an audio-to-text transcription with a video-to-text (lip reading) transcription of the same clip. This method achieved 98% detection accuracy for lip-sync deepfakes while misidentifying only 0.5% of authentic videos as fake. The technique works because even if audio and video appear synchronized to the human eye, the semantic content often differs when the two streams are analyzed separately by AI systems.
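The comparison step can be sketched as follows, assuming the two transcripts have already been produced by a speech recognizer and a lip-reading model (neither is shown here). The word-level edit distance and the function names are choices made for this example, not the scoring used in the research.

```python
from typing import List


def word_edit_distance(a: List[str], b: List[str]) -> int:
    """Levenshtein distance counted in whole words."""
    prev = list(range(len(b) + 1))
    for i, word_a in enumerate(a, 1):
        curr = [i]
        for j, word_b in enumerate(b, 1):
            cost = 0 if word_a == word_b else 1
            curr.append(min(prev[j] + 1,          # delete a word
                            curr[j - 1] + 1,      # insert a word
                            prev[j - 1] + cost))  # substitute a word
        prev = curr
    return prev[-1]


def transcript_mismatch(audio_text: str, lip_text: str) -> float:
    """Fraction of words that differ between the two transcripts (0 to 1)."""
    a = audio_text.lower().split()
    b = lip_text.lower().split()
    if not a and not b:
        return 0.0
    return word_edit_distance(a, b) / max(len(a), len(b))


# Hypothetical transcripts: what was heard versus what the lips formed.
heard = "the election has been cancelled"
lip_read = "the election will go ahead"

matching = transcript_mismatch(heard, heard)
conflicting = transcript_mismatch(heard, lip_read)
print(f"matching clip: {matching:.2f}, conflicting clip: {conflicting:.2f}")
```

Lip reading is error-prone even on authentic footage, so a practical system would need a tolerance threshold rather than expecting a mismatch of zero.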

4. Lighting and Shadow Inconsistencies

Why This Matters: Realistic lighting requires understanding the physics of light reflection, absorption, and shadow casting. While modern generative AI has improved dramatically, maintaining consistent lighting across a full video remains challenging.

What to Watch For:

- Shadows that don’t match the direction of light sources

- Uneven lighting on the face—one side brighter than the other without clear reason

- Shadows that appear in unnatural places or have soft edges where they should be sharp (or vice versa)

- Skin reflections that don’t correspond to visible light sources

- Inconsistent skin tone coloration between frames

- Odd coloration or color shifts from one frame to the next

- Lighting that doesn’t reflect the apparent environment (bright face in a dark room, or vice versa)

- Hair and facial features with unrealistic reflectance or specularity

Technical Basis: Frequency domain analysis using Fourier Transform can detect unnatural frequency patterns that result from generative upsampling in deepfakes.

5. Unnatural Body Movement and Gesture Patterns

The Problem: Natural human movement involves coordinated motion across multiple body parts with subtle variations. Deepfakes often fail to replicate this complexity convincingly.

What to Watch For:

- Jerky or stiff head movements that lack natural fluidity

- Uncoordinated gestures (head and body moving out of sync)

- Repeated or loop-like movements, especially of hands or arms

- Posture changes that appear unnatural or disjointed

- Micro-movements that appear disconnected (e.g., shoulder shrugs that don’t coordinate with arm movements)

- Movements that seem to repeat identically across multiple instances

- Lack of natural fidgeting or subtle adjustments real people make when speaking

6. Background Anomalies and Spatial Inconsistencies

What to Watch For:

- Blurring around the edges of the speaker’s face or body

- Background elements that appear distorted or pixelated

- Unnatural blending between the foreground (face/body) and background

- Objects in the background that appear to move unnaturally

- Changes in focus or depth of field that don’t make logical sense

- Backgrounds that appear slightly out of focus while the face remains sharp (or vice versa) in ways that defy camera physics

- Spatial inconsistencies where the person’s position relative to background elements changes illogically

The Audio Dimension: Detecting Deepfake Voices

Voice deepfakes deserve special attention because many people focus primarily on visual elements while ignoring audio cues.

Audio Red Flags

Recognizing Manipulated Audio:

- Uneven sentence flow or unnatural pacing

- Vocal inflections that sound robotic or emotionally flat

- Strange vocal tones or unusual phoneme pronunciation

- Background noise that seems artificially added or inconsistent

- Audio that sounds unusually polished or “too clean” in ways that seem unnatural

- Breathing patterns that are inconsistent or artificially placed

- Pitch variations that don’t align with emotional content

Statistics on Audio Deepfakes: Voice deepfakes have surged 680% in recent years. Research combining CNN (Convolutional Neural Networks) and LSTM (Long Short-Term Memory) networks achieved 98.5% accuracy in detecting audio deepfakes using the DEEP-VOICE dataset.

The Vishing Threat

A particularly alarming trend is voice-based phishing (vishing) using deepfake audio. Deepfake-enabled vishing attacks surged by 1,633% in Q1 2025 compared to Q4 2024. These attacks use cloned voices to impersonate company executives, bank officials, or family members to manipulate victims into revealing sensitive information or transferring funds. The median loss per vishing victim is approximately $1,400, though the largest reported single incident involved losses of $25 million.

Advanced Detection: What Researchers and AI Systems Can Identify

While humans can catch obvious deepfakes, sophisticated manipulations often require technological assistance. Here’s what advanced detection systems look for:

1. Frequency Domain Anomalies

Deepfakes created through generative upsampling leave detectable traces in the frequency domain. When analyzed using Fourier Transform—a mathematical technique that breaks images into frequency components—AI-generated content shows distinctly different frequency patterns compared to natural video.
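The sketch below shows the principle on a single row of pixel values: it computes a discrete Fourier transform and reports how much of the signal energy sits in the upper half of the spectrum. The synthetic rows, the 0.5 cutoff, and the function names are assumptions for illustration. Real detectors work on full 2D image spectra and learn their decision boundary from data.

```python
import cmath
import math
from typing import List


def dft_magnitudes(signal: List[float]) -> List[float]:
    """Magnitudes of the DFT bins from 0 up to the Nyquist frequency."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal)))
        for k in range(n // 2 + 1)
    ]


def high_frequency_share(signal: List[float],
                         cutoff_fraction: float = 0.5) -> float:
    """Share of non-DC energy found above the cutoff frequency."""
    energy = [m * m for m in dft_magnitudes(signal)[1:]]  # skip the DC bin
    total = sum(energy)
    if total == 0:
        return 0.0
    cutoff = int(len(energy) * cutoff_fraction)
    return sum(energy[cutoff:]) / total


n = 64
# A smooth brightness gradient, as a stand-in for natural image content.
smooth_row = [math.sin(2 * math.pi * t / n) for t in range(n)]

# The same row with an alternating pattern added, mimicking the kind of
# periodic artifact that upsampling can leave behind.
artifact_row = [v + 0.3 * (-1) ** t for t, v in enumerate(smooth_row)]

smooth_share = high_frequency_share(smooth_row)
artifact_share = high_frequency_share(artifact_row)
print(f"smooth: {smooth_share:.4f}, with artifact: {artifact_share:.4f}")
```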

2. Facial Micro-Expression Inconsistencies

Real humans display unconscious micro-expressions lasting 1/25th to 1/5th of a second. These involuntary expressions are very hard to replicate convincingly in deepfakes, which is why AI detection systems are trained to look for missing or distorted ones.

3. Temporal Inconsistencies

A breakthrough approach called ReStraV (Representation Straightening Video) analyzes how video trajectories behave in neural representation space. The research, published by Google DeepMind, demonstrates that real-world video trajectories become more “straight” in the neural representation domain, while AI-generated videos exhibit significantly different curvature and distance patterns. This method achieved 97.17% accuracy and 98.63% AUROC (Area Under the Receiver Operating Characteristic Curve) on the VidProM benchmark—substantially outperforming existing detection methods.
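One way to picture "straightness" is to measure the average turning angle along a sequence of per-frame embedding vectors. The sketch below is a simplified illustration of that single geometric idea, not the ReStraV method itself. The toy trajectories stand in for embeddings that a vision encoder would produce, and all names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def mean_curvature_degrees(trajectory: List[Vector]) -> float:
    """Average angle between consecutive displacement vectors.

    A value near 0 means the trajectory is close to a straight line;
    larger values mean it bends more from frame to frame.
    """
    steps = [[b - a for a, b in zip(p, q)]
             for p, q in zip(trajectory, trajectory[1:])]
    angles = []
    for u, v in zip(steps, steps[1:]):
        norm_u = math.sqrt(sum(x * x for x in u))
        norm_v = math.sqrt(sum(x * x for x in v))
        if norm_u == 0 or norm_v == 0:
            continue  # no movement, so the angle is undefined
        cosine = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
        cosine = max(-1.0, min(1.0, cosine))  # guard against rounding
        angles.append(math.degrees(math.acos(cosine)))
    return sum(angles) / len(angles) if angles else 0.0


# Toy embeddings moving in a constant direction.
straight_path = [[float(t), 2.0 * t, 0.5 * t] for t in range(6)]

# Toy embeddings that change direction at every step.
zigzag_path = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0], [3.0, 1.0], [4.0, 0.0]]

straight_curvature = mean_curvature_degrees(straight_path)
zigzag_curvature = mean_curvature_degrees(zigzag_path)
print(f"straight: {straight_curvature:.2f}, zigzag: {zigzag_curvature:.2f}")
```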

4. Cross-Modal Analysis

Sophisticated detection systems combine multiple modalities—analyzing audio, video, and metadata simultaneously. Research shows that multimodal approaches combining deep learning and ensemble fusion methods significantly outperform single-modality detection.
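A very simple form of fusion is a weighted average of the scores from each modality. The sketch below assumes each detector returns a "likely fake" score between 0 and 1. The modality names, scores, and weights are hypothetical, and real ensemble systems usually learn how to combine signals rather than using fixed weights.

```python
from typing import Dict


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality 'likely fake' scores in [0, 1].

    Modalities missing from scores are skipped and the remaining
    weights are renormalised.
    """
    used = {name: w for name, w in weights.items() if name in scores}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no overlapping modalities with non-zero weight")
    return sum(scores[name] * w for name, w in used.items()) / total


# Hypothetical weights and detector outputs for one clip.
weights = {"video": 0.5, "audio": 0.3, "metadata": 0.2}
clip_scores = {"video": 0.4, "audio": 0.9, "metadata": 0.7}

fused = fuse_scores(clip_scores, weights)

# The same clip when no metadata score is available.
fused_without_metadata = fuse_scores({"video": 0.4, "audio": 0.9}, weights)

print(f"fused: {fused:.3f}, without metadata: {fused_without_metadata:.4f}")
```

In this example the video score alone (0.4) looks unremarkable, but the audio and metadata signals pull the combined score higher, which is the practical benefit of looking at several modalities together.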

Detection Tools Available to the Public in 2025

You don’t need to be a technical expert to leverage AI-assisted deepfake detection. Several powerful tools are now available:

Sensity AI Platform

Accuracy: 95-98% detection rate

Features:

- Analyzes video, image, audio, and AI-generated text

- Multi-layer approach examining pixels, file structure, and voice patterns

- Provides forensic-grade reports with confidence scores and visual indicators

- Monitors over 9,000 sources for malicious deepfake activity in real-time

- Tracks over 500 high-risk sources for deepfake proliferation

- Results delivered within seconds

Google’s SynthID Detector

Features:

- Detects SynthID watermarks embedded in content generated by Google’s AI tools (Gemini, Imagen, Lyria, Veo)

- Over 10 billion pieces of content already watermarked with SynthID

- Works with images, audio, video, and text

- Cloud-based portal (currently available via waitlist)

- Specifies exact segments where watermarks are detected

- Universal language support

Important Limitation: SynthID only detects content that carries a SynthID watermark, which in practice means content generated by Google’s own AI models. Deepfakes created with other tools (Sora, Runway, Kling, etc.) do not carry that watermark and won’t be flagged.

InVID Verification Tools

InVID Verification Application:

- Web-based integrated toolset for journalists and fact-checkers

- Reverse video search on YouTube and a dedicated InVID repository

- Keyframe extraction and analysis

- Metadata examination (upload date, location, creator information)

- Logo detection and copyright verification

- Social media contextual analysis

- Forensic filtering on still images

InVID Verification Plugin:

- Browser extension for Chrome

- Real-time verification on Facebook and YouTube

- Keyframe fragmentation from multiple platforms (Instagram, TikTok, X/Twitter, DailyMotion)

- Image enhancement tools

- Metadata reading capabilities

Microsoft Video Authenticator

Microsoft Video Authenticator uses AI to analyze still photos and video frames, assigning a confidence score that indicates whether the content is likely to have been manipulated. It works by detecting subtle fading or blending at face boundaries.

The Reality Check: Critical Questions to Ask

Before sharing or believing a news video, ask yourself these essential questions:

Source Verification:

- Did the video come from a verified official account or reputable news organization?

- Is the account relatively new, or has it been active for years?

- Does the account have proper verification badges?

- Has the original content source confirmed this video’s authenticity?

Content Analysis:

- Is there manufactured urgency or emotional manipulation designed to make you share without thinking?

- Does the video serve a political or financial agenda?

- Has the story been independently verified by multiple credible news organizations?

- Are there alternative explanations for what you’re seeing?

Platform Context:

- Is the video being shared primarily on social media rather than official news channels?

- Are reputable fact-checkers or news organizations addressing this content?

- Has the video been flagged or labeled by the platform?

- Are the comments or engagement patterns suggesting skepticism?

The Statistics That Matter: What The Data Reveals

Recent research provides compelling evidence about deepfake proliferation and detection challenges:

- Growth Rate: Deepfake files surged from 500,000 in 2023 to a projected 8 million in 2025

- Fraud Escalation: Fraud attempts with deepfakes have increased by 2,137% over the last three years

- Spear Phishing: Deepfake spear-phishing attacks have surged over 1,000% in the last decade

- Election Impact: In 2024-2025, political deepfakes became a fixture in electoral discourse, with significant implications for democratic processes

- User Detection Capability: Manual detection accuracy ranges from 60% to 64% when untrained individuals try to identify deepfakes, indicating that most people cannot reliably detect sophisticated manipulations

- Model Accuracy: Detection models built on architectures such as VGG19 have been reported to achieve over 96% accuracy in identifying deepfakes created with StyleGAN2, StyleGAN3, and ProGAN

Legislation and Platform Responses

Governments and platforms are beginning to respond to the deepfake crisis. India’s Election Commission warned political parties against misusing AI to create deepfakes during the 2025 elections, requiring that AI-generated content be prominently labeled as “AI-Generated,” “Digitally Enhanced,” or “Synthetic Content.” The Indian government has also requested that tech companies obtain explicit permission before launching generative AI tools and has warned against AI-generated responses that could threaten electoral integrity.

However, the response remains inadequate. The Meta failure during Ireland’s 2025 election demonstrates that major platforms’ AI detection systems still miss sophisticated manipulations. Regulatory frameworks lag far behind technological capabilities, creating a dangerous window where deepfakes can proliferate before being removed.

Protecting Yourself: Practical Action Steps

Immediate Actions:

- Verify before sharing: Always check news videos through official sources and multiple credible news organizations before sharing

- Use detection tools: Run suspicious videos through Sensity AI or similar platforms

- Check metadata: Examine the video’s upload date, source channel, and engagement patterns

- Reverse image search: Use Google Lens or TinEye to find original sources

- Report suspicious content: Flag deepfakes on social media platforms

- Enable verification: Use platform verification features when available

Long-term Awareness:

- Stay skeptical: Approach sensational or emotionally charged videos with heightened scrutiny

- Follow trusted sources: Rely on verified news organizations with editorial standards

- Educate others: Share this information with family and colleagues

- Monitor updates: Deepfake technology and detection methods evolve rapidly—stay informed

- Support regulation: Advocate for AI labeling requirements and platform accountability

Conclusion: The Future of Digital Authentication

The deepfake crisis represents a fundamental challenge to information integrity in the digital age. With recorded deepfake incidents nearly tripling between the first and second quarters of 2025 and financial losses exceeding $1.5 billion, the stakes have never been higher. However, detection technology continues to advance: models now report accuracy rates of 96% to 98%, and tools like Google’s SynthID and Sensity AI are making detection capabilities more accessible to the general public.

The reality is that no single indicator definitively proves a video is a deepfake. Instead, accumulating evidence—unnatural eye movements, audio-visual mismatches, lighting inconsistencies, and behavioral anomalies—creates a comprehensive picture. When combined with platform verification features, official source confirmation, and AI detection tools, you can significantly reduce the risk of being fooled by manipulated content.

As artificial intelligence capabilities continue to advance, your best defense remains a combination of critical thinking, technological literacy, and healthy skepticism toward sensational content. In an era where seeing is no longer believing, verification has become more important than ever.


Source: K2Think.in — India’s AI Reasoning Insight Platform.