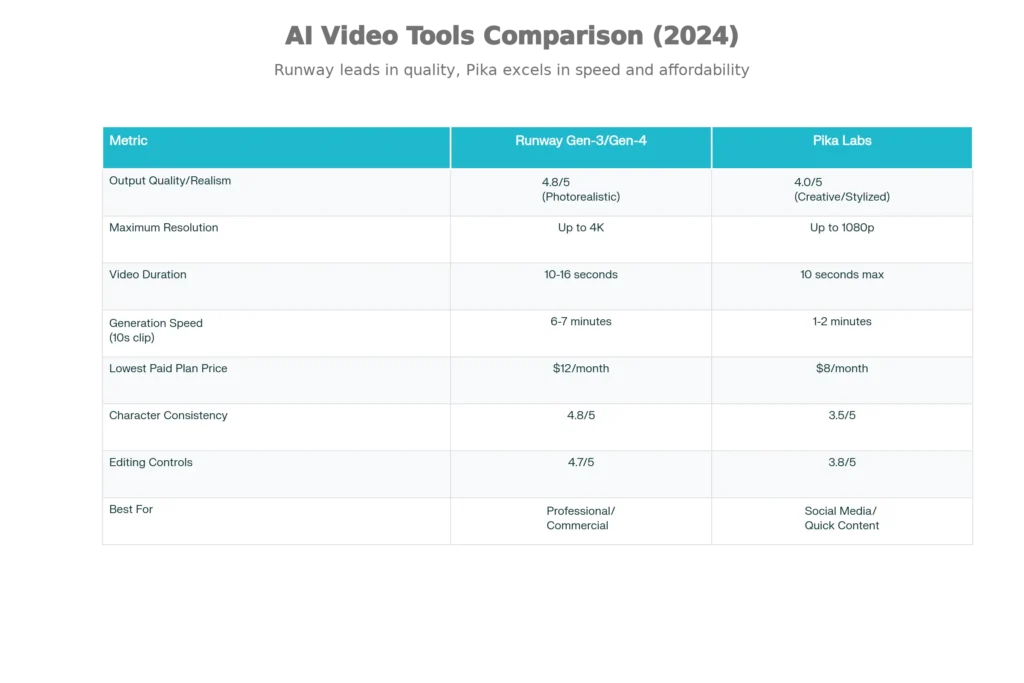

In 2025, the AI video generation landscape has matured dramatically, with Runway Gen-3 and Pika Labs emerging as the two most accessible platforms for creators. The question isn’t whether these tools work—it’s which one delivers the realism and control your project demands. Based on extensive testing and real-world performance data, Runway Gen-3 (now upgraded to Gen-4.5) dominates professional and cinematic work, while Pika Labs excels at speed and accessibility for social media creators. The critical difference: Runway prioritizes photorealistic, frame-perfect output; Pika prioritizes creative speed and affordability. This article breaks down exactly what each platform delivers, how they differ in the details that matter, and which to choose for your specific needs.

Understanding the Current Leaders in AI Video Generation

The evolution of AI video generation over the past 18 months has been remarkable. Early models struggled with temporal consistency: characters would flicker, objects would morph, and motion appeared unnatural. Today’s leading models have crossed a significant threshold. According to a Cornell University study referenced in 2025 research, the rate at which humans misclassify AI-generated videos as real is steadily climbing, although even top models still fall short of full photorealism in edge cases. This progress sets the stage for comparing Runway and Pika Labs, which represent two distinct philosophies in video AI.

Runway’s approach centers on studio-quality output with granular creative control. The company invested in new infrastructure for multimodal training, combining massive datasets of real-world video and image content. Pika Labs took a different path, prioritizing accessibility and speed through a Discord-based platform that democratizes video creation for creators without technical backgrounds. Understanding these philosophies is essential before diving into features and pricing.

Runway Gen-3 and Gen-4: The Professional Studio Choice

Photorealistic Quality and Physical Accuracy

Runway’s latest model, Gen-4.5, achieved something significant in December 2025: a score of 1,247 ELO points on the Artificial Analysis Text-to-Video benchmark, ranking it #1 globally and surpassing competitors like Google Veo 3 (1,226 points) and OpenAI’s Sora 2 Pro (1,206 points). This isn’t marketing hyperbole—the score reflects thousands of blind human preference tests across motion quality, prompt adherence, and visual fidelity.
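To put the gaps between these scores in perspective, the sketch below converts a rating difference into an expected head-to-head preference rate. It assumes the leaderboard follows the conventional Elo logistic curve with a 400-point scale factor; the exact rating method Artificial Analysis uses is not described here, so treat the outputs as approximate.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B on the standard Elo curve (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Leaderboard scores quoted above.
RUNWAY_GEN45, VEO_3, SORA_2_PRO = 1247, 1226, 1206

print(f"{elo_expected_score(RUNWAY_GEN45, VEO_3):.3f}")       # 0.530
print(f"{elo_expected_score(RUNWAY_GEN45, SORA_2_PRO):.3f}")  # 0.559
```

Under that assumption, a 21-point lead over Veo 3 means Runway is preferred in roughly 53% of blind comparisons, and a 41-point lead over Sora 2 Pro in roughly 56%: first place, but by a narrow margin.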

What this means in practice: Objects in Runway videos move with realistic weight and momentum. Liquids behave according to the laws of physics. Clothing and hair remain consistent during complex motion. Lighting interacts naturally with surfaces and reflections. These aren’t minor refinements; they’re the difference between video that looks “almost right” and video that passes professional scrutiny.

High-fidelity generation in Runway Gen-3/Gen-4 combines advanced diffusion models with temporal modeling that keeps content coherent from frame to frame. The platform generates 10-16 second clips at 1080p natively, with the ability to upscale to 4K. For a 10-second clip, generation typically takes 6-7 minutes, which is slow for rapid experimentation but acceptable when final quality is the priority.

Advanced Creative Controls

Where Runway truly separates itself is in directorial control. Professional filmmakers and creative studios don’t want an AI that guesses—they want an AI they can direct.

Motion Brush lets you draw specific movement paths for objects. Want a character’s hand to gesture left instead of right? Draw it. Camera Control allows you to choreograph exact camera movements: pan, zoom, dolly, tilt. Director Mode combines these with scene composition controls. For image-to-video work, Runway maintains character identity across frames—crucial for narrative projects.

These controls mean professionals can prototype scenes in Runway before committing to expensive on-set filming. Visual effects studios use it for pre-visualization. Advertising agencies create client deliverables without hiring traditional post-production teams.

Character Consistency Breakthrough

One of Runway Gen-4’s defining features is character consistency across multiple shots. In earlier AI video systems, regenerating a scene with a different camera angle meant the character’s appearance would shift. Runway solved this by training the model to maintain identity based on reference images, allowing multi-shot sequences where the same character looks identical across cuts.

This is subtle but transformative for narrative work. You can now generate a scene, then extend it or remix it with consistent characters, something that remains difficult with Pika Labs’ current generation.

Pika Labs 2.2: The Accessible Creator Platform

Speed and Ease of Use

Pika Labs generates a comparable 10-second clip in 1-2 minutes, a significant advantage for creators working under tight deadlines. This speed comes from architectural optimizations: simplified models, efficient sampling methods, and better GPU parallelization.

The interface reinforces this speed philosophy. Pika operates primarily through Discord commands and web-based prompts—no complex UI to learn. Type a description, pick a model, hit generate. The entire workflow is designed for rapid iteration and experimentation.

Creative Features and Effects

Pika 2.2 introduced Pikaframes, enabling smooth keyframe transitions from 1-10 seconds at 1080p resolution. Pikaswaps allows you to modify objects in existing videos using text prompts—effectively video inpainting with AI. Pikadditions lets you add characters or objects to videos with automatic lighting and color matching.

These features are genuinely creative. A marketer can generate a product demo, then use Pikaswaps to change the product color or add a logo. A content creator can film a scene, then use Pikadditions to add animated characters. These workflows are faster and more intuitive than Runway’s approach.

Quality Tradeoffs

Pika outputs up to 1080p, and its overall quality ceiling is lower than Runway’s across most content types. In testing, Pika excels at stylized, artistic, or creative content where slight inconsistencies enhance rather than detract from the aesthetic. For photorealistic work (product photography, professional testimonials, cinematic sequences), Pika often shows visible artifacts: frame blending boundaries, occasional motion inconsistencies, and background stabilization artifacts.

Character consistency remains a challenge. While Pika 2.2 improved significantly, maintaining the exact same character appearance across multiple 10-second generations still requires careful prompting and sometimes multiple attempts. This limits its use for multi-shot narratives without significant post-production work.

Comparative Performance: Real-World Testing

Motion Quality and Physics

Runway Gen-4.5’s physics simulation represents its most significant advantage over Pika Labs. When generating a person walking across a room, Runway renders convincing weight distribution, momentum transfer, and subtle balance corrections. Pika generates smooth motion, but objects sometimes appear weightless, or movements feel interpolated rather than natural.

For product demos, this matters. A smartwatch rotating on a wrist should show realistic hand weight and natural wrist rotation. Runway handles this reliably; Pika requires careful prompting and often still produces slightly unnatural motion.

Resolution and Duration

Runway now supports up to 4K output (with upscaling credits) and can generate sequences up to 40 seconds through extension techniques. Pika maxes at 1080p with 10-second maximum duration.

For YouTube content, Instagram Reels, or TikTok videos, this distinction is less critical—1080p at 10 seconds covers most use cases. For commercial work, broadcast quality, or high-resolution stock video libraries, Runway’s 4K capability becomes essential.

Generation Speed Comparison

Pika’s 1-2 minute generation time wins decisively here. For creators who need to produce 10-20 videos per day, this speed advantage compounds significantly. A Runway creator might generate 8-12 videos in a work day; a Pika creator might produce 30-40 videos with comparable effort.
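A back-of-the-envelope throughput model helps show how per-clip time turns into daily output. This sketch assumes clips are produced one after another (no batching or parallel jobs) in an 8-hour day, uses the midpoints of the generation times quoted earlier (6-7 minutes for Runway, 1-2 minutes for Pika), and adds a hypothetical per-clip "hands-on" time for prompting, reviewing, and re-rolls. The hands-on values are illustrative assumptions, not measurements.

```python
def clips_per_day(gen_minutes: float, hands_on_minutes: float,
                  workday_minutes: float = 480) -> int:
    """Whole clips finished in a workday when clips are produced sequentially.

    gen_minutes: wall-clock generation time per clip.
    hands_on_minutes: hypothetical human time per clip (prompting, review, re-rolls).
    """
    return int(workday_minutes // (gen_minutes + hands_on_minutes))


# Midpoints of the quoted generation times.
RUNWAY_GEN, PIKA_GEN = 6.5, 1.5

# Generation-only ceiling, with no human time at all.
print(clips_per_day(RUNWAY_GEN, 0), clips_per_day(PIKA_GEN, 0))    # 73 320
# Hypothetical hands-on time: 40 min per Runway clip, 12 min per Pika clip.
print(clips_per_day(RUNWAY_GEN, 40), clips_per_day(PIKA_GEN, 12))  # 10 35
```

Because generation alone would allow far more clips than the quoted 8-12 and 30-40 per day, those figures presumably also reflect per-clip human effort, which this sketch treats as a separate, assumed input.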

This speed difference shaped user adoption. Social media creators gravitated toward Pika; professional filmmakers and agencies chose Runway.

Pricing: Where Budget Meets Capability

Runway Pricing Structure

Runway uses a credits system where each second of video costs a specific number of credits:

- Gen-3 Alpha: 10 credits/second

- Gen-3 Alpha Turbo: 5 credits/second

- Gen-4 Video: 12 credits/second

- Gen-4 Turbo: 5 credits/second

Practical pricing translates as:

- Free Plan: 125 credits (roughly 12-25 seconds of video)

- Standard ($12/month): 625 credits monthly

- Pro ($28/month): 2,250 credits monthly

- Unlimited ($95/month): Unlimited generations in Explore Mode plus 2,250 credits monthly for high-quality work

For a 10-second video at 1080p using Gen-4: approximately 120 credits (12 credits/second × 10 seconds). A Pro subscriber could generate about 18 such clips monthly (2,250 ÷ 120 ≈ 18.75). Heavy users gravitate toward the Unlimited plan.
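The same credit arithmetic extends to any model and clip length. A minimal sketch using only the per-second rates and monthly allowances listed above; it ignores upscaling credits, failed generations, and Unlimited’s Explore Mode, which is not metered in credits.

```python
# Credits per second of generated video, from the list above.
CREDITS_PER_SECOND = {
    "Gen-3 Alpha": 10,
    "Gen-3 Alpha Turbo": 5,
    "Gen-4 Video": 12,
    "Gen-4 Turbo": 5,
}

# Monthly credit allowances (Unlimited also includes 2,250 credits, like Pro).
PLAN_CREDITS = {"Free": 125, "Standard": 625, "Pro": 2250}


def clip_cost(model: str, seconds: int) -> int:
    """Credits consumed by one clip of the given length."""
    return CREDITS_PER_SECOND[model] * seconds


def clips_per_month(plan: str, model: str, seconds: int) -> int:
    """Whole clips a plan's monthly credits cover; leftover credits are ignored."""
    return PLAN_CREDITS[plan] // clip_cost(model, seconds)


for model in ("Gen-4 Video", "Gen-4 Turbo"):
    per_plan = {plan: clips_per_month(plan, model, 10) for plan in PLAN_CREDITS}
    print(model, clip_cost(model, 10), per_plan)
# Gen-4 Video 120 {'Free': 1, 'Standard': 5, 'Pro': 18}
# Gen-4 Turbo 50 {'Free': 2, 'Standard': 12, 'Pro': 45}
```

Switching from Gen-4 to Gen-4 Turbo more than doubles how many 10-second clips each plan covers.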

Pika Labs Pricing Structure

Pika’s credit system varies by model complexity, but approximates to:

- Free Plan: 80 credits monthly (watermarked output, no commercial use)

- Standard ($8/month): 700 credits monthly

- Pro ($28/month): 2,300 credits monthly (commercial rights, no watermark)

- Fancy ($76/month): 6,000 credits monthly (fastest generation)

A typical Pika 2.2 text-to-video generation costs 50-80 credits. A Standard subscriber could generate 8-14 videos monthly.
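The same calculation for Pika, assuming every generation costs somewhere in the typical 50-80 credit range quoted above (actual costs vary by model and features):

```python
# Monthly credits per Pika plan, from the list above.
PIKA_PLAN_CREDITS = {"Free": 80, "Standard": 700, "Pro": 2300, "Fancy": 6000}

# Typical credit cost of one Pika 2.2 text-to-video generation: (low, high).
TYPICAL_COST = (50, 80)


def generations_range(plan: str) -> tuple:
    """(worst case, best case) number of whole generations per month."""
    credits = PIKA_PLAN_CREDITS[plan]
    low_cost, high_cost = TYPICAL_COST
    return credits // high_cost, credits // low_cost


for plan in PIKA_PLAN_CREDITS:
    print(plan, generations_range(plan))
# Free (1, 1)
# Standard (8, 14)
# Pro (28, 46)
# Fancy (75, 120)
```

The Standard result reproduces the 8-14 figure above. At the Fancy tier, even the best case of 120 generations falls short of a 200-videos-per-month workload, which is why the high-volume scenario below adds overage costs.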

Cost-per-video analysis: Pika’s lowest-priced paid tier ($8/month) offers better entry-point value. Runway’s Standard plan ($12/month) costs more and, at 625 credits, covers about five 10-second Gen-4 clips (or twelve on Gen-4 Turbo), so serious use requires higher spending.

Real-World Budget Scenarios

| Scenario | Runway Solution | Pika Solution |
|---|---|---|
| Casual experimenter | Free tier (125 credits) | Free tier (80 credits) |
| Social media creator (10 videos/month) | Standard plan ($12) = $1.20 per video | Standard plan ($8) = $0.80 per video |
| Professional producer (50 videos/month) | Pro plan ($28) = $0.56 per video | Pro plan ($28) = $0.56 per video |
| High-volume agency (200+ videos/month) | Unlimited ($95) | Fancy ($76) + overage costs |

The crossover point occurs around 15-20 videos monthly. Below that threshold, Pika’s $8 Standard plan is the more efficient option; above it, both platforms push you toward $28 Pro plans or higher, and the better value depends on clip length and model choice.
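To check a budget scenario against credit allowances rather than plan prices alone, the sketch below picks the cheapest paid plan whose monthly credits cover a given volume. Assumptions: 10-second Runway clips on Gen-4 Turbo (50 credits each) and Pika generations at 65 credits, the midpoint of the 50-80 range. Free tiers and Runway’s Unlimited Explore Mode are excluded, and overages are not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Plan:
    name: str
    price_usd: float
    credits: int


# Paid plans and monthly credit allowances listed earlier.
RUNWAY = [Plan("Standard", 12, 625), Plan("Pro", 28, 2250)]
PIKA = [Plan("Standard", 8, 700), Plan("Pro", 28, 2300), Plan("Fancy", 76, 6000)]


def cheapest_plan(plans: List[Plan], videos: int,
                  credits_per_video: int) -> Optional[Tuple[Plan, float]]:
    """Cheapest plan whose credits cover the monthly volume, plus its cost per video.

    Returns None when no listed plan has enough credits.
    """
    needed = videos * credits_per_video
    covering = [p for p in plans if p.credits >= needed]
    if not covering:
        return None
    best = min(covering, key=lambda p: p.price_usd)
    return best, best.price_usd / videos


def describe(result: Optional[Tuple[Plan, float]]) -> str:
    if result is None:
        return "no listed plan has enough credits"
    plan, per_video = result
    return f"{plan.name} (${plan.price_usd:g}) = ${per_video:.2f} per video"


for videos in (10, 50):
    print(videos, "| Runway:", describe(cheapest_plan(RUNWAY, videos, 50)),
          "| Pika:", describe(cheapest_plan(PIKA, videos, 65)))
```

At 10 videos a month this reproduces the social media row ($1.20 vs. $0.80 per video). At 50 ten-second clips, though, neither $28 Pro plan has enough credits under these assumptions (2,500 Runway credits or about 3,250 Pika credits needed, versus 2,250 and 2,300 available), so the professional scenario presumably assumes shorter or cheaper clips.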

Best Use Cases: Where Each Platform Excels

Choose Runway If You Need:

- Professional filmmaking or commercial content: Advertising, product photography, corporate videos where quality directly impacts perception

- Consistent character representation: Multi-shot narratives, brand ambassadors, character-driven stories

- Precise creative control: Pre-visualization, motion choreography, camera path planning

- High resolution output: 4K-ready content for broadcast, cinema, or premium stock platforms

- Complex physics or interactions: Products with specific movement requirements, action sequences, natural motion behavior

Real example: A luxury watch brand needs product videos for their website. They use Runway to generate photorealistic 4K shots showing the watch from multiple angles with realistic hand movement. The consistent lighting and material rendering make the AI video nearly indistinguishable from traditional cinematography.

Choose Pika Labs If You Need:

- Fast content turnaround: TikTok, Instagram Reels, meme content where speed matters more than polish

- Budget-conscious scaling: Creators operating on limited budgets who need to produce high volumes

- Experimental and artistic content: Stylized animations, creative effects, where constraints enhance the aesthetic

- Rapid prototyping and iteration: Testing ideas quickly before committing resources to professional production

- Beginner-friendly workflow: Creators without technical background or editing experience

Real example: A TikTok creator produces 5-10 videos daily from text prompts. Using Pika, they generate clips, quickly select the best ones, add text overlays and music, then post to multiple platforms. The speed and affordability make this workflow economically viable.

Technical Deep Dive: Why the Quality Difference Matters

Temporal Consistency and Frame Coherence

Runway Gen-4.5 uses a transformer-based architecture combined with advanced diffusion models. This allows the system to model temporal relationships, that is, how frame N leads logically to frame N+1. Objects don’t just move; they move with continuity, gravity, and momentum.

Pika Labs’ architecture prioritizes speed over temporal sophistication. Its sampling methods are more efficient but less precise. This shows in subtle ways: a spinning object might complete its rotation in 10 seconds with Pika, but the spin rate varies slightly from frame to frame. Runway completes the same rotation with smooth, natural changes in speed.
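One hypothetical way to quantify that frame-to-frame variation: track the object’s rotation angle in each frame (extracting those angles, for example with a feature tracker, is outside this sketch) and measure how much the per-frame rotation step varies relative to its mean, i.e. the coefficient of variation. The data below is synthetic, not output from either platform.

```python
import statistics


def spin_rate_jitter(angles_deg):
    """Coefficient of variation of frame-to-frame rotation steps.

    angles_deg: the object's orientation in each frame, in degrees, unwrapped
    (no jumps from 359 back to 0). Returns 0.0 for a perfectly constant spin
    rate; larger values mean the rate wobbles more from frame to frame.
    """
    steps = [b - a for a, b in zip(angles_deg, angles_deg[1:])]
    mean_step = statistics.fmean(steps)
    if mean_step == 0:
        raise ValueError("object does not rotate on average")
    return statistics.pstdev(steps) / abs(mean_step)


# Synthetic data: one full turn over 240 frames (10 s at 24 fps).
steady = [i * 1.5 for i in range(241)]
# Same turn with an alternating +/-0.3 degree wobble added to each frame.
wobbly = [a + (0.3 if i % 2 else -0.3) for i, a in enumerate(steady)]

print(f"steady: {spin_rate_jitter(steady):.3f}")  # steady: 0.000
print(f"wobbly: {spin_rate_jitter(wobbly):.3f}")  # wobbly: 0.400
```

This simple proxy also penalizes deliberate, smooth acceleration; comparing successive differences of the steps instead would separate random jitter from intentional speed changes.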

Prompt Understanding and Adherence

Runway Gen-4.5 scores higher on prompt adherence benchmarks because its training process better captures the relationship between language and visual detail. Describe a “weathered leather jacket with patina,” and Runway generates appropriate surface aging and texture.

Pika interprets the same prompt but often simplifies complex material descriptions. The resulting jacket looks good but less photorealistic.

This matters for professional workflows where client briefs include specific visual requirements. Runway delivers closer approximations on first attempt; Pika often requires iteration or post-production adjustment.

Performance Metrics: 2025 Benchmarking Data

Visual Quality Scoring

According to 2025 AI video detection research, Runway Gen-4 videos achieve a detection difficulty rating of 4.4/5 (where 5 is “impossible to detect”). Pika 2.2 videos rate 3.8/5 on the same scale.

This reflects the gap in photorealism. Experienced evaluators can identify subtle signature patterns in Pika output (frame linking artifacts, background stabilization patterns) within seconds. Runway videos require closer inspection, and evaluators working at that level make more mistakes, including “false positives” in which human-made videos are wrongly flagged as AI.

Generation Efficiency

Frames Per Second (FPS) generation metric: Pika 2.3 achieves approximately 15-20 FPS on capable hardware, a 50% improvement over Pika 2.0. Runway achieves similar FPS but distributes generation across longer, higher-fidelity outputs.

Practical latency: Pika’s average time from prompt submission to first preview is 30-45 seconds. Runway averages 2-3 minutes. For exploratory work, Pika’s lower latency enables tight feedback loops; for final output, Runway’s longer wait pays dividends in quality.

Physics Accuracy Testing

A 2025 test of both platforms generating “coffee being poured into a cup” revealed:

Runway Gen-4.5: Liquid flowed with realistic viscosity, splashed appropriately when impacting the cup’s interior, and settled naturally. Detection: photorealistic.

Pika 2.2: Liquid animated smoothly but moved like particles in a simplified simulation. The motion was natural but lacked weight and dynamic interaction with the cup geometry.

This test exemplifies the core difference: Runway reproduces physical behavior closely; Pika simulates motion convincingly without deep physics modeling.

Industry Adoption and Real-World Results

Professional Studio Adoption

Runway Gen-3 and Gen-4 gained traction in professional environments because the output quality reduces iteration cycles. A visual effects studio reported generating pre-visualization for a 30-second commercial concept in 8 hours (using Runway) versus 3-4 days with manual 3D animation teams.

Disney, Lionsgate, and other studios integrated Runway into their workflows not as a replacement for cinematography but as a rapid prototyping tool. This adoption validates Runway’s positioning in professional markets.

Creator and Influencer Adoption

Pika Labs attracted creators through viral TikTok trends. Creators posted their Pika-generated videos alongside prompts, sparking interest in the platform. The ease-of-use and Discord integration (where creators already congregate) created network effects.

One content creator reported generating 60+ TikTok videos using Pika in a single month, compared to 8-10 using traditional filmmaking. This productivity difference enabled smaller creators to compete with larger production teams on content volume.

Accessibility and User Experience

Learning Curve

Runway’s interface is more complex. Advanced features like Director Mode, Motion Brush, and camera controls require learning. A professional filmmaker typically needs 3-5 hours to become comfortable with the platform. Beginners might struggle with credit management and cost optimization.

Pika’s Discord-based approach lowers barriers. A user can generate their first video within minutes. The constraints (shorter clips, fewer controls) actually simplify the experience. This accessibility drove adoption among Gen-Z creators and non-technical users.

Community and Support

Pika built a Discord community of 100,000+ active creators sharing prompts, techniques, and results. This network effect created learning resources through peer sharing.

Runway’s audience skews more professional, with support through documentation, tutorials, and enterprise customer success teams. The communities serve different needs.

Making Your Decision: A Practical Framework

Use Runway Gen-4.5 if:

- Output quality directly impacts your success: Commercial work, brand reputation, professional deliverables

- You value control over speed: Complex scenes, specific creative vision, multiple iterations needed

- Your projects include human characters: Facial consistency, natural movement, professional appearance matter

- You’re generating 4K or broadcast-quality content: Higher resolution requirements justify the cost and generation time

- You need to integrate with professional workflows: Existing video editing, VFX, or asset management systems

Budget commitment: Minimum $12/month; realistically $28-95/month for serious use

Use Pika Labs if:

- Speed and affordability trump polish: Social media content, experimental ideas, rapid iteration

- You’re producing high volumes of content: 10+ videos weekly where time-efficiency matters most

- Your audience accepts stylized or creative output: TikTok, Instagram Reels, meme content, artistic pieces

- You’re building a portfolio or experimenting: Learning video creation without major financial commitment

- You prefer simplicity over advanced controls: Discord commands feel more natural than complex interfaces

Budget commitment: $8/month to get started; $28+/month for high-volume production (10+ videos weekly exceeds the Standard plan’s 8-14 generations per month)

The Future of AI Video: 2025 and Beyond

Recent research suggests the gap between AI-generated and real video will continue narrowing. Cornell University’s 2025 study found that humans now misclassify video from top-tier models as authentic nearly 39% of the time, up from 15% just 18 months earlier.

Runway’s Gen-4.5 benchmark score of 1,247 ELO represents a significant jump over 2024’s leading models. Google’s Veo 3 followed at 1,226 ELO. This competitive pressure is driving rapid improvements across all platforms.

Pika’s roadmap focuses on expanding video duration (targeting 30+ seconds), improving character consistency, and adding more stylistic controls. The platform won’t match Runway’s photorealism focus but will improve speed and creative flexibility.

By 2026, the expectation is that AI video generation becomes as commonplace as AI image generation. Professional tools like Runway will mature into essential studio software. Accessible tools like Pika will become standard for content creators.

Conclusion: Realistic Video Generation Has Arrived

The question “Which is better for realistic video?” has a clear answer: Runway Gen-3/Gen-4.5 delivers photorealism; Pika Labs delivers creative speed.

For professionals, commercial applications, and projects where quality directly impacts perception, Runway’s investment in photorealistic generation, physics accuracy, and creative control justifies the higher cost and longer generation times. The 1,247 ELO score isn’t marketing—it reflects thousands of blind preference tests confirming Runway’s visual superiority.

For creators prioritizing output volume, budget efficiency, and artistic experimentation, Pika Labs remains unmatched. The 1-2 minute generation time and $8/month entry point democratize video creation for audiences traditionally locked out by cost and complexity.

The most sophisticated creators and studios now maintain subscriptions to both platforms, deploying Runway for client work and Pika for rapid prototyping and social content. This hybrid approach leverages each platform’s strengths while minimizing tradeoffs.

In 2025, realistic AI-generated video is no longer theoretical—it’s operational. The only question is which tool matches your needs, budget, and creative priorities.


Source: K2Think.in — India’s AI Reasoning Insight Platform.