In 2025, the question “Can teachers detect AI?” has become as important as teaching itself. With 68% of teachers now using AI detection tools, according to K-12 Dive, educators face a critical challenge: distinguishing genuine student work from AI-generated content while avoiding catastrophic false accusations. The reality is more complex than the marketing claims suggest. Most AI detectors claim 95-99% accuracy, yet independent research reveals a murkier picture of flawed tools, evasion techniques, and innocent students being wrongly flagged for academic misconduct.

This article examines the current landscape of AI detection in 2025—the tools teachers rely on, their actual performance, vulnerabilities to new attack methods, and most importantly, practical strategies that go beyond algorithms to protect academic integrity fairly.

Understanding How AI Detectors Actually Work

Modern AI detection tools don’t identify AI the way you might think. Most do not rely on comparing your text to a database of known ChatGPT outputs, though some do this as well. Instead, they analyze linguistic patterns that tend to appear in machine-generated text.

The two most common metrics are:

Perplexity: How unpredictable the word choices are to a language model. AI models tend to pick statistically predictable words, so their text scores low, while human writers surprise the model more often.

Burstiness: Variation in sentence length and structure. Human writing naturally fluctuates—short punchy sentences followed by longer, complex ones. AI writing tends to be more uniform.
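To make the two metrics concrete, here is a minimal sketch. It is not how any commercial detector is implemented: the token probabilities are hypothetical stand-ins for what a language model would assign, and burstiness is approximated as the coefficient of variation of sentence lengths, which is only one simple proxy.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity: exp of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text):
    """Rough proxy: standard deviation of sentence length divided by the mean."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical per-token probabilities (assumed values, not real model output).
predictable = [0.9, 0.8, 0.85, 0.9]
surprising = [0.2, 0.05, 0.4, 0.1]

uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat on the branch."
varied = "Stop. The storm that had been building over the hills all afternoon finally broke. Rain fell."

print("perplexity, predictable:", round(perplexity(predictable), 2))
print("perplexity, surprising: ", round(perplexity(surprising), 2))
print("burstiness, uniform:", round(burstiness(uniform), 2))
print("burstiness, varied: ", round(burstiness(varied), 2))
```

The predictable sequence scores a lower perplexity than the surprising one, and the evenly paced paragraph scores a lower burstiness than the varied one. A careful human writer can land on the "AI-like" side of both numbers, which is where the trouble starts.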

When you put text through a detector like GPTZero or Originality.ai, the tool measures these patterns against billions of training examples and assigns a probability score. Simple, right? Not quite.

The problem is that human writing varies enormously by context, culture, and writer. A student translating their thoughts into formal academic English might produce text that statistically resembles AI output. A non-native English speaker writing methodically might get flagged. Someone with neurodivergence who naturally structures writing in repeating patterns could be accused of cheating.

The Accuracy Problem: What Tools Actually Claim vs. What They Actually Do

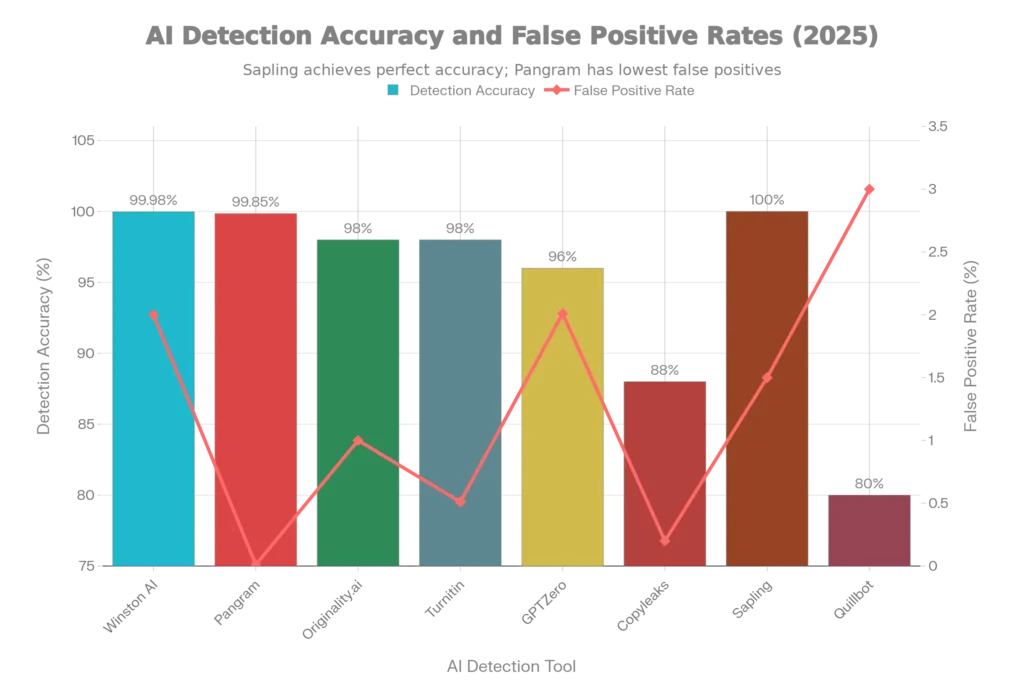

The chart above reveals a significant gap between marketing claims and real-world performance.

Top performers in 2025:

- Winston AI: Claims 99.98% accuracy on pure AI text, with a 2% false positive rate

- Pangram: The lowest false positive rate at 0.01% (1 in 10,000), with 99.85% accuracy, according to Nature

- Originality.ai: Reports 98% accuracy with around a 1% false positive rate

- Turnitin: Claims 98% accuracy but admits a false positive rate between 0.51% and 4%, depending on configuration

- GPTZero: Achieves 96% accuracy on short-form content and 94% on longer pieces, but independent testing found a real-world false positive rate of 2.01%—double what the company claims

But here’s the catch: A 1% false positive rate sounds acceptable until you do the math. In a high school of 2,000 students each submitting one essay, that “1%” means 20 innocent students get falsely accused. At a large university with 100,000 submissions annually, a 1% false positive rate becomes 1,000 wrongly flagged submissions.
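The arithmetic is easy to rerun for your own school. This sketch multiplies a submission count by a false positive rate, assuming (as the examples above do) that every submission is honestly human-written. The function name is made up for illustration, and the rates are the ones quoted in this article.

```python
def expected_false_flags(human_submissions, false_positive_rate):
    """Expected number of honest submissions wrongly flagged as AI."""
    return human_submissions * false_positive_rate


# Submission counts and rates quoted in this article.
settings = [("high school", 2_000), ("large university", 100_000)]
rates = [("0.01%", 0.0001), ("1%", 0.01), ("4%", 0.04)]

for place, submissions in settings:
    for label, rate in rates:
        flagged = expected_false_flags(submissions, rate)
        print(f"{place}, {label} false positive rate: about {flagged:,.1f} wrongly flagged")
```

At 1% this reproduces the 20 and 1,000 figures above. At the 4% upper end Turnitin admits to, the same university would see about 4,000 wrongly flagged submissions.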

Academic research paints an even grimmer picture. A 2025 Stanford University study analyzing over 10,000 text samples found false positive rates exceeding 20% for non-native English speakers and creative writing. The Journal of Educational Technology documented that Originality.ai incorrectly identified 15% of certain text types as AI-generated.

A particularly troubling finding from NIH research: When academic abstracts were tested with multiple detectors, the results were wildly inconsistent. ZeroGPT gave an original published article a 27.55% AI likelihood score, while GPTZero rated the same human-authored text at only 5.88%. Different tools, dramatically different conclusions on identical text.

The Real Killer: Evasion Techniques Render Detection Useless

The worst part? False positives are not the only way these tools fail. According to recent academic research, sophisticated evasion techniques can completely disable even state-of-the-art detectors.

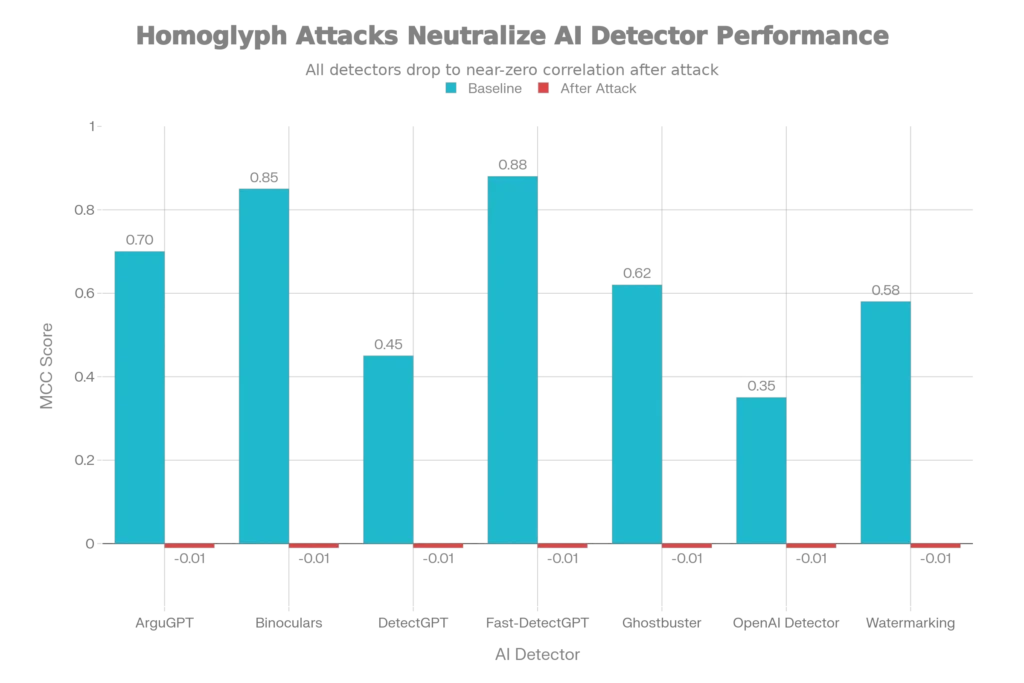

The chart above shows something alarming: homoglyph attacks, where students replace standard letters with nearly identical Unicode lookalikes (like replacing the Latin ‘a’ with the Cyrillic ‘а’), can reduce detector effectiveness from near-perfect to completely broken. Research presented at the 2025 Workshop on GenAI Content Detection found that homoglyph attacks drop the Matthews Correlation Coefficient (a measure of classification quality that ranges from -1 to 1, where 0 means no better than chance) from an average of 0.64 to -0.01 across all tested detectors, including ArguGPT, Binoculars, DetectGPT, Fast-DetectGPT, Ghostbuster, and even OpenAI’s own detector.
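For readers unfamiliar with the Matthews Correlation Coefficient, the sketch below computes it from a confusion matrix. The counts are hypothetical and chosen only to show the two extremes. Returning 0 when the denominator is zero is a common convention, not something taken from the paper.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient from confusion-matrix counts."""
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention: if any row or column of the matrix is empty, report 0.
    return numerator / denominator if denominator else 0.0


# Hypothetical counts: 100 AI essays and 100 human essays.
working = mcc(tp=90, tn=90, fp=10, fn=10)     # detector mostly right
collapsed = mcc(tp=0, tn=100, fp=0, fn=100)   # everything labeled "human"

print("working detector:  ", working)    # 0.8
print("collapsed detector:", collapsed)  # 0.0
```

A detector that labels every essay the same way scores 0, no matter how many essays it happens to get right. That is why a drop to -0.01 means the detectors were doing no better than guessing.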

What does that mean in plain English? A motivated student could make an AI-generated essay undetectable just by swapping in characters that look identical on screen. The researchers explicitly noted that this attack “can effectively circumvent state-of-the-art detectors, leading them to classify all texts as either AI-generated or human-written”.

Teachers using these tools have no way of knowing their detector was defeated. The essay looks perfect, gets a normal score, and nobody’s the wiser.
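One cheap pre-check, which is not taken from the research above, is to look for words that mix alphabets before trusting any detector score. The sketch below uses Python's standard `unicodedata` module and treats the first word of each character's Unicode name as a rough script label. That is an assumption that works for Latin, Cyrillic, and Greek letters but is far from a complete confusables check, and legitimate multilingual writing can trigger it too.

```python
import unicodedata


def script_of(ch):
    """Rough script label: the first word of the character's Unicode name."""
    return unicodedata.name(ch, "UNKNOWN").split()[0]


def mixed_script_words(text):
    """Return (word, scripts) pairs for words whose letters span several scripts."""
    flagged = []
    for word in text.split():
        scripts = {script_of(ch) for ch in word if ch.isalpha()}
        if len(scripts) > 1:
            flagged.append((word, sorted(scripts)))
    return flagged


clean = "A plain Latin sentence about climate."
# Swap every lowercase Latin 'a' for U+0430 CYRILLIC SMALL LETTER A.
spoofed = clean.replace("a", "\u0430")

print("looks the same, compares equal?", clean == spoofed)
print("flags in clean text:  ", mixed_script_words(clean))
print("flags in spoofed text:", mixed_script_words(spoofed))
```

A hit here is a reason to ask questions, not a verdict: a student quoting Russian or Greek sources will write in more than one script for perfectly good reasons, although mixing scripts inside a single word is unusual.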

Comparing the Best Free Tools Available in 2025

If schools can’t afford enterprise solutions, what are the realistic options? Here are the most commonly recommended free or freemium detectors:

ZeroGPT – According to 2025 testing by Hastewire, ZeroGPT achieved 92% accuracy on a diverse dataset, with the fewest false positives among free tools. It correctly identified 98 out of 100 pure AI samples and maintained reasonable accuracy on hybrid human-AI content. The interface is straightforward: paste in the text, click analyze, and get a percentage score with a sentence-level breakdown.

GPTZero – Arguably the most popular among educators, GPTZero achieved 96% accuracy on short-form content and 94% on longer essays in independent testing, though real-world false positive rates are around 2.01%. It’s particularly effective for detecting unedited ChatGPT output but struggles with paraphrased content. The free tier limits you to five scans per month but gives full features.

Quillbot AI Detector – Tested at 80% accuracy, Quillbot offers a simpler alternative aimed at students and writers. The free version provides basic detection without complex interfaces. However, its lower accuracy makes it best used as a supplementary tool rather than primary verification.

Sapling – Known for near-perfect accuracy on pure AI text (100% on ChatGPT in some tests) and exceptional performance on human writing with minimal false positives, Sapling is lightweight and browser-based. It’s ideal for teachers wanting quick checks without registration.

Copyleaks AI Content Detector – Available free with limited scans, Copyleaks combines AI detection with plagiarism checking. In independent testing, it achieved around 88% overall accuracy but struggles with creative writing styles. The education integrations (LMS plugins) are valuable for schools considering institutional adoption.

The key finding: no free detector is simultaneously highly accurate, free of false positives, and reliable across all writing types. Every tool involves tradeoffs.

When AI Detectors Get It Catastrophically Wrong: The Human Cost

The abstract statistics hide real suffering. In 2024-2025, documented cases of false accusations ranged from professional consequences to academic expulsion threats.

Case at University X (2025): Out of 142 essays submitted in a psychology class, an AI detector flagged 28 submissions (roughly 20%) as AI-generated. An independent audit later confirmed that 22 of these 28 essays were human-written, meaning about 79% of the flags were wrong. One student, Mia Chen, described receiving the accusation email: “I felt my world crumble. I’d poured my soul into that paper, and suddenly I was a cheater.” The ordeal included mandatory integrity workshops, referrals to academic conduct offices, and grade penalties before her work was ultimately exonerated.

False accusations in professional settings: A tech firm suspended an employee based on an AI detector falsely flagging a project report. A legal assistant in a corporate law office was demoted after a false positive from detection software. Both cases resulted in reputational damage and psychological harm that extended beyond the immediate accusation.

Reddit testimonies: Across education forums, students report receiving failing or reduced grades on assignments they wrote themselves, with AI detection as the only “evidence.” One student received a C on a paper they typed independently; another got a zero despite writing the work themselves.

The pattern is clear: When an AI detector says “guilty,” students face life-altering consequences while the accusation is still technically unproven.

Best Practices for Teachers: Beyond Tools, Toward Fairness

Rather than relying on imperfect detection software as judge and jury, the most effective educators combine multiple approaches—tools as circumstantial evidence, not proof.

1. Ask Students to Explain Their Work

One of the simplest and most effective checks: Have a conversation. Ask a student to explain their reasoning, walk you through the essay’s argument, or answer follow-up questions. Someone who used AI to write the essay will struggle to elaborate on nuances or defend fabricated details. This oral examination works because the conversation doesn’t need to be accusatory—it’s part of authentic assessment.

Example: After receiving an essay, ask the student to identify the three strongest sources they used and explain why one surprised them. If the student can’t retrieve this information without rereading their own paper, that’s a red flag worth investigating further.

2. Compare Against the Student’s Writing History

Sudden shifts in tone, vocabulary, and structure are suspicious—but only in context. If a student has been writing informal, casual analyses all semester and suddenly submits a piece with academic formality and perfect syntax, that warrants investigation.

The key: Look for inconsistency as a pattern, not absolute quality. A student improving their writing is normal. A student’s work becoming unrecognizable is the actual red flag.

3. Design Assignments AI Can’t Fake

This is the hardest but most effective approach: redesign what you ask students to do.

Instead of “Write an essay on climate change,” try:

- “Write a personal reflection connecting climate change to an experience in your community”

- “Debate your classmate’s position on climate policy in real-time (recorded or in-class)”

- “Create a drawing or diagram explaining a concept, with written annotations”

- “Submit an outline, draft, and revision with dated timestamps showing your thinking process”

Teachers at High Tech High report that when students are genuinely engaged in project-based learning on topics they’re passionate about, they don’t want to use AI to shortcut the work. The investment in authentic learning reduces motivation to cheat.

4. Use AI Detectors as Part of a Larger Verification Process, Not Proof

If you do use detection tools, treat results as circumstantial evidence requiring human review—never as automatic grounds for accusation.

Implementation approach:

- Run flagged work through multiple detectors. If only one tool flags it, that’s weak evidence

- Request the student revise the flagged section and explain their revision process

- Conduct an oral exam covering the work’s content

- Review the student’s previous submissions for consistency

- Consider the student’s background—non-native English speakers, neurodivergent students, and others with naturally structured writing styles face disproportionate false positives

Never, under any circumstances, should an AI detector result alone determine consequences. The tools are too flawed, the stakes too high.
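As a rough illustration of that principle, the sketch below turns several detector scores into a next step for the teacher and never into a verdict. The function name, the 0.5 threshold, and the wording of each step are assumptions made for this example, not settings from any real tool.

```python
def triage(scores, threshold=0.5):
    """Map detector scores (0 to 1) to a next step. Never returns a verdict."""
    flags = [name for name, score in scores.items() if score >= threshold]
    if not flags:
        return "no action"
    if len(flags) == 1:
        return "weak signal: compare with the student's writing history first"
    return "human review: talk with the student and look at drafts"


# Scores from the NIH example earlier in this article, expressed as fractions.
published_abstract = {"ZeroGPT": 0.2755, "GPTZero": 0.0588}
print(triage(published_abstract))

# Hypothetical scores where only one tool raises a flag.
print(triage({"Detector A": 0.91, "Detector B": 0.12}))
```

Even the strongest outcome here is "human review". Whatever threshold you pick, the output should start a conversation rather than decide a consequence.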

5. Teach AI Literacy, Not Prohibition

Educators increasingly recognize that fighting AI is futile—it’s ubiquitous and students will encounter it throughout their careers.

Instead, educators at leading institutions teach responsible AI use:

- Discuss when AI is helpful (brainstorming, editing for clarity) versus when it’s harmful (substituting for original thinking)

- Require students to cite AI assistance like any other source

- Have students generate AI text, then critique its limitations and inaccuracies

- Build lessons around AI bias, hallucinations, and how to verify AI outputs

This approach is supported by Frontiers in Education research, which suggests that students who understand AI’s strengths and limitations make more ethical choices than students taught to fear detection.

The Uncomfortable Truth: Teachers Can Detect AI, But It’s Complicated

Can teachers detect AI in 2025?

Yes—but not through tools alone.

The best teachers use AI detectors as one signal among many, combined with professional judgment developed through knowing their students’ writing over time, designing assessments that require authentic engagement, and maintaining transparent conversations about AI use.

Current detection tools will improve. Researchers are developing more robust models, and companies are refining algorithms. But for now, no tool achieves 100% accuracy, and every tool carries the risk of devastating false positives. OpenAI abandoned its own AI detector because it was unreliable. Pangram Labs created a specialized tool achieving 1-in-10,000 false positives, but it comes with institutional adoption costs.

The tools that work best for schools in 2025 are those that:

- Combine multiple detection methods

- Use AI detection as preliminary evidence, not conclusive proof

- Prioritize human oversight and student voice

- Account for demographic differences in writing patterns

- Treat AI detection as an educational conversation rather than an enforcement mechanism

Teachers deserve better tools. Students deserve fair judgment. For now, both require teachers to think like skeptics, maintain curiosity about their students’ work, and recognize that the real power to detect AI lies in knowing how your students think—not in what an algorithm claims to know.

Source: K2Think.in — India’s AI Reasoning Insight Platform.