K2-Think is a 32-billion-parameter open-source reasoning model that offers frontier-class performance with notable parameter efficiency. It targets organizations that want advanced problem-solving capabilities without the computational cost of much larger models. As businesses increasingly value systematic reasoning over raw prediction, K2-Think is being applied across diverse sectors, from financial services to academic research, government operations, and software development. Knowing where K2-Think delivers the most value is essential for organizations planning to integrate advanced reasoning into their operational workflows.

Understanding K2-Think’s Technical Foundation and Competitive Advantages

K2-Think, developed by Abu Dhabi’s Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) in partnership with G42, is an open-source model that challenges the conventional wisdom that larger always means better in AI reasoning. Built on Alibaba’s Qwen 2.5 base model, K2-Think achieves performance metrics that match or surpass much larger systems, some up to twenty times its size, including GPT-OSS 120B and DeepSeek V3.1. This achievement stems from six technical pillars: long chain-of-thought supervised fine-tuning, reinforcement learning with verifiable rewards, agentic planning prior to reasoning, test-time scaling through best-of-N sampling, speculative decoding, and inference optimization on Cerebras wafer-scale hardware.
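Of the six pillars, best-of-N sampling is the easiest to illustrate in a few lines. The sketch below is a minimal illustration of the general technique, not K2-Think's actual implementation: the `generate` and `score` callables, the stub generator, the exact-match verifier, and the default `n=3` are all hypothetical stand-ins for a real model call and a real verifier.

```python
from typing import Callable, Iterable, Tuple


def best_of_n(
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    prompt: str,
    n: int = 3,
) -> Tuple[str, float]:
    """Sample n candidate answers and return the best one with its score."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt) for _ in range(n)]
    scored = [(score(prompt, candidate), candidate) for candidate in candidates]
    # max() keeps the first candidate when several share the top score.
    best_score, best_candidate = max(scored, key=lambda pair: pair[0])
    return best_candidate, best_score


def make_stub_generator(answers: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a model call: returns canned answers in order."""
    remaining = iter(answers)
    return lambda prompt: next(remaining)


def exact_match_verifier(expected: str) -> Callable[[str, str], float]:
    """Hypothetical verifier: 1.0 for the expected answer, 0.0 otherwise."""
    return lambda prompt, candidate: 1.0 if candidate.strip() == expected else 0.0


if __name__ == "__main__":
    generator = make_stub_generator(["398", "408", "418"])
    answer, answer_score = best_of_n(
        generator, exact_match_verifier("408"), "What is 17 * 24?", n=3
    )
    print(answer, answer_score)
```

The trade-off is straightforward: each extra sample costs one more generation, so the approach pays off only when a reliable scorer exists to pick among the candidates.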

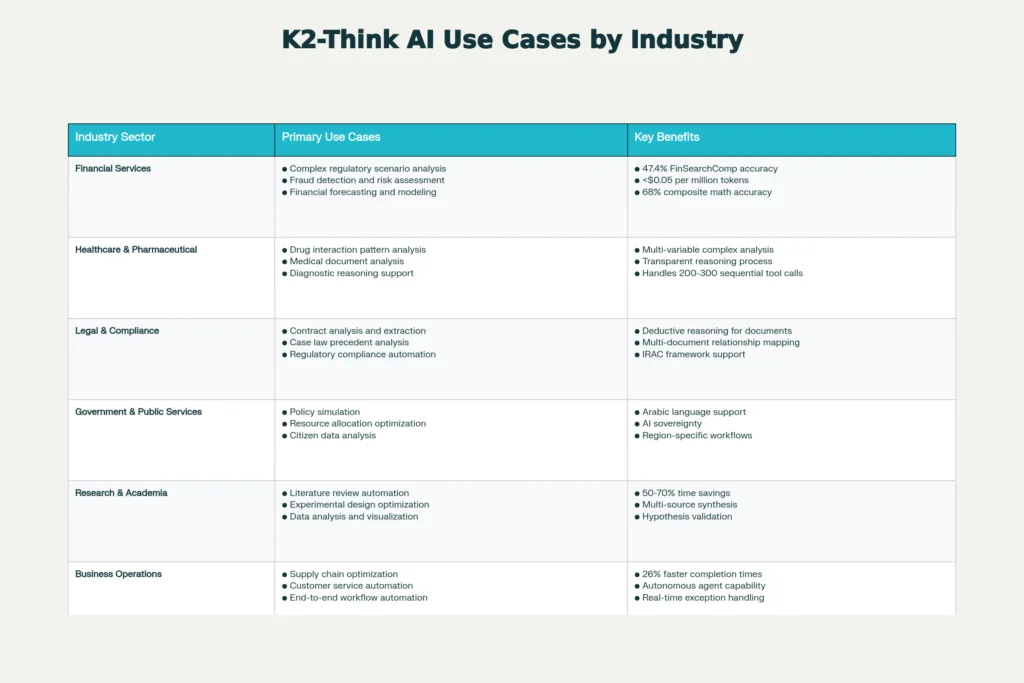

K2-Think use cases are transforming AI applications across industries.

The model’s dense 32-billion-parameter architecture delivers strong efficiency metrics: K2-Think processes approximately 2,000 tokens per second on Cerebras hardware, roughly ten times faster than comparable systems running on NVIDIA H100 GPUs. Inference costs less than five cents per million tokens when deployed on Cerebras hardware, substantially lower than competing frontier models. Native INT4 quantization, described as providing a lossless 2x speed improvement, and a 256,000-token context window that can hold extensive documents and codebases without chunking address bottlenecks that have historically limited reasoning model adoption.
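To make these figures concrete, the following back-of-the-envelope sketch turns the quoted throughput and price into wall-clock time and cost. The defaults come from the numbers above (about 2,000 tokens per second, five cents per million tokens as an upper bound); the function names and the example workload sizes are illustrative assumptions.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 2000.0) -> float:
    """Time to generate num_tokens at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def cost_usd(num_tokens: int, usd_per_million_tokens: float = 0.05) -> float:
    """Cost of num_tokens at a flat per-million-token price."""
    return num_tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    long_answer = 32_000  # hypothetical length of one long reasoning trace
    print(generation_seconds(long_answer))          # at the quoted throughput
    print(generation_seconds(long_answer, 200.0))   # at one tenth of that throughput
    print(cost_usd(10_000_000))                     # upper bound for 10M tokens
```

At the quoted rate a 32,000-token reasoning trace takes 16 seconds, against 160 seconds at one tenth of that throughput, which is the difference between an interactive and a batch experience.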

Financial Services and Risk Analysis: Complex Reasoning at Scale

Financial institutions face increasingly complex regulatory requirements, multi-variable risk assessment challenges, and fraud detection scenarios that demand systematic reasoning capabilities. K2-Think delivers measurable value across these domains by enabling financial professionals to automate reasoning tasks that previously required significant expert human analysis.

Regulatory Scenario Analysis and Compliance: Financial services firms can leverage K2-Think to reason through complex regulatory scenarios, analyzing multiple regulatory frameworks simultaneously and identifying potential compliance gaps before they materialize. The model’s ability to maintain 200-300 sequential tool calls without performance degradation proves particularly valuable when integrating multiple regulatory databases, precedent systems, and compliance documentation. A financial services firm can deploy K2-Think to analyze new financial products against evolving regulatory landscapes across multiple jurisdictions, automatically cross-referencing regulatory provisions and identifying exception cases that require human review. The transparent reasoning process enables compliance teams to audit decision-making logic, a critical requirement in regulated industries where explainability matters as much as accuracy.
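A sequential tool-calling workflow with an auditable trail can be sketched as a bounded loop. This is a generic illustration under stated assumptions, not K2-Think's API: `plan_next_step` stands in for the model deciding the next call, the `lookup_rule` tool and jurisdiction names are made up, and the 300-call cap simply mirrors the upper figure quoted above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

ToolCall = Tuple[str, str]        # (tool name, argument)
LogEntry = Tuple[str, str, str]   # (tool name, argument, result)


@dataclass
class AuditTrail:
    entries: List[LogEntry] = field(default_factory=list)
    completed: bool = False  # True only if the planner signalled it was done


def run_tool_loop(
    plan_next_step: Callable[[List[LogEntry]], Optional[ToolCall]],
    tools: Dict[str, Callable[[str], str]],
    max_calls: int = 300,
) -> AuditTrail:
    """Run tool calls one after another, logging each for later review."""
    trail = AuditTrail()
    for _ in range(max_calls):
        step = plan_next_step(trail.entries)
        if step is None:  # planner has nothing more to do
            trail.completed = True
            return trail
        name, argument = step
        tool = tools.get(name)
        result = tool(argument) if tool else f"ERROR: unknown tool {name!r}"
        trail.entries.append((name, argument, result))
    # Budget exhausted before the planner signalled completion.
    return trail


def demo_planner(history: List[LogEntry]) -> Optional[ToolCall]:
    """Hypothetical planner: check two jurisdictions, then stop."""
    pending = [("lookup_rule", "jurisdiction-A"), ("lookup_rule", "jurisdiction-B")]
    return pending[len(history)] if len(history) < len(pending) else None


if __name__ == "__main__":
    demo_tools = {"lookup_rule": lambda name: f"summary of rules for {name}"}
    for entry in run_tool_loop(demo_planner, demo_tools).entries:
        print(entry)
```

Keeping every call and result in the trail is what lets a compliance team replay how a conclusion was reached, and the `completed` flag distinguishes a finished analysis from one that ran out of budget.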

Fraud Detection and Financial Forecasting: K2-Think’s reasoning capabilities enable fraud detection systems to identify suspicious patterns across transaction networks by reasoning through multi-step cause-and-effect relationships. Unlike statistical approaches that may miss novel fraud patterns, K2-Think can establish causal reasoning chains, analyzing transaction sequences, temporal patterns, and behavioral anomalies to determine fraud likelihood. Financial forecasting scenarios benefit from K2-Think’s ability to integrate data from multiple sources—market data, economic indicators, historical patterns, and expert judgment—into coherent predictive models. The model achieved 47.4% on FinSearchComp benchmarks, significantly outperforming baseline systems, demonstrating its capability in financial analysis workflows. The cost advantage proves especially important for financial services: institutions deploying K2-Think incur approximately one-sixth the cost per token compared to GPT-5 and one-quarter the cost compared to Claude, enabling more extensive analysis runs and more comprehensive scenario modeling within fixed budgets.

Healthcare and Pharmaceutical Innovation: Multi-Variable Analysis for Drug Discovery

The pharmaceutical industry has historically invested enormous resources in computational drug discovery, with primary barriers being computational cost and the complexity of analyzing multi-variable biological systems. K2-Think’s accessible reasoning capabilities democratize access to sophisticated analysis typically available only to large pharmaceutical companies.

Drug Interaction and Compound Analysis: Pharmaceutical researchers can deploy K2-Think to analyze complex drug interactions by systematically evaluating molecular structures, biological pathways, and interaction mechanisms simultaneously. The model’s transparent reasoning process allows researchers to understand not just which compounds might be promising candidates, but why the model arrived at that conclusion—essential for validating computational predictions before proceeding to wet-lab validation. K2-Think’s ability to orchestrate 200-300 sequential tool calls supports multi-stage analytical workflows: molecular docking analysis, interaction scoring, pathway mapping, and literature cross-referencing can all occur within a single coherent reasoning process.

Diagnostic Reasoning Support: Healthcare systems can implement K2-Think to support diagnostic reasoning by analyzing patient data, medical histories, symptom patterns, and relevant medical literature to generate differential diagnoses and treatment recommendations. While physician oversight remains essential, K2-Think provides structured reasoning that forces systematic evaluation of all relevant clinical parameters, reducing cognitive overload and decision bias. The model’s 256,000-token context window enables analysis of extensive patient records, medical imaging reports, laboratory results, and relevant clinical guidelines simultaneously, establishing comprehensive clinical reasoning in a single pass.

Legal Technology and Compliance: Contract Analysis and Precedent Research

Legal professionals and compliance teams handle enormous document volumes where manual analysis creates bottlenecks and increases error risk. K2-Think’s reasoning capabilities dramatically accelerate legal workflows while maintaining the systematic reasoning essential for legal analysis.

Contract Analysis and Risk Identification: Law firms and corporate legal departments can deploy K2-Think to analyze contracts systematically, extracting key terms, identifying potential risks, comparing provisions against standard language, and flagging unusual clauses that require specialized attention. Unlike simple extraction tools that merely pull data from documents, K2-Think reasons through contractual language, understanding how different provisions interact and identifying second-order implications. The model excels at finding relationships and nuance across large datasets, particularly with dense, unstructured information like legal contracts that span hundreds of pages. Legal professionals note that reasoning models work particularly well when “finding a needle in the haystack”—extracting only the most relevant information from extensive contract provisions—and in “finding relationships and nuance across a large dataset,” where the model identifies parallels between documents and draws conclusions based on implicit patterns in the data.

Precedent Analysis and Legal Reasoning: Legal researchers can use K2-Think to search through case law databases, analyzing precedent relationships, identifying supporting and contradictory precedents, and establishing legal reasoning chains that connect multiple cases to current questions. The model’s transparent reasoning process enables lawyers to see exactly which precedents influenced conclusions and how legal logic chains together, supporting compelling legal argumentation. Researchers have developed legal reasoning benchmarks specifically for evaluating model performance on multi-step legal analysis, with K2-Think demonstrating strong performance on IRAC (Issue-Rule-Application-Conclusion) framework tasks—the standard approach for legal reasoning.

Government Services and Policy Analysis: Data-Driven Decision Making

Government agencies increasingly need sophisticated analytical tools to optimize public services, allocate resources efficiently, and predict policy outcomes. K2-Think’s open-source nature, affordability, and transparency make it particularly suitable for government deployments where AI sovereignty and accountability matter significantly.

Policy Simulation and Impact Analysis: Government agencies can deploy K2-Think to simulate policy scenarios, reasoning through how policy changes would likely affect different populations, economic sectors, and geographic regions. The model’s reasoning transparency proves especially valuable in government contexts where decision-making must withstand public scrutiny and policy impacts affect millions of citizens. Officials can examine the reasoning process to ensure policy analysis considered all relevant factors and didn’t inadvertently introduce bias.

Resource Allocation and Budget Optimization: K2-Think enables governments to analyze resource allocation scenarios more systematically, considering competing needs, geographic distribution requirements, seasonal variations, and economic multiplier effects. Budget officers can reason through trade-offs between different allocation strategies, understanding second-order consequences of allocation decisions. The model’s support for Arabic language workflows and region-specific policy ontologies makes it particularly valuable for Middle Eastern and North African governments seeking to deploy AI systems that reflect regional governance priorities.

Research and Academic Applications: Accelerating Discovery

Academic researchers face exponential growth in scholarly publications, making comprehensive literature reviews increasingly difficult while systematic analysis becomes more valuable. K2-Think addresses this challenge by automating the most time-consuming aspects of research workflows.

Literature Review Automation: Research teams can deploy K2-Think to accelerate systematic literature reviews, automating discovery, initial screening, data extraction, and synthesis stages that traditionally consume months of human effort. The model systematically analyzes abstracts and full texts, extracting structured data according to predetermined extraction templates, identifying contradictions across studies, and synthesizing findings from multiple papers into coherent frameworks. Researchers report 50-70% time savings on data extraction and initial synthesis tasks, enabling projects that might traditionally require 8-12 weeks to be completed in 3-4 weeks with AI assistance. K2-Think’s ability to maintain context across multiple documents and recognize relationships between studies proves particularly valuable when synthesizing findings across large literature corpora.
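A predetermined extraction template and a contradiction check might look like the sketch below. The `StudyRecord` fields, the helper names, and the sample records are hypothetical; in practice the template would follow the review protocol, and the records would come from model output validated by a researcher.

```python
from dataclasses import dataclass, fields
from typing import Dict, List, Optional


@dataclass
class StudyRecord:
    """Hypothetical extraction template: one row per included study."""
    title: str
    year: int
    sample_size: Optional[int]
    outcome: str
    effect_direction: str  # "positive", "negative", or "null"


def missing_fields(raw: Dict[str, object]) -> List[str]:
    """List template fields absent from a raw extraction result."""
    return [f.name for f in fields(StudyRecord) if f.name not in raw]


def conflicting_outcomes(records: List[StudyRecord]) -> Dict[str, List[str]]:
    """Return outcomes for which studies report different effect directions."""
    directions: Dict[str, set] = {}
    for record in records:
        directions.setdefault(record.outcome, set()).add(record.effect_direction)
    return {
        outcome: sorted(seen)
        for outcome, seen in directions.items()
        if len(seen) > 1
    }


if __name__ == "__main__":
    # Made-up records purely for illustration.
    studies = [
        StudyRecord("Study A", 2021, 120, "recall", "positive"),
        StudyRecord("Study B", 2023, None, "recall", "negative"),
        StudyRecord("Study C", 2022, 80, "latency", "null"),
    ]
    print(conflicting_outcomes(studies))
    print(missing_fields({"title": "Study D", "year": 2024}))
```

Checking for missing fields before synthesis keeps incomplete extractions from silently entering the review, and the conflict report gives reviewers a short list of outcomes to inspect by hand.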

Experimental Design Optimization: Research teams can use K2-Think to reason through experimental design decisions, considering multiple variables simultaneously and predicting potential confounding factors. The model’s reasoning transparency enables researchers to understand the logic behind design recommendations and validate them against their domain expertise. For empirical research involving hypothesis testing, K2-Think can structure validation logic, suggesting counter-evidence to test and helping researchers anticipate challenges before expensive experimental work begins.

Research Report Generation: Academic researchers can leverage K2-Think to draft comprehensive research reports, integrating findings from multiple sources with proper citation attribution and structured reasoning about findings. The model’s superior performance on writing tasks—achieving approximately 73.8% on long-form writing benchmarks—combined with strong reasoning capabilities enables generation of technically sophisticated academic content with minimal editing. The 30% reduction in factual errors when using research-first reasoning patterns makes K2-Think particularly valuable for creating literature reviews where accuracy matters critically.

Business Operations and Supply Chain Automation: Autonomous Reasoning

Enterprise operations increasingly demand autonomous decision-making across complex, multi-step workflows. K2-Think enables organizations to deploy reasoning agents capable of independent problem-solving with human oversight for exception cases.

Supply Chain Optimization: Organizations can deploy K2-Think-powered autonomous agents to identify supply chain anomalies, analyze root causes, and propose solutions without human intervention at each step. These agents continuously monitor supply chain data, detect disruptions from geopolitical events or demand fluctuations, and automatically reroute shipments or adjust inventory levels based on systematic reasoning about supply chain constraints. Human oversight remains available for high-risk decisions, but routine optimization occurs autonomously. GlobalLogistics Inc.’s Intelligent Supply Chain Optimizer case study demonstrates how K2-Think enables autonomous analysis of complex logistics scenarios, detecting patterns invisible to reactive systems.

Customer Service Automation: Customer service organizations can implement K2-Think-powered agents that handle end-to-end resolution processes, accessing ERP systems, CRM databases, logistics portals, and finance systems to resolve customer issues without manual routing. These agents understand ambiguous customer requests, navigate multiple backend systems, handle exceptions gracefully, and escalate complex cases to human agents with complete context already gathered. K2-Think shows a 26% improvement in completion times on batch enrichment tasks over baseline models that lack automatic retries, which translates into faster customer resolutions and reduced operational costs.
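Automatic retries around a flaky backend call are commonly implemented with exponential backoff. The sketch below shows that general pattern and makes no claim about how K2-Think or any specific agent framework does it; the function name, the defaults, and the choice of retryable exception types are assumptions.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on transient errors with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error for escalation
            # Delay doubles each time: base_delay, 2 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
            attempt += 1


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky_crm_lookup() -> str:
        """Hypothetical backend call that fails twice before succeeding."""
        calls["count"] += 1
        if calls["count"] < 3:
            raise ConnectionError("temporary outage")
        return "ticket resolved"

    print(call_with_retries(flaky_crm_lookup, base_delay=0.01))
```

Only errors listed in `retry_on` are retried, so genuine failures such as invalid input still surface immediately and can be escalated to a human agent.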

Intelligent Workflow Orchestration: Business operations teams can use K2-Think to orchestrate complex multi-step business processes, automatically determining which workflows to trigger, coordinating across departments, and handling exception cases intelligently. The model’s capacity for extended tool orchestration—maintaining performance across 200-300 sequential tool calls where most models degrade after 30-50 steps—enables coordination of processes far more complex than traditional workflow management systems can handle.

Content Creation and Publishing: SEO-Optimized Generation

Content creation organizations seeking to maintain publishing volumes while improving quality and consistency benefit substantially from K2-Think’s reasoning-enhanced writing capabilities.

SEO-Optimized Article Generation: Content teams can deploy K2-Think to generate comprehensive, SEO-optimized articles by reasoning through content strategy, research requirements, keyword integration, and structural organization before generating content. The model’s writing-first reasoning pattern enables 15-20% faster article generation compared to research-first patterns, while maintaining strong factual accuracy when combined with appropriate validation steps. K2-Think’s 256,000-token context window enables simultaneous processing of extensive research materials, competitive analysis, and style guidelines, maintaining consistency across large content corpora.

Data-Aware Writing with Citation: Publishers requiring cited content can leverage K2-Think’s structured output capabilities to generate research-backed articles with automatic citation attribution. The model reasons through available sources, tracks source origins, and integrates citations seamlessly into narrative text, enabling creation of complex documents like white papers, research reports, and comprehensive guides that would require extensive manual work to properly cite and verify.

Content Planning and Strategy: Content teams can use K2-Think to develop comprehensive content strategies by reasoning through audience needs, competitive positioning, keyword opportunities, and content gaps simultaneously. The model’s planning-before-execution approach ensures content strategies address audience needs systematically rather than opportunistically.

Software Development and Code Analysis: Production-Level Development Support

Software development teams can leverage K2-Think’s strong coding performance to accelerate development workflows, automate testing, and improve code quality.

Code Analysis and Debugging: Development teams can deploy K2-Think to analyze complex codebases, identify bugs through systematic reasoning about program logic, and suggest fixes. The model achieved 71.3% on SWE-Bench Verified, placing it among the leading systems for production-level coding tasks. K2-Think’s reasoning transparency proves particularly valuable for code review, enabling developers to understand the logic behind suggested improvements or security warnings.

Multi-Step Development Workflows: K2-Think can handle complex development projects spanning ideation to functional products, maintaining coherent reasoning across hundreds of development steps. The model can propose architectures, identify potential issues, generate component code, coordinate across multiple files, and validate functionality—all within a single reasoning process that respects software engineering constraints and best practices.

Performance: K2-Think achieved 83.1% on LiveCodeBench v6 and 48.7% on OJ-Bench (C++), demonstrating elite performance on competitive programming tasks that demand systematic algorithmic reasoning.

Deploying K2-Think: Implementation Considerations and Best Practices

Organizations implementing K2-Think should consider deployment models, infrastructure requirements, and integration patterns that optimize value realization.

On-Premise Deployment: K2-Think can be deployed on an organization’s own infrastructure using serving frameworks like SGLang and vLLM, running on Kubernetes clusters with NVIDIA GPUs for high-performance inference. This deployment model keeps data within organizational infrastructure, addressing concerns about sensitive data being sent to external APIs. Organizations can manage scaling based on workload patterns and integrate K2-Think with existing infrastructure and authentication systems.
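vLLM can expose an OpenAI-compatible HTTP server, so a self-hosted deployment can be queried with a plain chat-completions request. The sketch below uses only the Python standard library. The base URL, the model identifier `LLM360/K2-Think`, and the `max_tokens` value are assumptions that should be replaced with whatever the local deployment actually uses.

```python
import json
import urllib.request


def build_chat_request(
    prompt: str,
    base_url: str = "http://localhost:8000/v1",   # assumed local server address
    model: str = "LLM360/K2-Think",               # assumed model identifier
    max_tokens: int = 1024,
) -> urllib.request.Request:
    """Build a chat-completions request for an OpenAI-compatible server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize the key risks in this clause: ...")
    print(req.full_url)
    # print(send(req))  # uncomment once a server is running at base_url
```

Because the request never leaves `base_url`, pointing it at an in-cluster service address keeps prompts and responses inside the organization's network.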

Performance Optimization: While K2-Think reaches roughly 2,000 tokens per second on Cerebras hardware, organizations using standard GPU infrastructure should implement speculative decoding and batching strategies to maximize throughput. Native INT4 quantization stores each weight in 4 bits instead of 16, cutting weight memory by roughly 75% compared to FP16 and enabling deployment on more modest hardware.
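The effect of weight precision on memory can be estimated with simple arithmetic. The sketch below counts weight storage only, using the 32-billion-parameter figure from this article; it ignores the KV cache, activations, and quantization metadata such as scales, so real deployments need additional headroom.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    if bits_per_weight <= 0:
        raise ValueError("bits_per_weight must be positive")
    return num_params * bits_per_weight / 8 / 1e9


def reduction_vs(baseline_bits: int, quantized_bits: int) -> float:
    """Fraction of weight memory saved by moving to fewer bits per weight."""
    return 1.0 - quantized_bits / baseline_bits


if __name__ == "__main__":
    params = 32e9  # 32 billion parameters
    print(weight_memory_gb(params, 16))  # FP16
    print(weight_memory_gb(params, 4))   # INT4
    print(reduction_vs(16, 4))
```

For a 32-billion-parameter model this works out to about 64 GB of weights at FP16 and about 16 GB at INT4, which is what moves the model from multi-GPU territory toward a single accelerator.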

Human-in-the-Loop Design: The most effective K2-Think implementations incorporate human oversight for critical decisions, using K2-Think for initial analysis, reasoning, and exception identification while reserving final decisions for domain experts. This approach combines the systematic reasoning benefits of K2-Think with human judgment, creating organizational resilience against potential model errors.
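One simple way to express this division of labor is a routing rule that sends critical or low-confidence analyses to an expert. The sketch below is illustrative only: the `Analysis` fields, the confidence score (which could come from a verifier or a calibration step), and the 0.8 threshold are hypothetical choices, not values from any K2-Think deployment.

```python
from dataclasses import dataclass


@dataclass
class Analysis:
    summary: str
    confidence: float  # hypothetical score in [0, 1]
    critical: bool     # True when the decision is high-stakes


def route(analysis: Analysis, min_confidence: float = 0.8) -> str:
    """Decide whether an analysis needs expert sign-off."""
    if not 0.0 <= analysis.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if analysis.critical or analysis.confidence < min_confidence:
        return "expert_review"
    return "auto_proceed"


if __name__ == "__main__":
    print(route(Analysis("Routine invoice mismatch", 0.93, critical=False)))
    print(route(Analysis("Possible sanctions exposure", 0.97, critical=True)))
    print(route(Analysis("Ambiguous clause wording", 0.55, critical=False)))
```

Critical decisions always go to a person regardless of the score, so a confidently wrong model output cannot bypass review on the cases that matter most.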

Comparative Advantages and Competitive Positioning

K2-Think’s positioning relative to competing reasoning systems affects where organizations should prioritize its deployment.

Compared to GPT-5, K2-Think excels at long, tool-heavy workflows where tool orchestration and extended autonomous operation matter more than free-form creativity. Organizations prioritizing cost efficiency benefit substantially from deploying it in cost-sensitive workflows, since K2-Think costs a fraction of the price of GPT-5. Its transparency, with complete reasoning processes exposed through dedicated API fields, is an advantage in domains that require explainability, such as legal services and healthcare.

Versus Claude systems, K2-Think offers superior performance on mathematical reasoning (99.1% on AIME 2025 with Python tools versus Claude’s strong but lower performance) and significantly lower cost. K2-Think’s native tool orchestration trained from the ground up provides more stable extended workflows compared to Claude’s strong but bolt-on agentic capabilities.

Against open-source alternatives like DeepSeek, K2-Think’s fully transparent training process, which documents the post-training techniques used for reasoning development, enables reproducibility and community improvement. Its throughput of 2,000 tokens per second on Cerebras hardware substantially outpaces publicly available benchmark results for competing open-source models.

Emerging Opportunities and Future Directions

K2-Think’s trajectory suggests expanding applications across additional domains as the reasoning model ecosystem matures. The model’s Arabic language capabilities and region-specific customization features position it well for government and enterprise adoption across Middle Eastern economies. Academic institutions are beginning to explore K2-Think for specialized reasoning tasks in domains like quantum computing simulation, financial modeling, and policy analysis. Healthcare institutions increasingly consider K2-Think for diagnostic decision support systems, where the transparent reasoning process provides clinicians confidence in model outputs.

Organizations that have invested in reasoning model experimentation report discovering unexpected high-value use cases as teams become familiar with model capabilities. Support ticket analysis automation, risk assessment workflows, and anomaly detection systems often emerge as surprising high-value applications after initial deployment.

Conclusion

K2-Think represents a meaningful advancement in accessible reasoning technology, combining frontier-class reasoning performance with parameter efficiency, transparency, and affordability that democratizes access to advanced reasoning capabilities. The model’s best use cases span financial analysis, pharmaceutical research, legal document processing, government policy simulation, academic research acceleration, autonomous supply chain management, content creation, and software development—essentially any domain where systematic, multi-step reasoning about complex information creates competitive advantage.

Organizations should prioritize K2-Think deployment for high-volume workflows where reasoning quality has measurable impact, where tool orchestration and autonomous operation reduce labor costs, and where transparency and explainability matter for stakeholder trust. The model’s exceptional parameter efficiency and transparent reasoning process make it particularly valuable for organizations managing sensitive data where explainability and reproducibility drive technology adoption decisions.

The rapid adoption of K2-Think across diverse sectors confirms that the next phase of enterprise AI will prioritize intelligent reasoning over raw prediction, systematic analysis over pattern matching, and transparent decision-making over black-box optimization. Organizations recognizing this shift and deploying K2-Think strategically position themselves to extract maximum value from advanced reasoning capabilities as AI reasoning becomes as essential to enterprise operations as data analytics has become over the past decade.

Source: K2Think.in — India’s AI Reasoning Insight Platform.